Learning Debiased Models with Dynamic Gradient Alignment and Bias-conflicting Sample Mining

Paper and Code

Nov 25, 2021

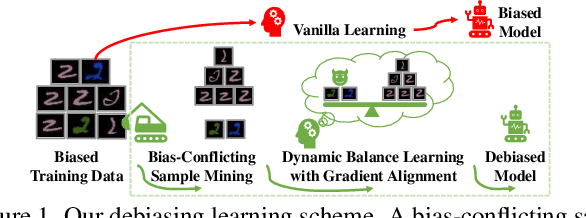

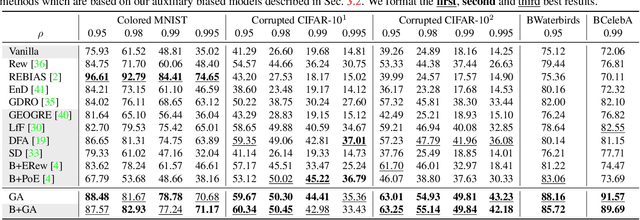

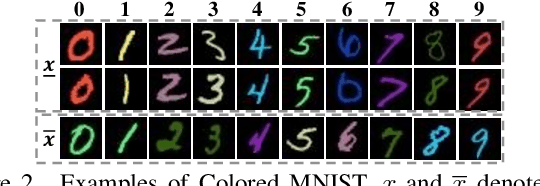

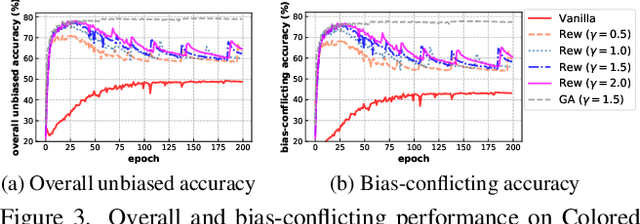

Deep neural networks notoriously suffer from dataset biases which are detrimental to model robustness, generalization and fairness. In this work, we propose a two-stage debiasing scheme to combat against the intractable unknown biases. Starting by analyzing the factors of the presence of biased models, we design a novel learning objective which cannot be reached by relying on biases alone. Specifically, debiased models are achieved with the proposed Gradient Alignment (GA) which dynamically balances the contributions of bias-aligned and bias-conflicting samples (refer to samples with/without bias cues respectively) throughout the whole training process, enforcing models to exploit intrinsic cues to make fair decisions. While in real-world scenarios, the potential biases are extremely hard to discover and prohibitively expensive to label manually. We further propose an automatic bias-conflicting sample mining method by peer-picking and training ensemble without prior knowledge of bias information. Experiments conducted on multiple datasets in various settings demonstrate the effectiveness and robustness of our proposed scheme, which successfully alleviates the negative impact of unknown biases and achieves state-of-the-art performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge