Learning and Transferring Value Function for Robot Exploration in Subterranean Environments

Paper and Code

Apr 07, 2022

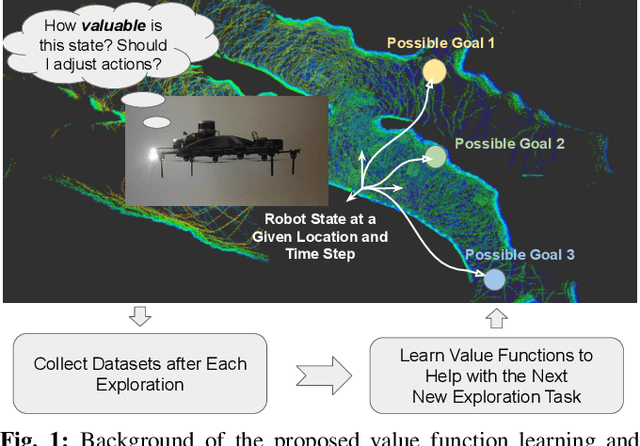

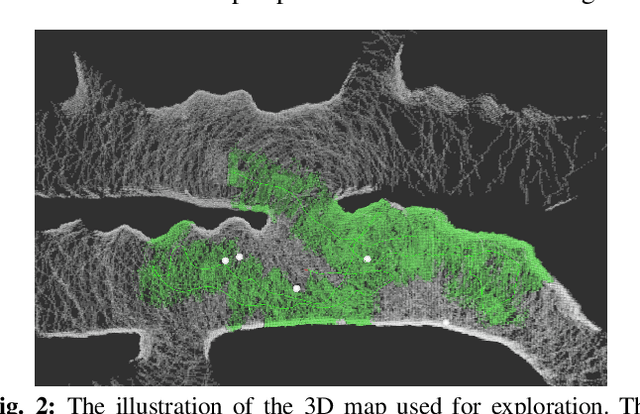

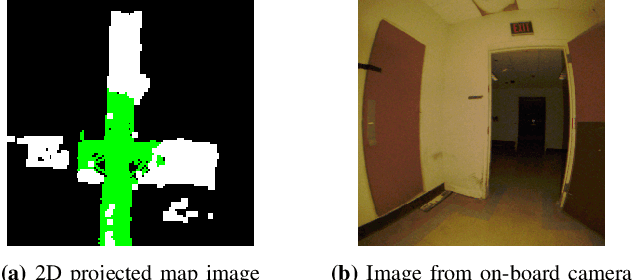

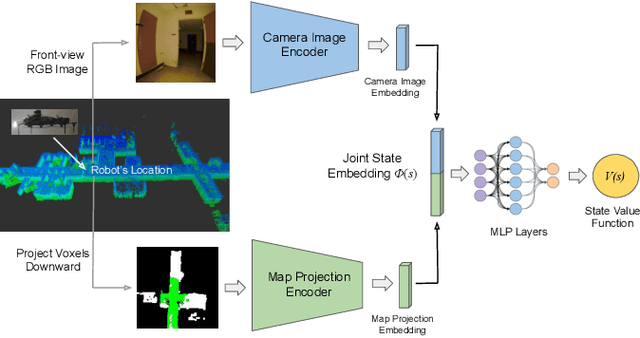

Traditional robot exploration methods usually give the robot no prior bias about the environment it is exploring. The robot therefore assigns equal importance to all goals, which leads to inefficient exploration. Alternatively, a hand-tuned policy is often used to tweak the value of goals. In this paper, we present a method to learn how "good" states are, as measured by the state value function, to provide a hint for the robot's exploration decisions. We propose to learn state value functions from previously collected offline datasets and then to transfer and improve the value function during testing in a new environment. Moreover, these environments usually provide very little, or even no, extrinsic reward or feedback to the robot, so in this work we also tackle the problem of sparse extrinsic rewards. We design several intrinsic rewards to encourage the robot to obtain more information during exploration. These reward functions then become the building blocks of the state value functions. We test our method on challenging subterranean and urban environments. To the best of our knowledge, this work is the first to demonstrate value function prediction with previously collected datasets to help exploration in challenging subterranean environments.
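
The abstract does not give the exact reward or value definitions, so the following is only a minimal sketch of how per-step intrinsic rewards could serve as building blocks of a state value. It assumes the standard discounted-return definition, G_t = r_t + gamma * G_{t+1}, computed over a logged trajectory. The function name `discounted_returns`, the reward values, and the discount factor are hypothetical illustrations, not the paper's implementation.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float = 0.99) -> List[float]:
    """Compute Monte Carlo value targets G_t = r_t + gamma * G_{t+1}.

    `rewards` is one logged trajectory of per-step intrinsic rewards.
    The returned list has one value target per visited state.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk the trajectory backwards so each step reuses the next step's return.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Hypothetical intrinsic rewards, e.g. how much new map area each step revealed.
intrinsic_rewards = [4.0, 0.0, 2.0]
targets = discounted_returns(intrinsic_rewards, gamma=0.5)
print(targets)
```

Targets of this kind, gathered from an offline dataset, could be used to fit a value function by regression before it is refined in a new environment; the choice of regressor and of intrinsic reward is left open here.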
