Learn Task First or Learn Human Partner First? Deep Reinforcement Learning of Human-Robot Cooperation in Asymmetric Hierarchical Dynamic Task


Mar 01, 2020

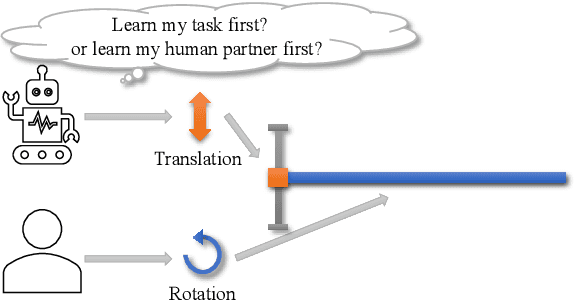

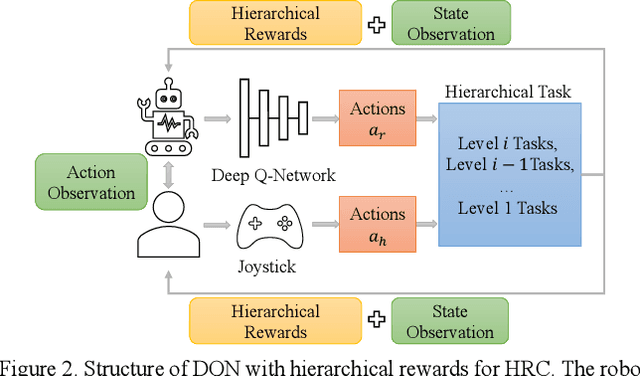

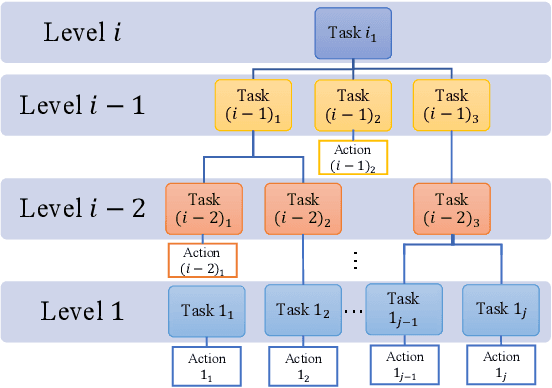

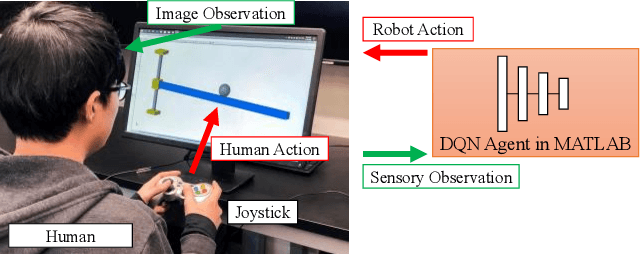

Deep reinforcement learning is a promising approach to human-robot cooperation (HRC) because it performs well when robots learn complex tasks. However, its applicability in real-world settings is limited by three factors: long training times, the added training difficulty caused by inconsistent human performance, and the inherent instability of policy exploration. Under this approach, the robot has two sets of dynamics to learn: how to accomplish the given physical task and how to cooperate with the human partner. Furthermore, the dynamics of the task and of the human partner are usually coupled, so the observable outcomes and behaviors are coupled as well, and it is hard for the robot to learn efficiently from coupled observations. In this paper, we hypothesize that the robot needs to learn the task separately from learning the behavior of the human partner in order to improve learning efficiency and outcomes. This leads to a fundamental question: should the robot learn the task first or learn the human behavior first (Fig. 1)? We develop a novel hierarchical reward mechanism with a task decomposition method that enables the robot to efficiently learn both a complex hierarchical dynamic task and human behavior for better HRC. The algorithm is validated on a hierarchical control task in a simulated environment with human-subject experiments, and we answer the question by analyzing the collected experimental results.
