LCS-DIVE: An Automated Rule-based Machine Learning Visualization Pipeline for Characterizing Complex Associations in Classification

Apr 26, 2021

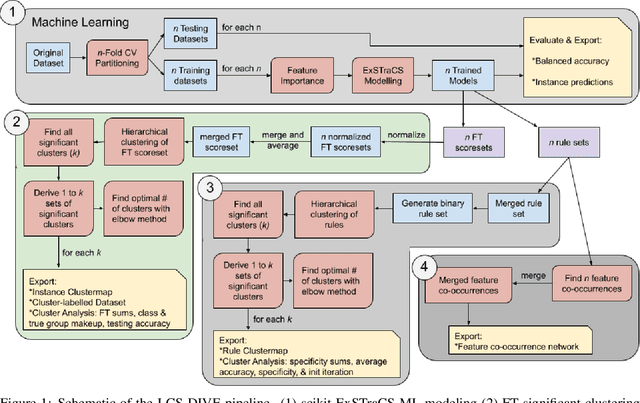

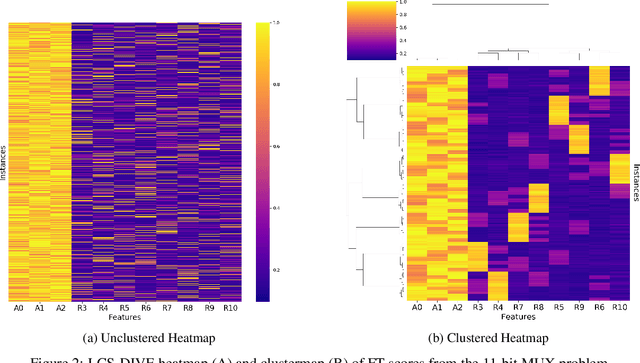

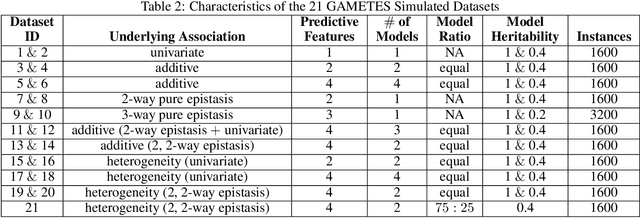

Machine learning (ML) research has yielded powerful tools for training accurate prediction models despite complex multivariate associations (e.g., interactions and heterogeneity). In fields such as medicine, improved interpretability of ML modeling is required for knowledge discovery, accountability, and fairness. Rule-based ML approaches such as Learning Classifier Systems (LCSs) strike a balance between predictive performance and interpretability in complex, noisy domains. This work introduces the LCS Discovery and Visualization Environment (LCS-DIVE), an automated LCS model interpretation pipeline for complex biomedical classification. LCS-DIVE conducts modeling using a new scikit-learn implementation of ExSTraCS, an LCS designed to overcome noise and scalability challenges in biomedical data mining, yielding human-readable IF:THEN rules as well as feature-tracking scores for each training sample. LCS-DIVE leverages feature-tracking scores and/or rules to automatically guide characterization of (1) feature importance, (2) underlying additive, epistatic, and/or heterogeneous patterns of association, and (3) model-driven heterogeneous instance subgroups via clustering, visualization generation, and cluster interrogation. LCS-DIVE was evaluated over a diverse set of simulated genetic and benchmark datasets encoding a variety of complex multivariate associations, demonstrating its ability to differentiate between them, and was then applied to characterize associations within a real-world study of pancreatic cancer.
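To make the clustering step more concrete, the following is a minimal sketch of how per-instance feature-tracking scores might be summarized and clustered into heterogeneous subgroups. It does not use LCS-DIVE or ExSTraCS: the score matrix is synthetic, the variable names are hypothetical, and Ward-linkage hierarchical clustering from SciPy is an assumed choice rather than the pipeline's confirmed method.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

rng = np.random.default_rng(0)

# Synthetic stand-in for feature-tracking scores (an assumption, not real
# ExSTraCS output): one row per training instance, one column per feature.
n_per_group, n_features = 50, 6
scores = rng.uniform(0.0, 0.1, size=(2 * n_per_group, n_features))
scores[:n_per_group, [0, 1]] += 1.0   # subgroup A is predicted via features 0 and 1
scores[n_per_group:, [2, 3]] += 1.0   # subgroup B is predicted via features 2 and 3

# (1) A simple feature-importance estimate: sum each feature's score
# over all instances.
importance = scores.sum(axis=0)

# (3) Group instances with similar score profiles using agglomerative
# clustering, then cut the tree into two clusters.
tree = linkage(scores, method="ward")
labels = fcluster(tree, t=2, criterion="maxclust")

# Cluster interrogation: the mean score profile of each cluster shows
# which features drive predictions for that subgroup.
for cluster_id in np.unique(labels):
    profile = scores[labels == cluster_id].mean(axis=0)
    top = np.argsort(profile)[::-1][:2]
    print(f"cluster {cluster_id}: top features {sorted(top.tolist())}")
```

In this toy setting the two clusters recover the two simulated subgroups, each dominated by a different pair of features, which is the signature of heterogeneous association that the abstract describes. A heatmap of `scores` with rows ordered by `labels` would be one way to visualize the same structure.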