Layer-Wise Adaptive Updating for Few-Shot Image Classification

Paper and Code

Jul 16, 2020

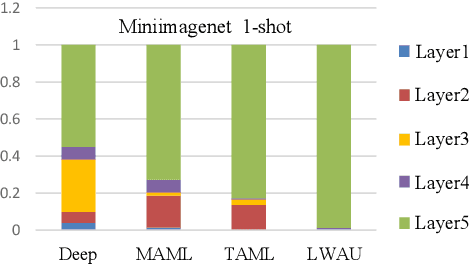

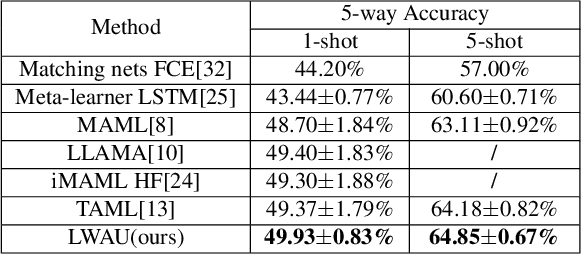

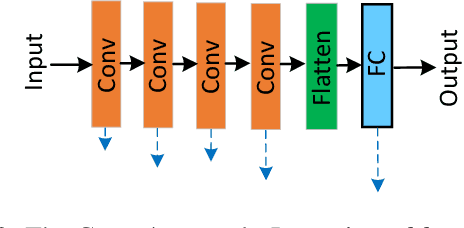

Few-shot image classification (FSIC), which requires a model to recognize new categories by learning from only a few images of each category, has attracted considerable attention. Recently, meta-learning based methods have emerged as a promising direction for FSIC. Commonly, they train a meta-learner (a meta-learning model) to learn weights that are easy to fine-tune; when solving an FSIC task, the meta-learner efficiently fine-tunes itself into a task-specific model by updating on the few images of that task. In this paper, we propose a novel meta-learning based layer-wise adaptive updating (LWAU) method for FSIC. LWAU is inspired by an interesting finding: compared with common deep models, the meta-learner pays much more attention to updating its top layer when learning from few images. Based on this finding, we hypothesize that the meta-learner may greatly prefer updating its top layer over updating its bottom layers for better FSIC performance. Therefore, in LWAU, the meta-learner is trained to learn not only an easy-to-fine-tune model but also its preferred layer-wise adaptive updating rule, which improves its learning efficiency. Extensive experiments show that, with the layer-wise adaptive updating rule, the proposed LWAU: 1) outperforms existing few-shot classification methods by a clear margin; 2) learns from few images at least 5 times more efficiently than existing meta-learners when solving FSIC.
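To make the idea of a layer-wise updating rule concrete, the following is a minimal sketch of one inner-loop adaptation step. It assumes the rule takes the form of one step size per layer, which the abstract does not specify; the function `layerwise_inner_update`, the layer names, and all numeric values are hypothetical and chosen only for illustration. The outer loop that would meta-learn the step sizes is not shown.

```python
from typing import Dict, List

# Parameters and gradients are stored per layer as flat lists of floats.
Params = Dict[str, List[float]]


def layerwise_inner_update(
    params: Params, grads: Params, layer_lrs: Dict[str, float]
) -> Params:
    """Return task-specific parameters after one inner-loop step.

    Assumed rule (not taken from the paper): each layer l has its own
    step size alpha_l, so theta_l' = theta_l - alpha_l * grad_l.
    """
    adapted: Params = {}
    for name, weights in params.items():
        alpha = layer_lrs[name]
        adapted[name] = [w - alpha * g for w, g in zip(weights, grads[name])]
    return adapted


# Hypothetical two-layer meta-learner; all values are illustrative only.
params = {"bottom": [1.0, 2.0], "top": [3.0, 4.0]}
grads = {"bottom": [1.0, 1.0], "top": [1.0, 1.0]}

# A rule that favors the top layer: the bottom layer is left untouched.
layer_lrs = {"bottom": 0.0, "top": 0.5}

adapted = layerwise_inner_update(params, grads, layer_lrs)
print(adapted)
```

With these illustrative step sizes, only the top layer moves during adaptation, which mirrors the preference for top-layer updates described above. A single shared step size for all layers would instead update every layer equally.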
