Latent Feature Relation Consistency for Adversarial Robustness

Paper and Code

Mar 29, 2023

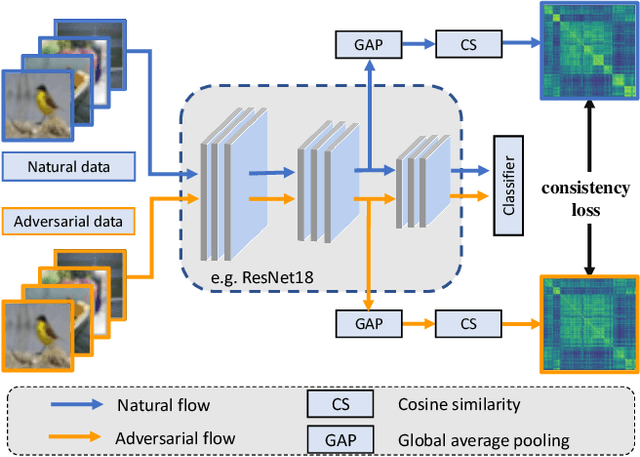

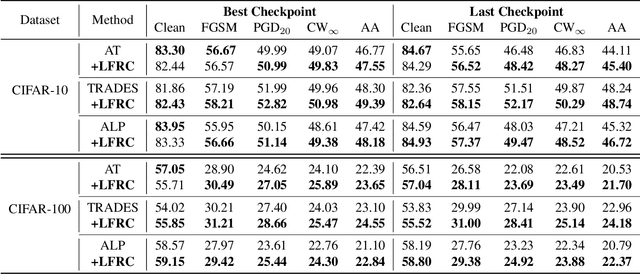

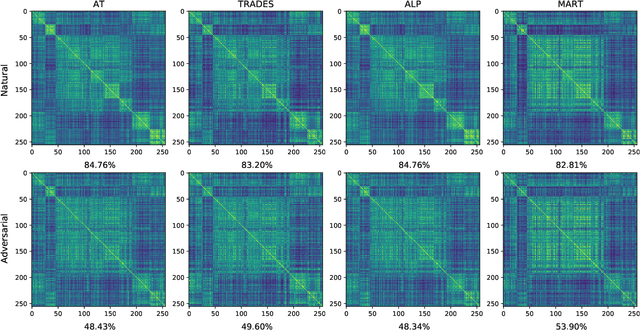

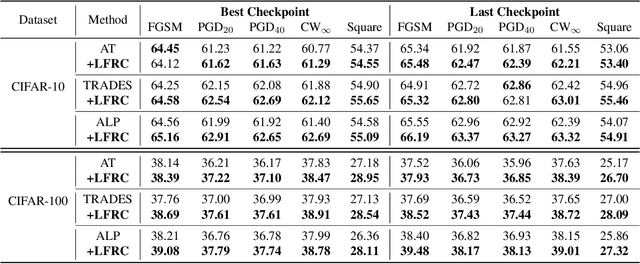

Deep neural networks have been applied in many computer vision tasks and achieved state-of-the-art performance. However, misclassification will occur when DNN predicts adversarial examples which add human-imperceptible adversarial noise to natural examples. This limits the application of DNN in security-critical fields. To alleviate this problem, we first conducted an empirical analysis of the latent features of both adversarial and natural examples and found the similarity matrix of natural examples is more compact than those of adversarial examples. Motivated by this observation, we propose \textbf{L}atent \textbf{F}eature \textbf{R}elation \textbf{C}onsistency (\textbf{LFRC}), which constrains the relation of adversarial examples in latent space to be consistent with the natural examples. Importantly, our LFRC is orthogonal to the previous method and can be easily combined with them to achieve further improvement. To demonstrate the effectiveness of LFRC, we conduct extensive experiments using different neural networks on benchmark datasets. For instance, LFRC can bring 0.78\% further improvement compared to AT, and 1.09\% improvement compared to TRADES, against AutoAttack on CIFAR10. Code is available at https://github.com/liuxingbin/LFRC.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge