Knowledge-aided Federated Learning for Energy-limited Wireless Networks


Sep 25, 2022

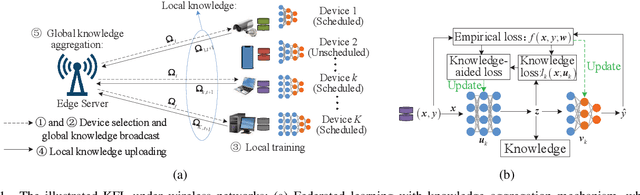

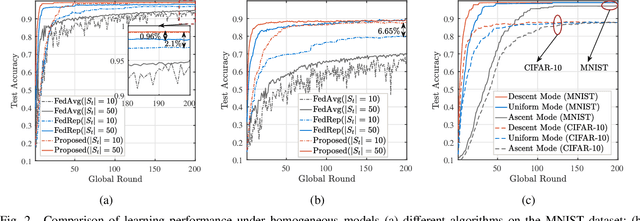

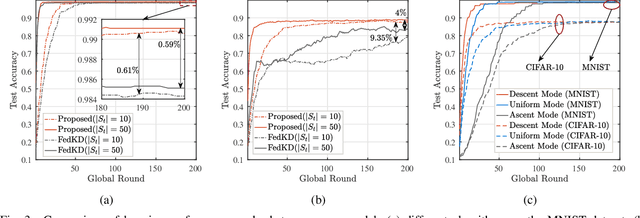

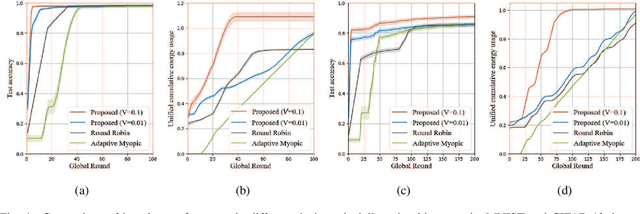

Conventional model aggregation-based federated learning (FL) approaches require all local models to share the same architecture and therefore cannot support practical scenarios with heterogeneous local models. Moreover, frequent model exchange is costly for resource-limited wireless networks, since modern deep neural networks usually have more than a million parameters. To tackle these challenges, we first propose a novel knowledge-aided FL (KFL) framework, which aggregates lightweight high-level data features, namely knowledge, in each round of the learning process. This framework allows devices to design their machine learning models independently and also reduces the communication overhead of training. We then theoretically analyze the convergence bound of the proposed framework under a non-convex loss function setting, revealing that large data volumes should be scheduled in the early rounds if the total data volume over the entire learning course is fixed. Inspired by this, we define a new objective function, i.e., the weighted scheduled data sample volume, to transform the implicit global loss minimization problem into a tractable one for device scheduling, bandwidth allocation, and power control. To deal with the unknown time-varying wireless channels, we transform the problem into a deterministic one with the assistance of the Lyapunov optimization framework. We then develop an efficient online device scheduling algorithm to achieve an energy-learning trade-off in the learning process. Experimental results on two typical datasets (i.e., MNIST and CIFAR-10) under highly heterogeneous local data distributions show that the proposed KFL reduces communication overhead by over 99% while achieving better learning performance than conventional model aggregation-based algorithms.
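To make the "weighted scheduled data sample volume" idea concrete, the following is a minimal sketch, not the paper's actual objective. The abstract does not give the weights, so the geometric `decay` weighting, the function name `weighted_scheduled_volume`, and the per-round sample counts are all assumptions chosen only to illustrate why scheduling more data in early rounds scores higher when the total volume is fixed.

```python
def weighted_scheduled_volume(volumes, decay=0.9):
    """Sum per-round scheduled sample counts, weighting round t by decay**t.

    The geometric weighting is an illustrative assumption; the abstract
    does not state the weights used in the paper.
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    return sum((decay ** t) * v for t, v in enumerate(volumes))


# Two hypothetical schedules with the same total number of samples.
front_loaded = [400, 300, 200, 100]        # more data in early rounds
back_loaded = list(reversed(front_loaded))  # more data in late rounds

front_score = weighted_scheduled_volume(front_loaded)
back_score = weighted_scheduled_volume(back_loaded)

# With weights that decrease over rounds, the front-loaded schedule
# obtains the larger objective value.
print(front_score > back_score)
```

Under any strictly decreasing weights, moving samples from a later round to an earlier one increases the objective, which is the qualitative behaviour the convergence analysis in the abstract motivates.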
