Investigating Human + Machine Complementarity for Recidivism Predictions

Aug 28, 2018

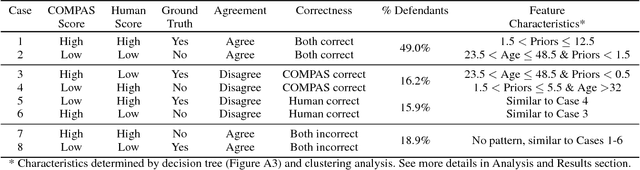

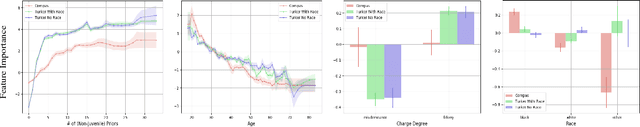

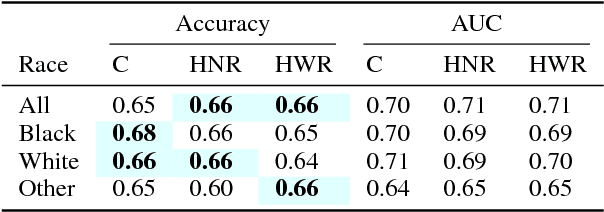

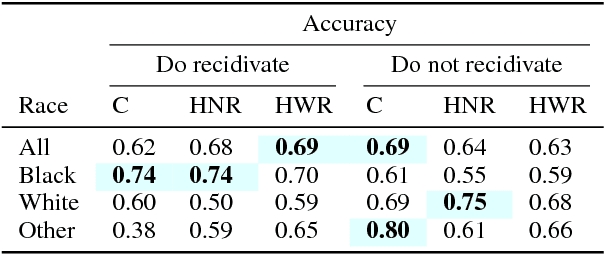

When might human input help (or not) when assessing risk in fairness-related domains? Dressel and Farid asked Mechanical Turk workers to evaluate a subset of individuals in the ProPublica COMPAS data set for risk of recidivism, and concluded that COMPAS predictions were no more accurate or fair than predictions made by humans. In this paper, we examine that claim more closely. We construct a Human Risk Score based on the predictions made by multiple Mechanical Turk workers on the same individual, study the agreement and disagreement between COMPAS and Human Scores on subgroups of individuals, and construct hybrid Human+AI models to predict recidivism. Our key finding is that, on this data set, human and COMPAS decision making differed, but not in ways that could be leveraged to significantly improve ground truth prediction. We present the results of our analyses and discuss how machine and human input may offer complementary strengths for addressing challenges in the fairness domain.
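To make the idea of a per-individual Human Risk Score concrete, here is a minimal sketch. It assumes the score is simply the fraction of workers who predicted recidivism for a given individual; the abstract does not specify the aggregation, so this may differ from the paper's construction. The function names, the `(individual_id, prediction)` input layout, and the 0.5 threshold are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from statistics import mean


def human_risk_scores(votes):
    """Aggregate binary worker predictions into one score per individual.

    votes: iterable of (individual_id, prediction) pairs, where prediction
    is 1 if the worker predicted recidivism and 0 otherwise.
    ASSUMPTION: the score is the mean vote; the paper may aggregate differently.
    """
    by_person = defaultdict(list)
    for person_id, prediction in votes:
        by_person[person_id].append(prediction)
    return {pid: mean(preds) for pid, preds in by_person.items()}


def disagrees_with_compas(human_score, compas_high_risk, threshold=0.5):
    """Return True when the thresholded human score and COMPAS label differ.

    ASSUMPTION: 0.5 is an illustrative cutoff, not one taken from the paper.
    """
    human_high_risk = human_score >= threshold
    return human_high_risk != compas_high_risk


# Toy data, invented for illustration only (not from the COMPAS data set).
votes = [("a", 1), ("a", 1), ("a", 0), ("b", 0), ("b", 0)]
scores = human_risk_scores(votes)
```

A score built this way is continuous rather than binary, which is what allows it to be compared against COMPAS within subgroups or used as a feature in a hybrid model.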
