Interpretable Deepfake Detection via Dynamic Prototypes

Jun 28, 2020

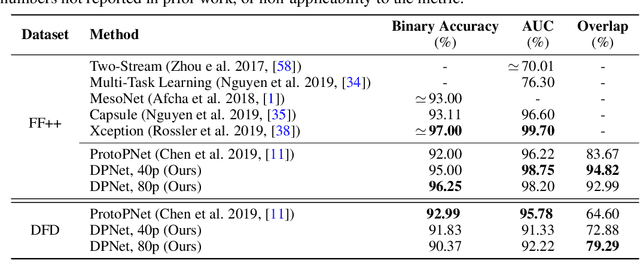

Deepfakes are a notorious application of deep learning research, producing massive amounts of video content on social media created with malicious intent. Detecting deepfake videos has therefore emerged as one of the most pressing challenges in AI research. Most state-of-the-art deepfake detection solutions are black-box models that process videos frame by frame at inference time and do not consider temporal dynamics, which are key for humans to detect and explain deepfake videos. To this end, we propose the Dynamic Prototype Network (DPNet), a simple, interpretable, yet effective solution that leverages dynamic representations (i.e., prototypes) to explain deepfake visual dynamics. Experimental results show that DPNet's explanations overlap better with the ground truth than those of state-of-the-art methods, while achieving comparable prediction performance. Furthermore, we formulate temporal logic specifications based on these prototypes to check the compliance of our model with desired temporal behaviors.
