Inflating 2D Convolution Weights for Efficient Generation of 3D Medical Images

Aug 08, 2022

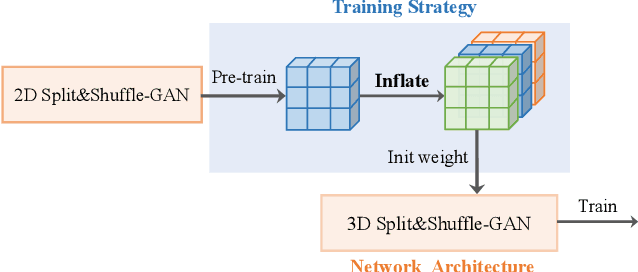

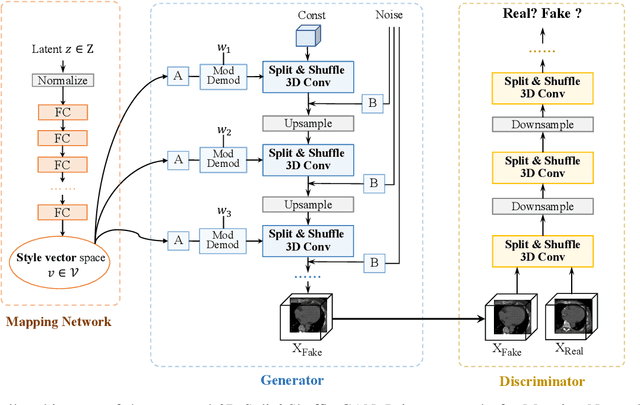

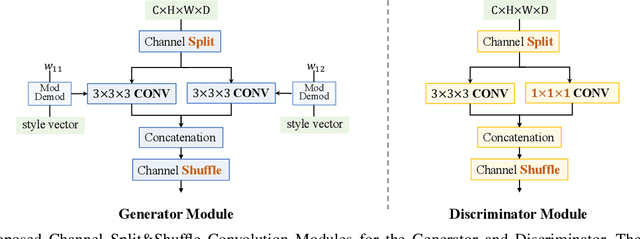

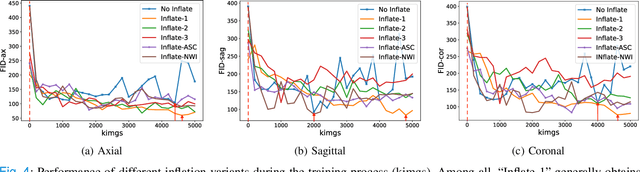

The generation of three-dimensional (3D) medical images has great application potential because it accounts for the 3D anatomical structure. Two problems, however, prevent effective training of a 3D medical generative model: (1) 3D medical images are very expensive to acquire and annotate, resulting in an insufficient number of training images, and (2) 3D convolution involves a large number of parameters. To address both problems, we propose a novel GAN model called 3D Split&Shuffle-GAN. To address the 3D data scarcity issue, we first pre-train a two-dimensional (2D) GAN model on abundant image slices and then inflate its 2D convolution weights to improve the initialization of the 3D GAN. We also propose novel 3D network architectures for both the generator and the discriminator that significantly reduce the number of parameters while maintaining the quality of image generation. We investigate a number of weight inflation strategies and parameter-efficient 3D architectures. Experiments on both heart (Stanford AIMI Coronary Calcium) and brain (Alzheimer's Disease Neuroimaging Initiative) datasets demonstrate that the proposed approach improves 3D image generation quality with significantly fewer parameters.
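As a rough illustration of what inflating 2D convolution weights can mean, the NumPy sketch below shows two generic strategies: repeating the 2D kernel along a new depth axis and rescaling it, and placing the 2D kernel in the central depth slice with zeros elsewhere. The abstract does not say which strategies the paper investigates, so these two are assumptions chosen for illustration. The function names `inflate_average` and `inflate_center`, the `(out_channels, in_channels, kh, kw)` weight layout, and the example shapes are hypothetical as well.

```python
import numpy as np


def inflate_average(w2d, depth):
    """Repeat a 2D kernel `depth` times along a new depth axis and divide by `depth`.

    w2d has shape (out_channels, in_channels, kh, kw); the result has shape
    (out_channels, in_channels, depth, kh, kw). Summing the result over the
    depth axis recovers the original 2D kernel.
    """
    w3d = np.repeat(w2d[:, :, None, :, :], depth, axis=2)
    return w3d / depth


def inflate_center(w2d, depth):
    """Copy the 2D kernel into the central depth slice and leave the rest zero."""
    out_ch, in_ch, kh, kw = w2d.shape
    w3d = np.zeros((out_ch, in_ch, depth, kh, kw), dtype=w2d.dtype)
    w3d[:, :, depth // 2] = w2d
    return w3d


# Hypothetical pre-trained 2D weights: 8 output channels, 4 input channels, 3x3 kernel.
rng = np.random.default_rng(0)
w2d = rng.standard_normal((8, 4, 3, 3))

w3d_avg = inflate_average(w2d, depth=3)
w3d_ctr = inflate_center(w2d, depth=3)

# A full 3D kernel of depth d holds d times as many weights as its 2D counterpart.
param_ratio = w3d_avg.size // w2d.size
```

Both variants preserve the 2D kernel when summed over the depth axis, so a 3D layer initialized this way responds to a volume of identical slices much as the 2D layer responds to a single slice. The `param_ratio` line also makes the parameter-count problem concrete: a full 3D kernel of depth 3 carries three times the weights of the 2D kernel it was inflated from.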
