Infinite Mixture Prototypes for Few-Shot Learning

Paper and Code

Feb 12, 2019

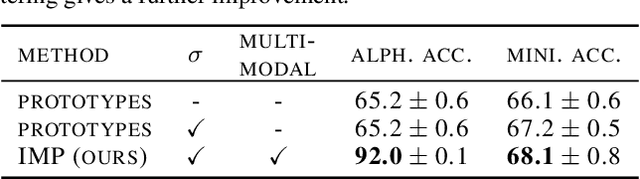

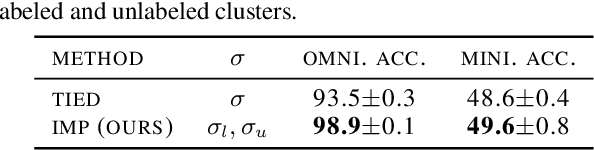

We propose infinite mixture prototypes to adaptively represent both simple and complex data distributions for few-shot learning. Our infinite mixture prototypes represent each class by a set of clusters, unlike existing prototypical methods that represent each class by a single cluster. By inferring the number of clusters, infinite mixture prototypes interpolate between nearest neighbor and prototypical representations, which improves accuracy and robustness in the few-shot regime. We show the importance of adaptive capacity for capturing complex data distributions such as alphabets, with 25% absolute accuracy improvements over prototypical networks, while still maintaining or improving accuracy on the standard Omniglot and mini-ImageNet benchmarks. In clustering labeled and unlabeled data by the same clustering rule, infinite mixture prototypes achieves state-of-the-art semi-supervised accuracy. As a further capability, we show that infinite mixture prototypes can perform purely unsupervised clustering, unlike existing prototypical methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge