Inference-to-complete: A High-performance and Programmable Data-plane Co-processor for Neural-network-driven Traffic Analysis


Nov 01, 2024

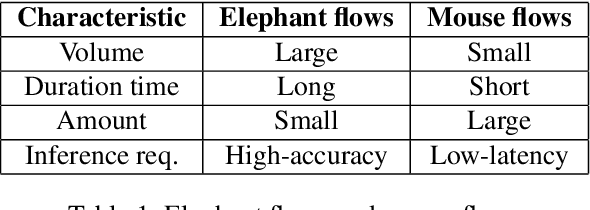

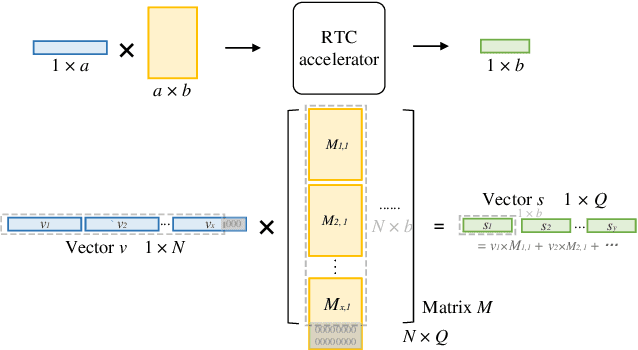

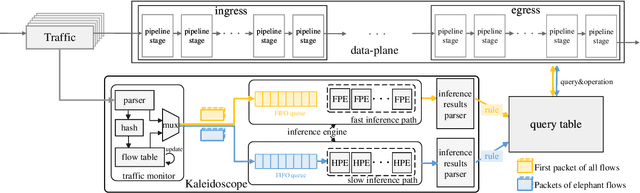

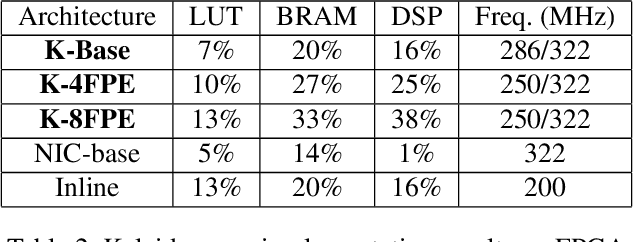

The neural-network-driven intelligent data plane (NN-driven IDP) is an emerging topic because of its excellent accuracy and high performance. We argue that an NN-driven IDP should satisfy three design goals: flexibility to support various NN models, low-latency and high-throughput inference, and data-plane unawareness, meaning that inference harms neither the performance nor the functionality of the data plane. Unfortunately, existing work either over-modifies NNs to fit the IDP or inserts inline pipelined accelerators into the data plane, and so fails to meet the flexibility and unawareness goals. In this paper, we propose Kaleidoscope, a flexible and high-performance co-processor located on the bypass of the data plane. To meet the three design goals, we present three key techniques. First, programmable run-to-completion accelerators are developed for flexible inference. Second, to further improve performance, we design a scalable inference engine that completes low-latency, low-cost inference for mouse flows and runs complex, high-accuracy NNs for elephant flows. Finally, raw-bytes-based NNs are introduced, which help to achieve unawareness. We prototype Kaleidoscope on both an FPGA and an ASIC library. In an evaluation on six NN models, Kaleidoscope reaches 256-352 ns inference latency and 100 Gbps throughput with negligible influence on the data plane. The NNs tested on board achieve state-of-the-art accuracy among NN-driven IDP approaches, demonstrating the significant impact of flexibility on traffic analysis accuracy.
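The split between mouse and elephant flows, together with raw-bytes input, can be illustrated with a minimal software sketch. This is not Kaleidoscope's hardware design: the abstract does not say how flows are classified or how raw bytes are encoded, so the packet-count threshold `MOUSE_PKT_LIMIT`, the input width `RAW_BYTES`, and the names `raw_byte_features` and `FlowDispatcher` are all hypothetical, chosen only to make the idea concrete.

```python
from collections import defaultdict

MOUSE_PKT_LIMIT = 8   # hypothetical: packets per flow before it counts as an elephant
RAW_BYTES = 64        # hypothetical: number of leading packet bytes fed to the NN


def raw_byte_features(packet: bytes, width: int = RAW_BYTES) -> list[float]:
    """Truncate or zero-pad the packet to `width` bytes and scale each to [0, 1]."""
    padded = packet[:width].ljust(width, b"\x00")
    return [b / 255.0 for b in padded]


class FlowDispatcher:
    """Route each packet to a light or heavy model based on its flow's packet count."""

    def __init__(self, light_model, heavy_model, limit: int = MOUSE_PKT_LIMIT):
        self.light_model = light_model   # cheap, low-latency model (mouse flows)
        self.heavy_model = heavy_model   # complex, higher-accuracy model (elephant flows)
        self.limit = limit
        self.pkt_count = defaultdict(int)

    def infer(self, flow_id, packet: bytes):
        self.pkt_count[flow_id] += 1
        features = raw_byte_features(packet)
        # Assumed policy: a flow is treated as a mouse until it exceeds `limit` packets.
        if self.pkt_count[flow_id] <= self.limit:
            return "light", self.light_model(features)
        return "heavy", self.heavy_model(features)
```

Because the features are taken directly from packet bytes, the sketch needs no parsed header fields or per-flow statistics from the data plane, which is one plausible reading of how raw-bytes-based NNs support the unawareness goal.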
