Incremental Reinforcement Learning --- a New Continuous Reinforcement Learning Frame Based on Stochastic Differential Equation methods


Aug 08, 2019

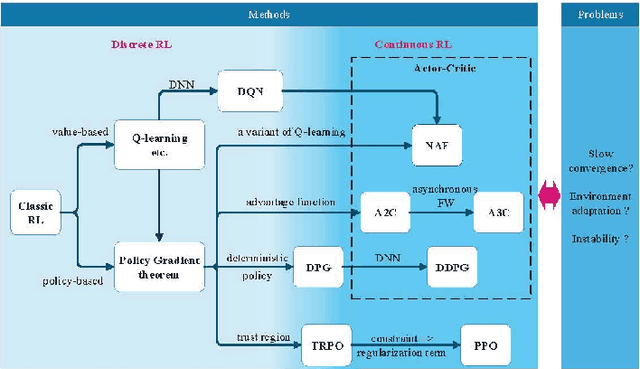

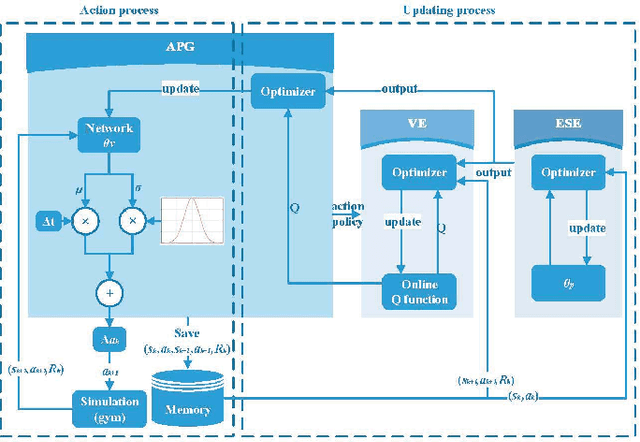

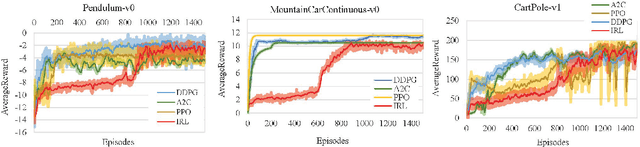

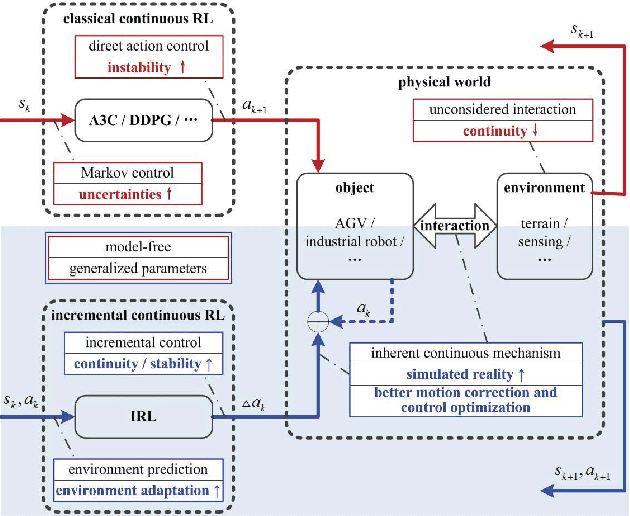

Continuous reinforcement learning methods such as DDPG and A3C are widely used in robot control and autonomous driving. However, both methods have theoretical weaknesses: DDPG cannot control noise in the control process, and A3C does not satisfy the continuity conditions under the Gaussian policy. To address these concerns, we propose a new continuous reinforcement learning method based on stochastic differential equations, which we call Incremental Reinforcement Learning (IRL). This method not only guarantees the continuity of actions within any time interval, but also controls the variance of actions during training. In addition, our method does not assume Markov control in the agents' action control and allows agents to predict scene changes when selecting actions. With our method, agents no longer passively adapt to the environment. Instead, they actively interact with the environment to maximize rewards.
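One way to read the continuity claim is through a small numerical sketch. The code below is not the paper's algorithm. It assumes a generic action process of the form da = f(a) dt + sigma dW, discretized with the Euler-Maruyama scheme, and compares it with actions drawn independently from a Gaussian at every step. The drift `-a`, the value of `sigma`, and all function names are hypothetical choices made only for illustration.

```python
import math
import random


def sde_action_path(a0, drift, sigma, dt, steps, rng):
    """Euler-Maruyama discretization of da = drift(a) dt + sigma dW."""
    path = [a0]
    for _ in range(steps):
        a = path[-1]
        dw = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment with variance dt
        path.append(a + drift(a) * dt + sigma * dw)
    return path


def iid_gaussian_actions(mean, sigma, steps, rng):
    """Baseline: each action is drawn independently from N(mean, sigma^2)."""
    return [rng.gauss(mean, sigma) for _ in range(steps + 1)]


def mean_abs_jump(path):
    """Average absolute change between consecutive actions."""
    return sum(abs(b - a) for a, b in zip(path, path[1:])) / (len(path) - 1)


rng = random.Random(0)
drift = lambda a: -a  # hypothetical mean-reverting drift, for illustration only
horizon, sigma = 1.0, 0.5

for steps in (10, 100, 1000):
    dt = horizon / steps
    sde_jump = mean_abs_jump(sde_action_path(0.0, drift, sigma, dt, steps, rng))
    iid_jump = mean_abs_jump(iid_gaussian_actions(0.0, sigma, steps, rng))
    print(f"dt={dt:.3f}  SDE jump={sde_jump:.4f}  i.i.d. jump={iid_jump:.4f}")
```

Under these assumptions, the step-to-step change of the incremental path shrinks roughly like the square root of `dt` as the time grid is refined, and its size is set by `sigma`. The independently sampled actions keep jumping by about the same amount regardless of `dt`.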
