In the Wild: From ML Models to Pragmatic ML Systems


Jul 06, 2020

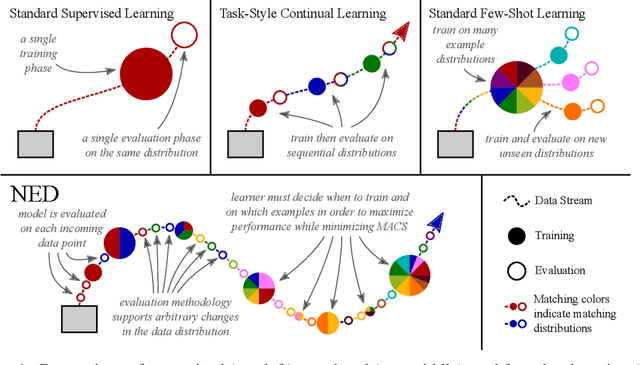

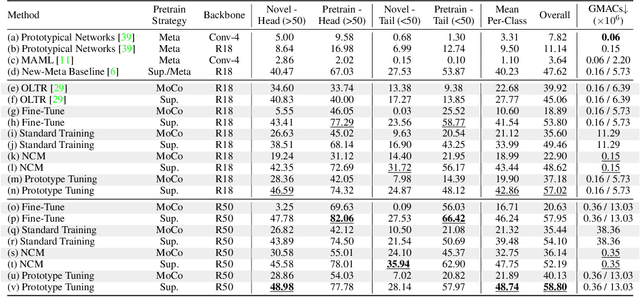

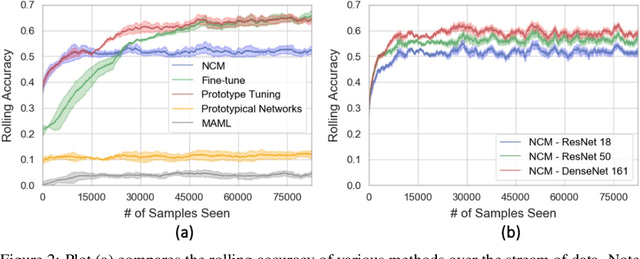

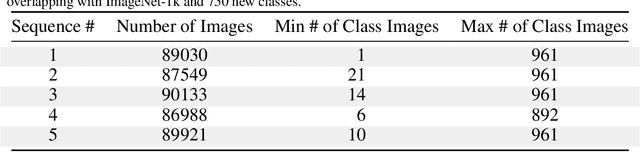

Enabling robust intelligence in the wild requires learning systems that offer uninterrupted inference while supporting sustained training with varying amounts of data and supervision. Such a pragmatic ML system should be able to cope with the openness and flexibility inherent in the real world. The machine learning community has organically broken this challenging task down into manageable subtasks such as supervised, few-shot, continual, and self-supervised learning. Each subtask poses distinctive challenges and has led to its own set of methods. Despite this remarkable progress, the restricted and isolated nature of these tasks has produced methods that excel in one setting but struggle to extend beyond it.

To foster the research required to extend ML models to ML systems, we introduce a unified learning and evaluation framework: iN thE wilD (NED). NED is designed to be a more general paradigm. It loosens the restrictive design decisions of past settings (e.g., the closed-world assumption) and imposes fewer restrictions on learning algorithms (e.g., predefined train and test phases). Learners can infer the experimental parameters themselves by optimizing for both accuracy and compute. In NED, a learner receives a stream of data and makes sequential predictions. Along the way it chooses how to update itself, how to adapt to data from novel categories, and how to deal with changing data distributions, all while optimizing the total amount of compute.

We evaluate a large set of existing methods from several subfields using NED and present surprising yet revealing findings about modern techniques. For instance, prominent few-shot methods break down in NED, with accuracy drops of over 40% relative to simple baselines. Likewise, the state-of-the-art self-supervised method Momentum Contrast obtains 35% lower accuracy than supervised pretraining on novel classes. We also show that a simple baseline outperforms existing methods on NED.
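To make the streaming protocol concrete, here is a minimal sketch of a predict-then-update loop in the spirit of NED. It is not the authors' framework or their baseline. `NearestClassMean`, `run_stream`, the `update_every` knob, and the "one unit per update" compute proxy are all hypothetical names and simplifications chosen for illustration. The loop shows three properties from the abstract. Every example is predicted before its label is used, so there is no separate train/test phase. Unseen labels are handled by adding a new class on the fly, so there is no closed-world assumption. Skipping updates trades accuracy for compute.

```python
import numpy as np


class NearestClassMean:
    """Hypothetical streaming learner: one running-mean prototype per label.

    A label never seen before simply gets a new prototype, so the label set
    is not fixed in advance (no closed-world assumption).
    """

    def __init__(self, dim):
        self.dim = dim
        self.sums = {}    # label -> sum of feature vectors seen for that label
        self.counts = {}  # label -> number of vectors seen for that label

    def predict(self, x):
        if not self.sums:
            return None  # nothing learned yet, so no prediction is possible
        labels = list(self.sums)
        protos = np.stack([self.sums[c] / self.counts[c] for c in labels])
        dists = np.linalg.norm(protos - x, axis=1)  # Euclidean distance to each prototype
        return labels[int(np.argmin(dists))]

    def update(self, x, y):
        if y not in self.sums:  # novel category: start a new prototype
            self.sums[y] = np.zeros(self.dim)
            self.counts[y] = 0
        self.sums[y] += x
        self.counts[y] += 1


def run_stream(learner, stream, update_every=1):
    """Predict-then-update loop over a labeled stream.

    Each example is scored before its label is revealed to the learner.
    `update_every` (hypothetical) skips some updates to save compute.
    Returns (accuracy, number_of_updates).
    """
    correct = 0
    total = 0
    updates = 0
    for t, (x, y) in enumerate(stream):
        if learner.predict(x) == y:
            correct += 1
        total += 1
        if t % update_every == 0:
            learner.update(x, y)
            updates += 1  # crude compute proxy: one unit per update
    return correct / max(total, 1), updates


# Toy two-class stream; class "b" first appears mid-stream.
stream = [
    (np.array([0.0, 0.0]), "a"),
    (np.array([10.0, 10.0]), "b"),
    (np.array([0.1, 0.0]), "a"),
    (np.array([9.9, 10.0]), "b"),
]
print(run_stream(NearestClassMean(dim=2), stream))                  # (0.5, 4)
print(run_stream(NearestClassMean(dim=2), stream, update_every=2))  # (0.25, 2)
```

Halving the number of updates halves the compute proxy but also halves accuracy on this toy stream. Class "b" is never learned because both of its examples fall on skipped steps. This is the kind of accuracy/compute trade-off a NED learner is expected to manage on its own.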
