Improving Positive Unlabeled Learning: Practical AUL Estimation and New Training Method for Extremely Imbalanced Data Sets


Apr 21, 2020

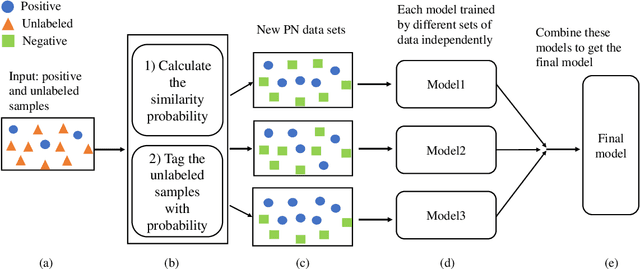

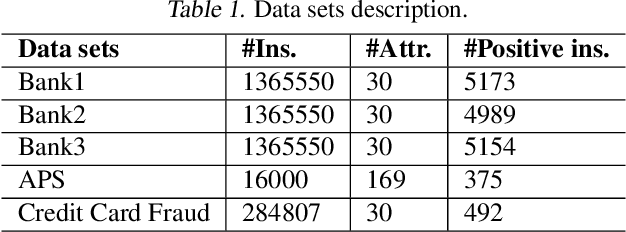

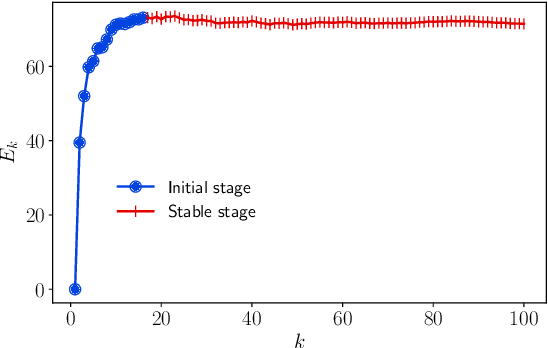

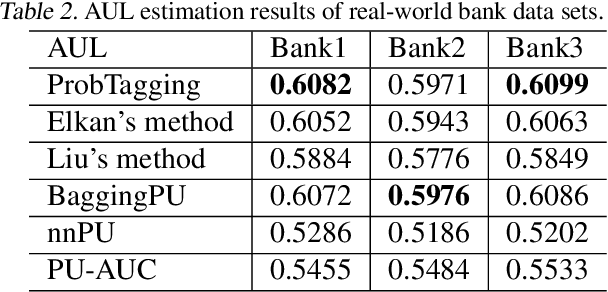

Positive Unlabeled (PU) learning is widely used in applications where a binary classifier must be trained on data sets consisting of only positive and unlabeled samples. In this paper, we improve PU learning over the state of the art in two respects. First, existing model evaluation methods for PU learning require the ground truth of the unlabeled samples, which is unlikely to be available in practice. To relax this restriction, we propose an asymptotically unbiased, practical AUL (area under the lift) estimation method, which uses raw PU data without prior knowledge of the unlabeled samples. Second, we propose ProbTagging, a new training method for extremely imbalanced data sets, where the number of unlabeled samples is hundreds or thousands of times that of the positive samples. ProbTagging introduces probability into the aggregation method. Specifically, each unlabeled sample is tagged positive or negative with a probability calculated from its similarity to its positive neighbors. Multiple data sets are generated in this way to train different models, which are then combined into an ensemble model. On three industrial and two artificial PU data sets, the experimental results show that ProbTagging can increase the AUC by up to 10% compared with state-of-the-art work.
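The tagging-and-ensembling idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the similarity measure, the number of neighbors, the base learner, or how the models are combined. Here it is assumed, purely for illustration, that similarity is the mean of `exp(-distance)` over the `k` nearest positive samples, that the base learner is a simple centroid-based scorer, and that the ensemble averages the individual scores. All function names (`tag_probability`, `make_tagged_datasets`, `fit_centroid_model`, `fit_ensemble`) are hypothetical.

```python
import math
import random


def tag_probability(x, positives, k=3):
    """Assumed similarity: mean of exp(-Euclidean distance) over the
    k nearest positive samples. The paper's actual formula may differ."""
    dists = sorted(math.dist(x, p) for p in positives)[:k]
    return sum(math.exp(-d) for d in dists) / len(dists)


def make_tagged_datasets(positives, unlabeled, n_sets=5, k=3, seed=0):
    """Generate n_sets labeled data sets by randomly tagging each unlabeled
    sample as positive (1) with its tag probability, else negative (0)."""
    rng = random.Random(seed)
    probs = [tag_probability(u, positives, k) for u in unlabeled]
    datasets = []
    for _ in range(n_sets):
        tags = [1 if rng.random() < p else 0 for p in probs]
        X = list(positives) + list(unlabeled)
        y = [1] * len(positives) + tags
        datasets.append((X, y))
    return datasets


def fit_centroid_model(X, y):
    """Stand-in base learner (an assumption): score a point by its relative
    distance to the negative and positive class centroids."""
    def centroid(rows):
        return [sum(col) / len(rows) for col in zip(*rows)]

    pos = centroid([x for x, t in zip(X, y) if t == 1])
    neg_rows = [x for x, t in zip(X, y) if t == 0]
    neg = centroid(neg_rows) if neg_rows else None

    def score(x):
        if neg is None:  # no negatives were tagged in this data set
            return 1.0
        d_pos, d_neg = math.dist(x, pos), math.dist(x, neg)
        total = d_pos + d_neg
        return d_neg / total if total > 0 else 0.5

    return score


def fit_ensemble(positives, unlabeled, n_sets=5, k=3, seed=0):
    """Train one model per tagged data set and average their scores."""
    models = [
        fit_centroid_model(X, y)
        for X, y in make_tagged_datasets(positives, unlabeled, n_sets, k, seed)
    ]

    def predict(x):
        return sum(m(x) for m in models) / len(models)

    return predict
```

Calling `fit_ensemble(positives, unlabeled)` with lists of numeric tuples returns a scoring function whose output is higher for points that resemble the positives. In a realistic setting the centroid scorer would be replaced by whatever classifier suits the data; the random tagging step is the part that mirrors the description above.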
