Improving Gender Translation Accuracy with Filtered Self-Training

Paper and Code

Apr 15, 2021

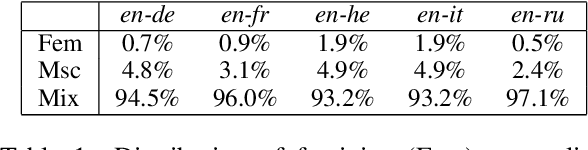

Targeted evaluations have found that machine translation systems often output incorrect gender, even when the gender is clear from context. Furthermore, these incorrectly gendered translations have the potential to reflect or amplify social biases. We propose a gender-filtered self-training technique to improve gender translation accuracy on unambiguously gendered inputs. This approach uses a source monolingual corpus and an initial model to generate gender-specific pseudo-parallel corpora, which are then added to the training data. We filter the gender-specific corpora on the source and target sides to ensure that sentence pairs contain and correctly translate the specified gender. We evaluate our approach on translation from English into five languages, finding that our models improve gender translation accuracy without any cost to generic translation quality. In addition, we show the viability of our approach in several settings, including re-training from scratch, fine-tuning, controlling the balance of the training data, forward translation, and back-translation.
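The forward-translation variant of the filtering step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the word lists, the `sentence_gender` and `build_gendered_corpora` helpers, and the `translate` callable (standing in for the initial model) are all hypothetical, and the simple lexicon lookup is an assumption made here because the abstract does not say how gender is detected on either side.

```python
import re
from typing import Callable, Dict, List, Optional, Set, Tuple

Lexicon = Dict[str, Set[str]]

# Hypothetical toy word lists (assumptions for illustration only).
SRC_LEXICON: Lexicon = {
    "masculine": {"he", "him", "his"},
    "feminine": {"she", "her", "hers"},
}
TGT_LEXICON: Lexicon = {
    "masculine": {"él", "enfermero", "médico"},
    "feminine": {"ella", "enfermera", "médica"},
}


def tokenize(text: str) -> List[str]:
    """Lowercase and split into word tokens."""
    return re.findall(r"\w+", text.lower())


def sentence_gender(text: str, lexicon: Lexicon) -> Optional[str]:
    """Return the gender if words of exactly one gender occur, else None."""
    tokens = set(tokenize(text))
    found = [gender for gender, words in lexicon.items() if tokens & words]
    return found[0] if len(found) == 1 else None


def build_gendered_corpora(
    monolingual: List[str],
    translate: Callable[[str], str],  # stand-in for the initial model
    src_lexicon: Lexicon = SRC_LEXICON,
    tgt_lexicon: Lexicon = TGT_LEXICON,
) -> Dict[str, List[Tuple[str, str]]]:
    """Build gender-specific pseudo-parallel corpora with two filters."""
    corpora: Dict[str, List[Tuple[str, str]]] = {g: [] for g in src_lexicon}
    for source in monolingual:
        # Source-side filter: keep only unambiguously gendered sentences.
        gender = sentence_gender(source, src_lexicon)
        if gender is None:
            continue
        hypothesis = translate(source)
        # Target-side filter: keep the pair only if the translation
        # carries the same gender as the source.
        if sentence_gender(hypothesis, tgt_lexicon) == gender:
            corpora[gender].append((source, hypothesis))
    return corpora
```

The resulting corpora would then be added to the original training data, either for re-training from scratch or for fine-tuning. Because each gender gets its own list, the balance between them can be adjusted before mixing.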
