Improving Adversarial Robustness via Attention and Adversarial Logit Pairing

Aug 23, 2019

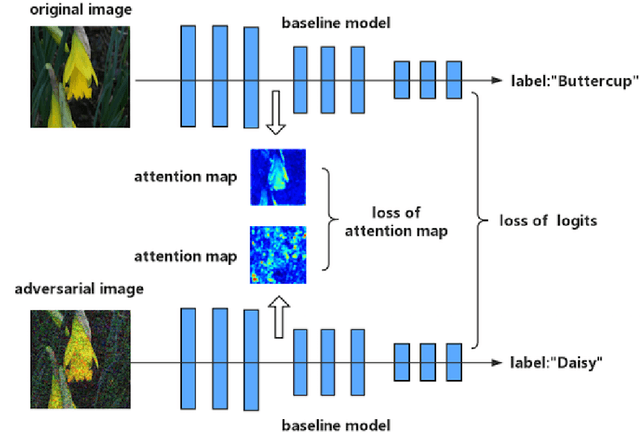

Though deep neural networks have achieved state-of-the-art performance in visual classification, recent studies have shown that they are vulnerable to adversarial examples. In this paper, we develop improved techniques for defending against adversarial examples. First, we introduce an enhanced defense using a technique we call **Attention and Adversarial Logit Pairing (AT+ALP)**, a method that encourages both the attention maps and the logits of paired examples to be similar. When applied to clean examples and their adversarial counterparts, **AT+ALP** improves accuracy on adversarial examples over adversarial training. Next, we show that **AT+ALP** effectively increases the average activations of adversarial examples in the key areas, and we demonstrate that it focuses on more discriminative features, which improves the robustness of the model. Finally, we conduct extensive experiments on a wide range of datasets, and the results show that **AT+ALP** achieves **state-of-the-art** defense. For example, on the **17 Flower Category Database**, under strong 200-iteration **PGD** gray-box and black-box attacks, where prior art reaches 34% and 39% accuracy, our method achieves **50%** and **51%**. Compared with previous work, ours is evaluated under a highly challenging PGD attack: the maximum $L_\infty$ perturbation is $\epsilon \in \{0.25, 0.5\}$, with 10 to 200 attack iterations. To our knowledge, such a strong attack has not previously been explored on a wide range of datasets.
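The pairing idea can be sketched as a loss function. What follows is a minimal pure-Python sketch, not the authors' implementation. It makes several assumptions. Attention maps are taken to be activation-based: the channel-wise sum of squared activations, L2-normalized, in the style of Zagoruyko and Komodakis. Attention pairing is assumed to be the L2 distance between the two maps. Logit pairing is assumed to be a mean-squared difference, as in adversarial logit pairing. The cross-entropy term is assumed to be averaged over the clean and adversarial inputs. The weights `lambda_at` and `lambda_alp` and all function names are hypothetical.

```python
import math
from typing import List, Sequence

# A feature map is represented as channels x flattened spatial positions.
FeatureMap = Sequence[Sequence[float]]


def attention_map(features: FeatureMap, p: int = 2) -> List[float]:
    """Spatial attention: sum over channels of |activation|^p, then L2-normalized.

    Assumption: activation-based attention; the paper's exact definition may differ.
    """
    n_positions = len(features[0])
    att = [sum(abs(ch[i]) ** p for ch in features) for i in range(n_positions)]
    norm = math.sqrt(sum(a * a for a in att)) or 1.0  # avoid division by zero
    return [a / norm for a in att]


def attention_pairing_loss(clean: FeatureMap, adv: FeatureMap) -> float:
    """L2 distance between the normalized attention maps of a clean/adversarial pair."""
    a, b = attention_map(clean), attention_map(adv)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def logit_pairing_loss(clean_logits: Sequence[float], adv_logits: Sequence[float]) -> float:
    """Mean squared difference between clean and adversarial logits (ALP-style)."""
    return sum((x - y) ** 2 for x, y in zip(clean_logits, adv_logits)) / len(clean_logits)


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one example, using a stabilized log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def at_alp_loss(clean_feats: FeatureMap, adv_feats: FeatureMap,
                clean_logits: Sequence[float], adv_logits: Sequence[float],
                label: int, lambda_at: float = 1.0, lambda_alp: float = 0.5) -> float:
    """Hypothetical combined objective: cross-entropy on both inputs plus
    attention-pairing and logit-pairing penalties (weights are illustrative)."""
    ce = 0.5 * (cross_entropy(clean_logits, label) + cross_entropy(adv_logits, label))
    return (ce
            + lambda_at * attention_pairing_loss(clean_feats, adv_feats)
            + lambda_alp * logit_pairing_loss(clean_logits, adv_logits))


# Usage: a 2-channel, 3-position feature map for a clean input and its adversarial copy.
clean_feats = [[0.2, 0.9, 0.1], [0.0, 0.5, 0.3]]
adv_feats = [[0.6, 0.4, 0.1], [0.2, 0.3, 0.3]]
print(at_alp_loss(clean_feats, adv_feats, [2.0, 0.5], [1.2, 1.0], label=0))
```

When the clean and adversarial inputs produce identical features and logits, both pairing terms vanish and the objective reduces to ordinary cross-entropy. The penalties only act when the adversarial example shifts the attention map or the logits away from the clean ones.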
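The evaluation relies on the $L_\infty$ PGD attack. A minimal sketch of the PGD loop follows. It assumes a caller-supplied gradient function `grad_fn` (hypothetical) and inputs scaled to $[-1, 1]$. That range is an assumption, and what $\epsilon = 0.25$ means in pixel terms depends on it. Each iteration takes a signed-gradient ascent step of size `alpha`, then projects back into the $\epsilon$-ball around the original input and into the valid input range. In gray-box and black-box settings, `grad_fn` would typically come from a substitute model rather than the defended one. A toy logistic-regression model with an analytic gradient stands in for a real network.

```python
import math
from typing import Callable, List, Sequence

GradFn = Callable[[Sequence[float]], List[float]]


def sign(v: float) -> float:
    """Return -1.0, 0.0 or 1.0 according to the sign of v."""
    return float((v > 0) - (v < 0))


def pgd_linf(x0: Sequence[float], grad_fn: GradFn, eps: float, alpha: float,
             steps: int, lo: float = -1.0, hi: float = 1.0) -> List[float]:
    """Projected gradient ascent on the loss inside an L-infinity ball of radius eps."""
    x = list(x0)
    for _ in range(steps):
        g = grad_fn(x)
        # Ascend the loss with a signed-gradient step of size alpha.
        x = [xi + alpha * sign(gi) for xi, gi in zip(x, g)]
        # Project back into the L-infinity ball of radius eps around x0.
        x = [min(max(xi, x0i - eps), x0i + eps) for xi, x0i in zip(x, x0)]
        # Keep the result inside the (assumed) valid input range [lo, hi].
        x = [min(max(xi, lo), hi) for xi in x]
    return x


# Toy model (hypothetical): logistic regression with label Y in {-1, +1}.
W = [1.0, -2.0, 0.5]
Y = 1


def logistic_loss(x: Sequence[float]) -> float:
    margin = Y * sum(wi * xi for wi, xi in zip(W, x))
    return math.log1p(math.exp(-margin))


def logistic_grad(x: Sequence[float]) -> List[float]:
    # d/dx log(1 + exp(-y * w.x)) = -y * sigmoid(-y * w.x) * w
    margin = Y * sum(wi * xi for wi, xi in zip(W, x))
    s = 1.0 / (1.0 + math.exp(margin))  # sigmoid(-margin)
    return [-Y * s * wi for wi in W]


x0 = [0.0, 0.0, 0.0]
x_adv = pgd_linf(x0, logistic_grad, eps=0.25, alpha=0.1, steps=10)
print(x_adv)  # [-0.25, 0.25, -0.25]
print(logistic_loss(x0), logistic_loss(x_adv))
```

Because the step size is smaller than $\epsilon$, the perturbation grows over several iterations until the projection pins each coordinate at the edge of the ball. Running more iterations (the abstract uses up to 200) lets the attack explore the ball more thoroughly on non-linear models.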