Importance Weighted Adversarial Variational Autoencoders for Spike Inference from Calcium Imaging Data

Paper and Code

Jun 07, 2019

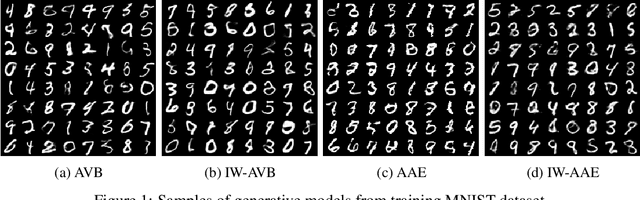

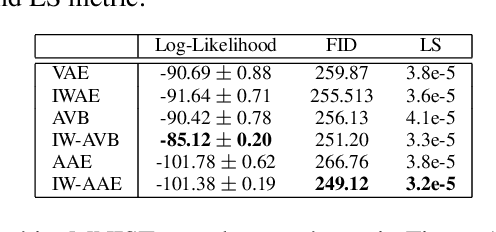

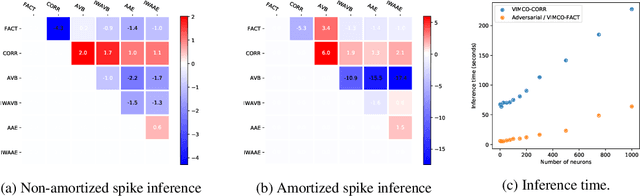

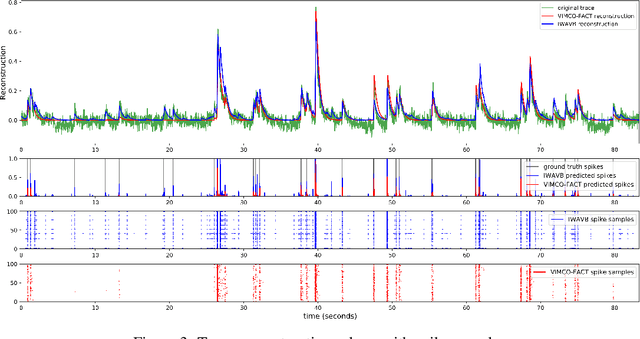

The Importance Weighted Auto Encoder (IWAE) objective has been shown to improve the training of generative models over the standard Variational Auto Encoder (VAE) objective. Here, we derive importance weighted extensions to AVB and AAE. These latent variable models use implicitly defined inference networks whose approximate posterior density q_\phi(z|x) cannot be directly evaluated, an essential ingredient for importance weighting. We show improved training and inference in latent variable models with our adversarially trained importance weighting method, and derive new theoretical connections between adversarial generative model training criteria and marginal likelihood based methods. We apply these methods to the important problem of inferring spiking neural activity from calcium imaging data, a challenging posterior inference problem in neuroscience, and show that posterior samples from the adversarial methods outperform factorized posteriors used in VAEs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge