Imitation Learning with Limited Actions via Diffusion Planners and Deep Koopman Controllers

Paper and Code

Oct 10, 2024

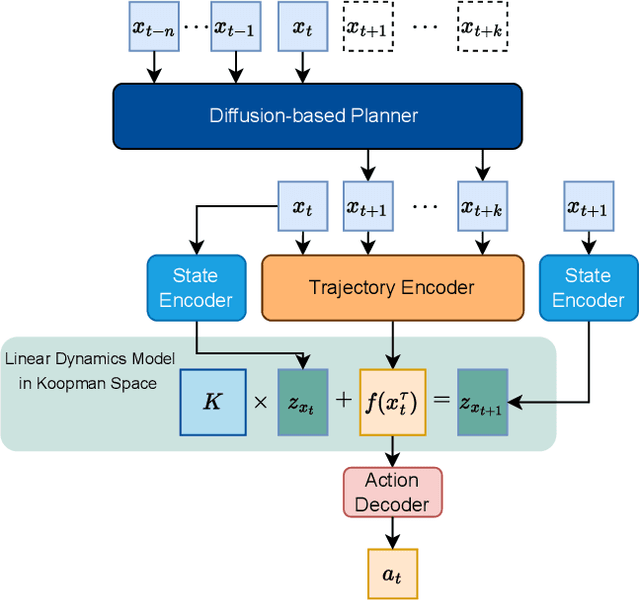

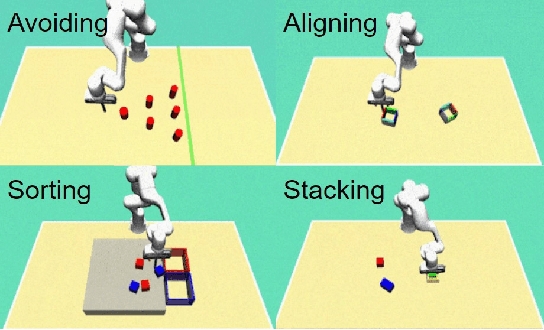

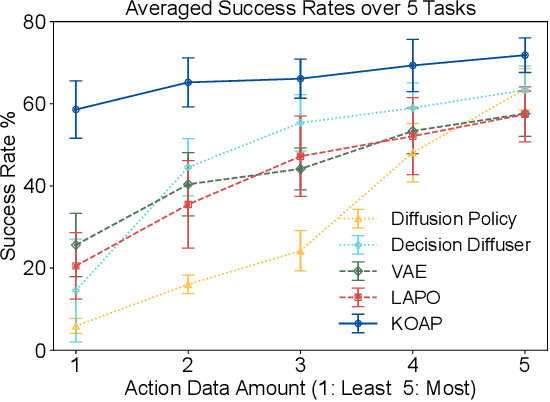

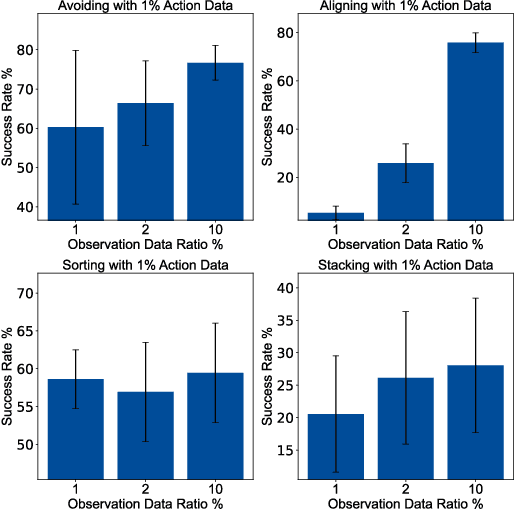

Recent advances in diffusion-based robot policies have demonstrated significant potential in imitating multi-modal behaviors. However, these approaches typically require large quantities of demonstration data paired with corresponding robot action labels, creating a substantial data collection burden. In this work, we propose a plan-then-control framework aimed at improving the action-data efficiency of inverse dynamics controllers by leveraging observational demonstration data. Specifically, we adopt a Deep Koopman Operator framework to model the dynamical system and utilize observation-only trajectories to learn a latent action representation. This latent representation can then be effectively mapped to real high-dimensional continuous actions using a linear action decoder, requiring minimal action-labeled data. Through experiments on simulated robot manipulation tasks and a real robot experiment with multi-modal expert demonstrations, we demonstrate that our approach significantly enhances action-data efficiency and achieves high task success rates with limited action data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge