Image Harmonization by Matching Regional References

Paper and Code

Apr 10, 2022

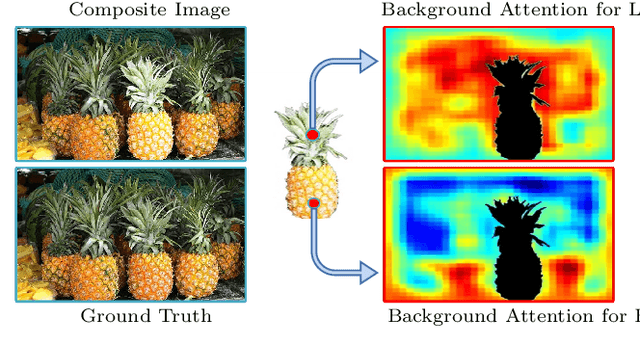

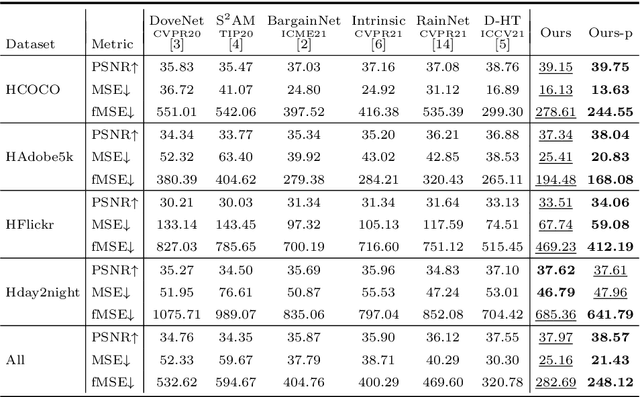

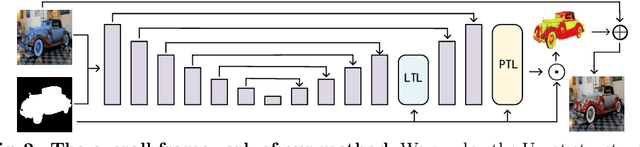

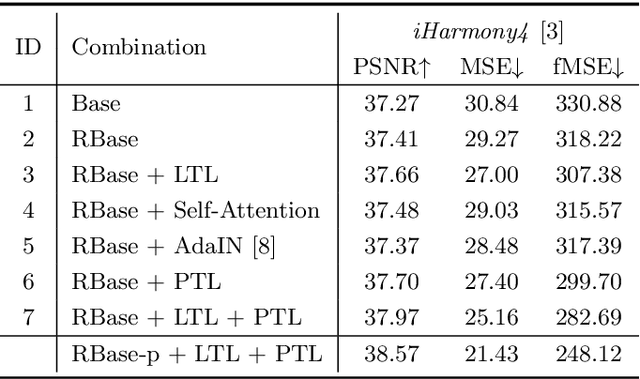

To achieve visual consistency in composite images, recent image harmonization methods typically summarize the appearance pattern of the global background and apply it to the entire foreground, regardless of location. However, in a real image, the appearance (illumination, color temperature, saturation, hue, texture, etc.) of different regions can vary significantly, so previous methods, which transfer appearance globally, are suboptimal. To address this issue, we first match the contents between the foreground and background and then adaptively adjust every foreground location according to the appearance of its content-related background regions. Further, we design a residual reconstruction strategy that uses the predicted residual to adjust the appearance and the composite foreground to preserve the image details. Extensive experiments demonstrate the effectiveness of our method. The source code will be made publicly available.
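The two ideas in the abstract, matching each foreground location to content-related background regions and reconstructing the output as composite plus residual, can be illustrated with a small NumPy sketch. This is not the paper's network: the function name `harmonize_sketch`, the `content` feature input, the softmax `temperature`, and the use of attention-weighted mean color as the "appearance" are all assumptions made for illustration. The dense similarity matrix also limits it to small images.

```python
import numpy as np

def _softmax_rows(scores):
    """Row-wise softmax with max subtraction for numerical stability."""
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)

def harmonize_sketch(composite, mask, content, temperature=0.1):
    """Toy regional-reference harmonization (illustrative assumption only).

    composite: (H, W, 3) float image with the pasted foreground.
    mask:      (H, W) boolean array, True on foreground pixels.
    content:   (H, W, C) per-pixel content features (hypothetical input,
               e.g. from any pretrained encoder).
    """
    fg = mask.astype(bool)
    bg = ~fg

    # Normalize features so dot products are cosine similarities.
    norms = np.linalg.norm(content, axis=-1, keepdims=True)
    feats = content / (norms + 1e-8)
    fg_feats, bg_feats = feats[fg], feats[bg]      # (Nf, C), (Nb, C)

    # Step 1: match every foreground location to background locations
    # with similar content.
    fg_to_bg = _softmax_rows(fg_feats @ bg_feats.T / temperature)

    # Step 2: reference appearance = attention-weighted background color.
    reference = fg_to_bg @ composite[bg]           # (Nf, 3)

    # Current foreground appearance, smoothed over similar foreground
    # content so that fine detail is not part of the estimate.
    fg_to_fg = _softmax_rows(fg_feats @ fg_feats.T / temperature)
    current = fg_to_fg @ composite[fg]             # (Nf, 3)

    # Step 3: residual reconstruction. The residual shifts appearance,
    # while the composite foreground keeps the image details.
    residual = reference - current
    output = composite.copy()
    output[fg] = composite[fg] + residual
    return output
```

In this sketch, a foreground pixel pasted into a dark region is pulled toward the dark background colors that share its content, while one pasted into a bright region is pulled toward the bright ones, which is the location-dependent behavior a single global transfer cannot provide.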
