Image-based Natural Language Understanding Using 2D Convolutional Neural Networks

Nov 06, 2018

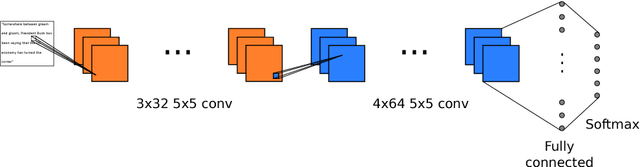

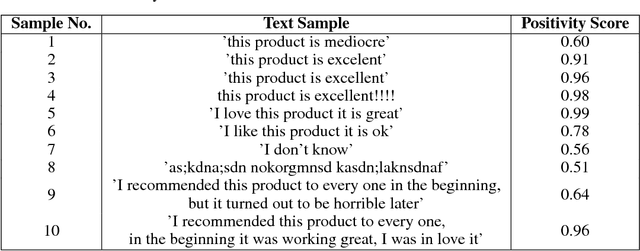

We propose a new approach to natural language understanding in which we treat the input text as an image and apply 2D Convolutional Neural Networks to learn the local and global semantics of sentences from variations in the visual patterns of words. Our approach demonstrates that semantically meaningful features can be extracted from images of text without optical character recognition or sequential processing pipelines, both of which traditional Natural Language Understanding algorithms require. To validate our approach, we present results for two applications: text classification and dialog modeling. Using a 2D Convolutional Neural Network, we outperformed state-of-the-art accuracy on text classification for non-Latin alphabets and achieved promising results on eight text classification datasets. Furthermore, our approach outperformed memory networks on out-of-vocabulary entities from task 4 of the bAbI dialog dataset.
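The core idea, treating text as pixels and convolving over them, can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the 3x5 bitmap font `GLYPHS`, the helper names `render_text`, `conv2d_valid`, and `global_max_pool`, and the hand-set kernel are hypothetical and are not taken from the paper, whose network learns its filters from data.

```python
# Toy 3x5 bitmap glyphs (hypothetical font, for illustration only).
GLYPHS = {
    "a": ["010", "101", "111", "101", "101"],
    "b": ["110", "101", "110", "101", "110"],
    "c": ["011", "100", "100", "100", "011"],
    " ": ["000", "000", "000", "000", "000"],
}

GLYPH_HEIGHT = 5


def render_text(text):
    """Rasterize text into a 2D list of 0/1 pixels, with one blank column between glyphs."""
    rows = [[] for _ in range(GLYPH_HEIGHT)]
    for i, ch in enumerate(text):
        glyph = GLYPHS[ch]
        for r in range(GLYPH_HEIGHT):
            if i > 0:
                rows[r].append(0)  # spacer column between characters
            rows[r].extend(int(p) for p in glyph[r])
    return rows


def conv2d_valid(image, kernel):
    """Plain 2D cross-correlation with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def global_max_pool(feature_map):
    """Collapse a feature map to its single strongest response."""
    return max(max(row) for row in feature_map)


# No OCR or tokenization: the filter sees only pixels.
image = render_text("abc")
vertical_stroke = [[1], [1], [1]]  # hand-set 3x1 kernel; a trained CNN would learn its filters
features = conv2d_valid(image, vertical_stroke)
score = global_max_pool(features)
```

Here the filter responds to vertical strokes wherever they occur in the rendered word, which is the sense in which visual patterns, rather than character codes, carry the signal. A real model would stack many learned filters and feed the pooled features to a classifier.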
