HyperNST: Hyper-Networks for Neural Style Transfer

Aug 09, 2022

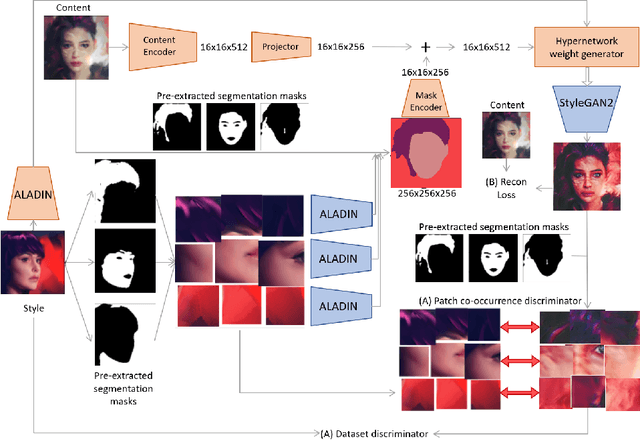

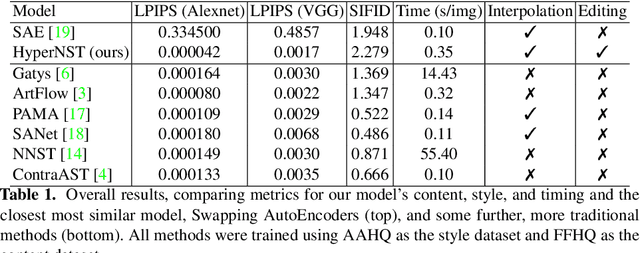

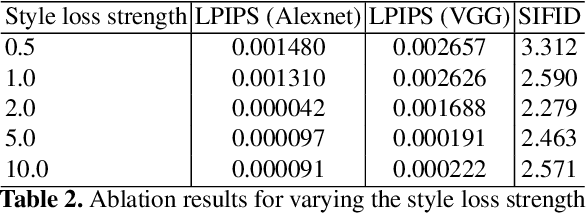

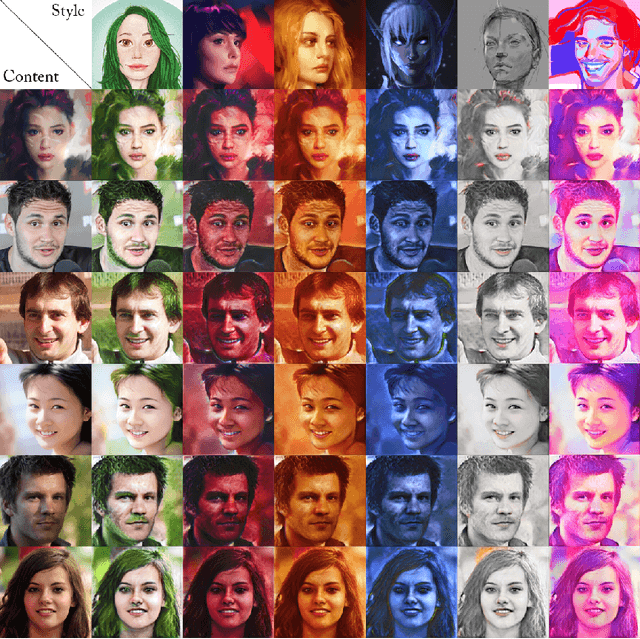

We present HyperNST, a neural style transfer (NST) technique for the artistic stylization of images, based on hyper-networks and the StyleGAN2 architecture. Our contribution is a novel method for inducing style transfer parameterized by a metric space pre-trained for style-based visual search (SBVS). We show for the first time that such a space may be used to drive NST, enabling the application and interpolation of styles from an SBVS system. The technical contribution is a hyper-network that predicts weight updates to a StyleGAN2 pre-trained over a diverse gamut of artistic content (portraits), tailoring the style parameterization on a per-region basis using a semantic map of the facial regions. We show that HyperNST exceeds the state of the art in content preservation for our stylized content while retaining good style transfer performance.
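To make the core idea concrete, the following is a minimal toy sketch, not the authors' implementation. It assumes a single frozen generator layer (a 1x1 convolution written as a matrix), a hypothetical linear hyper-network `hyper_proj` that maps a style embedding to a weight offset, an integer `region_map` standing in for the semantic map of facial regions, and plain linear interpolation between two style embeddings. All names, dimensions, and the choice of a linear hyper-network are assumptions made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

STYLE_DIM = 8          # hypothetical size of an SBVS style embedding
IN_CH, OUT_CH = 4, 4   # toy channel counts for one generator layer
NUM_REGIONS = 3        # toy number of semantic regions

# Frozen weights of one "pre-trained" generator layer (1x1 conv as a matrix).
base_weight = rng.normal(size=(OUT_CH, IN_CH))

# Hypothetical hyper-network: a single linear map from style code to weight offset.
hyper_proj = rng.normal(scale=0.01, size=(STYLE_DIM, OUT_CH * IN_CH))


def predict_delta(style_code):
    """Predict a weight update for the layer from one style embedding."""
    return (style_code @ hyper_proj).reshape(OUT_CH, IN_CH)


def stylized_layer(features, region_map, region_styles):
    """Apply the layer with a separately predicted weight update per region.

    features:      (H, W, IN_CH) activations
    region_map:    (H, W) integer region labels in [0, NUM_REGIONS)
    region_styles: (NUM_REGIONS, STYLE_DIM) one style embedding per region
    """
    out = np.zeros(features.shape[:2] + (OUT_CH,))
    for r in range(NUM_REGIONS):
        weight = base_weight + predict_delta(region_styles[r])
        mask = (region_map == r)[..., None]   # (H, W, 1) selector for region r
        out += mask * (features @ weight.T)
    return out


def interpolate_styles(style_a, style_b, t):
    """Linearly blend two style embeddings (an assumed interpolation scheme)."""
    return (1.0 - t) * style_a + t * style_b


features = rng.normal(size=(5, 5, IN_CH))
region_map = rng.integers(0, NUM_REGIONS, size=(5, 5))
style_a = rng.normal(size=STYLE_DIM)
style_b = rng.normal(size=STYLE_DIM)

# Use one blended style for every region in this toy example.
blended = interpolate_styles(style_a, style_b, 0.5)
region_styles = np.tile(blended, (NUM_REGIONS, 1))
stylized = stylized_layer(features, region_map, region_styles)
```

With an all-zero style embedding the predicted update vanishes and the layer reduces to the frozen generator layer, which is the sense in which the hyper-network only adjusts, rather than replaces, the pre-trained weights in this sketch.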
