Human Perception of Performance


Dec 05, 2017

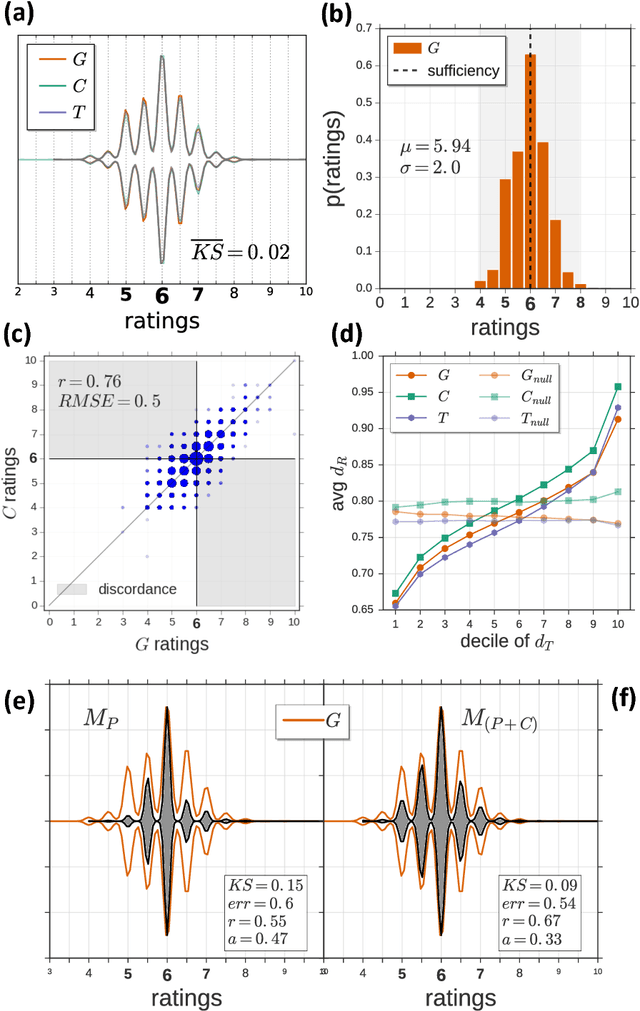

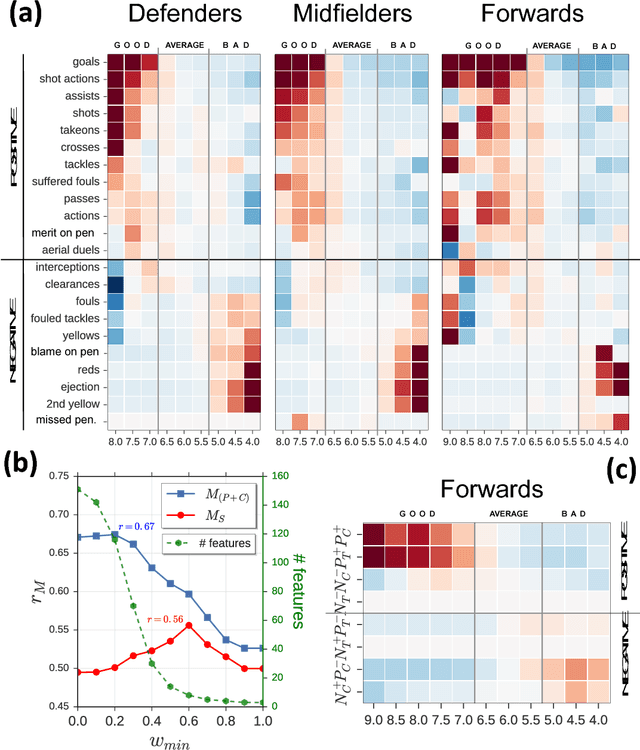

Humans are routinely asked to evaluate the performance of other individuals, separating success from failure and affecting outcomes from science to education and sports. Yet, in many contexts, the metrics driving the human evaluation process remain unclear. Here we analyse a massive dataset capturing players' evaluations by human judges to explore human perception of performance in soccer, the world's most popular sport. We use machine learning to design an artificial judge which accurately reproduces human evaluation, allowing us to demonstrate how human observers are biased towards diverse contextual features. By investigating the structure of the artificial judge, we uncover the aspects of the players' behavior which attract the attention of human judges, demonstrating that human evaluation is based on a noticeability heuristic where only feature values far from the norm are considered to rate an individual's performance.
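As a rough illustration of the noticeability heuristic described above, the sketch below flags only those features of a player whose values lie far from the population norm. It is not the authors' artificial judge: the function name `noticeable_features`, the feature names, the use of z-scores, and the threshold of 2.0 are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def noticeable_features(player, population, threshold=2.0):
    """Return the features of `player` that deviate strongly from the norm.

    Hypothetical sketch: "far from the norm" is modelled here as a z-score
    whose magnitude is at least `threshold`. The abstract does not specify
    how the deviation is actually measured.
    """
    noticed = {}
    for name, value in player.items():
        values = [p[name] for p in population]
        mu = mean(values)
        sigma = pstdev(values)
        if sigma == 0:
            # No variation in the population, so no deviation can be scored.
            continue
        z = (value - mu) / sigma
        if abs(z) >= threshold:
            noticed[name] = z
    return noticed


# Toy data, invented for this example only.
population = [
    {"passes": 40, "goals": 0},
    {"passes": 42, "goals": 0},
    {"passes": 38, "goals": 1},
    {"passes": 41, "goals": 0},
    {"passes": 39, "goals": 0},
]
player = {"passes": 40, "goals": 3}

noticed = noticeable_features(player, population)
print(sorted(noticed))
```

In this toy case the player's pass count sits at the population mean and is ignored, while the unusually high goal count is the only feature that would feed into a rating.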
