Human Activity Recognition using Attribute-Based Neural Networks and Context Information


Oct 28, 2021

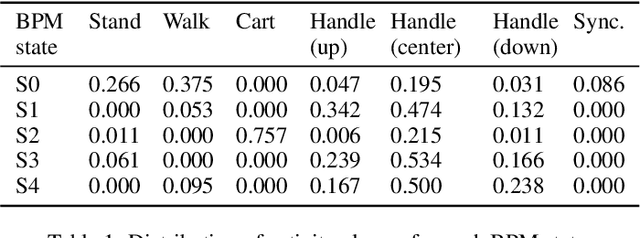

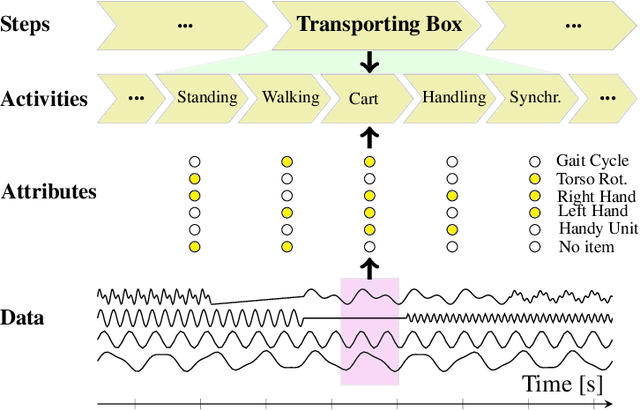

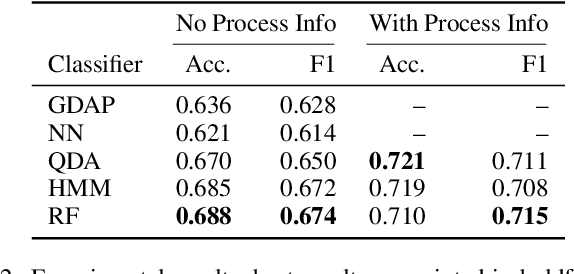

We consider human activity recognition (HAR) from wearable sensor data in manual-work processes, such as warehouse order picking. Such structured domains can often be partitioned into distinct process steps, e.g., packaging or transporting. Each process step can have a different prior distribution over activity classes, e.g., standing or walking, and different system dynamics. Here, we show how such context information can be integrated systematically into a deep neural network-based HAR system. Specifically, we propose a hybrid architecture that combines two components: a deep neural network that estimates high-level movement descriptors (attributes) from the raw sensor data, and a shallow classifier that predicts activity classes from the estimated attributes and, optionally, context information such as the currently executed process step. We show empirically that the proposed architecture increases HAR performance compared to state-of-the-art methods. Additionally, we show that HAR performance can be increased further when information about process steps is incorporated, even when that information is only partially correct.
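The second stage of the hybrid architecture can be illustrated with a minimal sketch. It assumes the attribute estimates are already available (random values stand in for the output of the deep network) and uses multinomial logistic regression as the shallow classifier. The abstract does not state which shallow classifier is used, so that choice, the one-hot encoding of the process step, and all names and dimensions below are illustrative assumptions and not the authors' implementation.

```python
import numpy as np


def one_hot(indices, num_classes):
    """Encode integer labels as one-hot rows."""
    out = np.zeros((len(indices), num_classes))
    out[np.arange(len(indices)), indices] = 1.0
    return out


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def build_features(attributes, process_step=None, num_steps=0):
    """Concatenate estimated attributes with optional one-hot context."""
    if process_step is None:
        return attributes  # context is optional
    return np.hstack([attributes, one_hot(process_step, num_steps)])


def fit_shallow_classifier(features, labels, num_classes, lr=0.5, epochs=200):
    """Fit multinomial logistic regression by batch gradient descent.

    Assumption: a stand-in for whatever shallow classifier the paper uses.
    """
    num_samples, num_features = features.shape
    weights = np.zeros((num_features, num_classes))
    bias = np.zeros(num_classes)
    targets = one_hot(labels, num_classes)
    for _ in range(epochs):
        probs = softmax(features @ weights + bias)
        grad = (probs - targets) / num_samples  # cross-entropy gradient w.r.t. logits
        weights -= lr * (features.T @ grad)
        bias -= lr * grad.sum(axis=0)
    return weights, bias


def predict_proba(features, weights, bias):
    """Return class probabilities for each window."""
    return softmax(features @ weights + bias)


# Synthetic stand-in data (hypothetical sizes, not taken from the paper).
rng = np.random.default_rng(0)
num_samples, num_attributes, num_steps, num_classes = 200, 5, 3, 4
attributes = rng.random((num_samples, num_attributes))       # stand-in for network output
process_step = rng.integers(0, num_steps, size=num_samples)  # context: current process step
labels = rng.integers(0, num_classes, size=num_samples)      # activity classes

features = build_features(attributes, process_step, num_steps)
weights, bias = fit_shallow_classifier(features, labels, num_classes)
probs = predict_proba(features, weights, bias)
```

Calling `build_features(attributes)` without a process step yields the attribute-only variant, which mirrors the optional role of context in the description above.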
