How Sensitive are Sensitivity-Based Explanations?


Jan 27, 2019

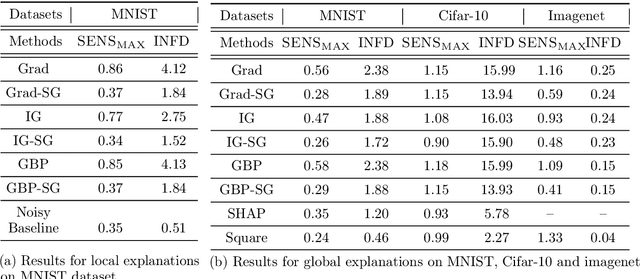

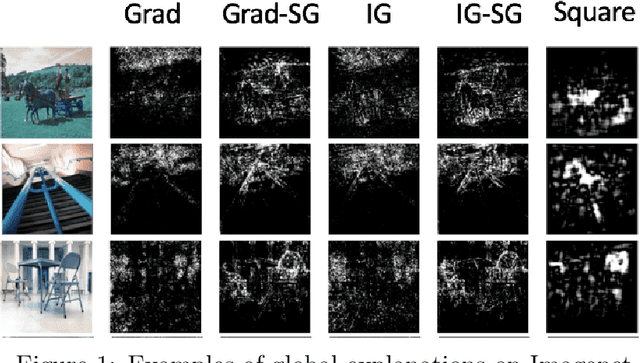

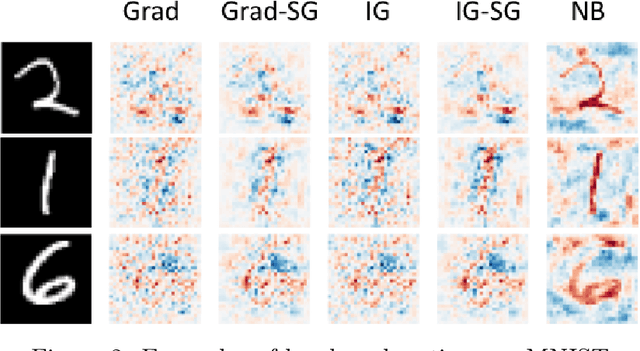

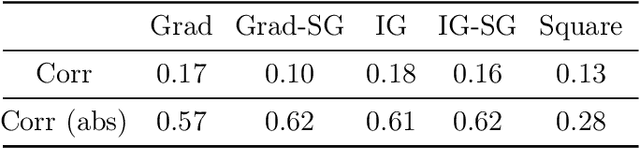

We propose a simple objective evaluation measure for explanations of complex black-box machine learning models. Such explanations have largely been evaluated qualitatively, for example by how humans perceive them, so it is vital to also consider objective measures such as the one we propose in this paper. Our measure, which we call sensitivity, is simple: it characterizes how an explanation changes as we vary the test input. Depending on how we measure these changes and how we vary the input, we arrive at different notions of sensitivity. We also provide a calculus for deriving the sensitivity of complex explanations in terms of that of simpler explanations, which allows easy computation of sensitivities for yet-to-be-proposed explanations. One advantage of an objective evaluation measure is that we can optimize the explanation with respect to it: we show that (1) any given explanation can be simply modified to improve its sensitivity with just a modest deviation from the original explanation, and (2) gradient-based explanations of an adversarially trained network are less sensitive. Perhaps surprisingly, our experiments show that explanations optimized to have lower sensitivity can be more faithful to the model predictions.
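As a rough illustration of the idea, the sketch below estimates one possible notion of sensitivity: the largest change in an explanation over randomly sampled perturbations of the test input. It is not the paper's implementation. The function names, the uniform perturbations within a box of half-width `radius`, the Euclidean norm, and the finite-difference gradient used as the explanation are all assumptions chosen for the example, and inputs are assumed to be 1-D float arrays.

```python
import numpy as np


def gradient_explanation(f, x, eps=1e-5):
    """Hypothetical explanation: central finite-difference gradient of a scalar model f at x."""
    grad = np.zeros_like(x, dtype=float)
    for i in range(x.size):
        step = np.zeros_like(x, dtype=float)
        step[i] = eps
        grad[i] = (f(x + step) - f(x - step)) / (2 * eps)
    return grad


def estimate_sensitivity(explain, x, radius=0.1, n_samples=200, seed=0):
    """Monte Carlo estimate of the largest change in the explanation near x.

    Assumptions: perturbations are drawn uniformly from [-radius, radius]
    in each coordinate, and change is measured with the Euclidean norm.
    Sampling only gives a lower bound on the true maximum.
    """
    rng = np.random.default_rng(seed)
    base = explain(x)
    worst = 0.0
    for _ in range(n_samples):
        delta = rng.uniform(-radius, radius, size=x.shape)
        change = float(np.linalg.norm(explain(x + delta) - base))
        worst = max(worst, change)
    return worst
```

Under these assumptions, the gradient explanation of a linear model is the same at every input, so its estimated sensitivity is zero up to numerical error, while a nonlinear model generally yields a positive value. Swapping the norm or the perturbation distribution gives a different notion of sensitivity.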
