How Do Recommendation Models Amplify Popularity Bias? An Analysis from the Spectral Perspective


Apr 18, 2024

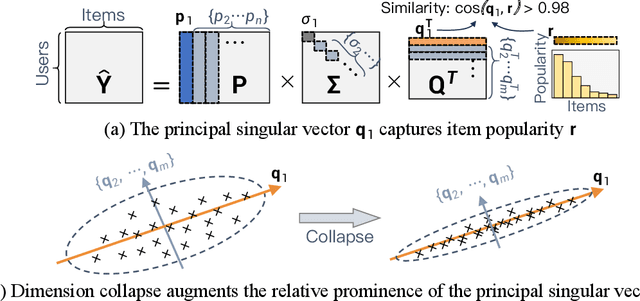

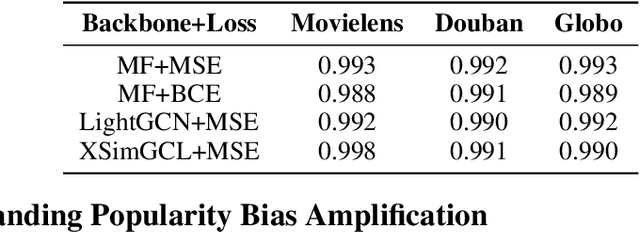

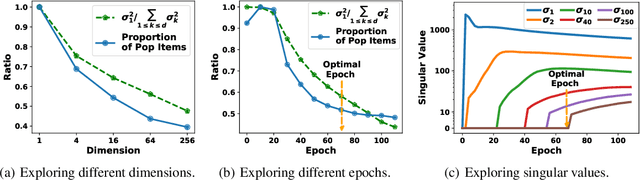

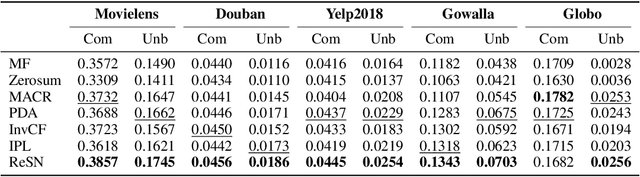

Recommendation Systems (RS) are often plagued by popularity bias. Specifically, when recommendation models are trained on long-tailed datasets, they not only inherit this bias but often exacerbate it. This effect undermines both the precision and fairness of RS and catalyzes the so-called Matthew Effect. Despite wide recognition of this issue, its fundamental causes remain largely elusive. In our research, we delve deeply into popularity bias amplification. Our comprehensive theoretical and empirical investigations lead to two core insights: 1) Item popularity is memorized in the principal singular vector of the score matrix predicted by the recommendation model; 2) The dimension collapse phenomenon amplifies the impact of the principal singular vector on model predictions, intensifying the popularity bias. Based on these insights, we propose a novel method to mitigate this bias by imposing penalties on the magnitude of the principal singular value. Because directly evaluating the gradient of the principal singular value carries a heavy computational burden, we develop an efficient algorithm that harnesses the inherent properties of the singular vector. Extensive experiments across seven real-world datasets and three testing scenarios validate the superiority of our method.
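The two insights can be illustrated with a small NumPy sketch. This is not the paper's algorithm. It is a toy under stated assumptions: a synthetic long-tailed interaction matrix stands in for the predicted score matrix, and the score matrix in the second part is assumed to factor as user embeddings times item embeddings. All names (`click_prob`, `user_emb`, `item_emb`, `principal_singular_value`) are hypothetical. The first part measures how closely the principal right singular vector follows item popularity; the second estimates the principal singular value by power iteration without materializing the full score matrix, which is one generic way to avoid a full SVD.

```python
import numpy as np


def top_right_singular_vector(matrix):
    """Principal right singular vector, with its sign fixed so entries sum to >= 0."""
    _, _, vt = np.linalg.svd(matrix, full_matrices=False)
    v = vt[0]
    return v if v.sum() >= 0 else -v


def cosine(a, b):
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def principal_singular_value(user_emb, item_emb, iters=500, seed=0):
    """Estimate sigma_1 of Y = user_emb @ item_emb.T via power iteration on Y^T Y.

    Y is never formed; each step costs O((n_users + n_items) * dim).
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(size=item_emb.shape[0])
    x /= np.linalg.norm(x)
    for _ in range(iters):
        y = user_emb @ (item_emb.T @ x)   # Y x, shape (n_users,)
        x = item_emb @ (user_emb.T @ y)   # Y^T (Y x), shape (n_items,)
        x /= np.linalg.norm(x)
    # For a unit vector x close to the top right singular vector, ||Y x|| ~ sigma_1.
    return float(np.linalg.norm(user_emb @ (item_emb.T @ x)))


rng = np.random.default_rng(0)
n_users, n_items, dim = 500, 200, 8

# Assumption: a long-tailed toy dataset where item j is clicked with
# probability decaying as a power law in its rank.
click_prob = 0.6 / np.arange(1, n_items + 1) ** 0.8
interactions = (rng.random((n_users, n_items)) < click_prob).astype(float)
popularity = interactions.sum(axis=0)

# Insight 1 (toy check): the principal singular vector tracks item popularity.
alignment = cosine(top_right_singular_vector(interactions), popularity)

# Assumption: a factorized score matrix with random embeddings.
user_emb = rng.normal(size=(n_users, dim))
item_emb = rng.normal(size=(n_items, dim))
sigma_fast = principal_singular_value(user_emb, item_emb)
sigma_exact = float(np.linalg.svd(user_emb @ item_emb.T, compute_uv=False)[0])

print(f"cosine(principal vector, popularity) = {alignment:.4f}")
print(f"sigma_1 via power iteration = {sigma_fast:.6f}, via full SVD = {sigma_exact:.6f}")
```

In a training loop, a quantity like `sigma_fast ** 2` could be added to the recommendation loss as a penalty term, scaled by a hypothetical weight; how the paper actually computes and differentiates its penalty is not specified in this abstract.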
