Hierarchical Attention Networks for Medical Image Segmentation


Nov 25, 2019

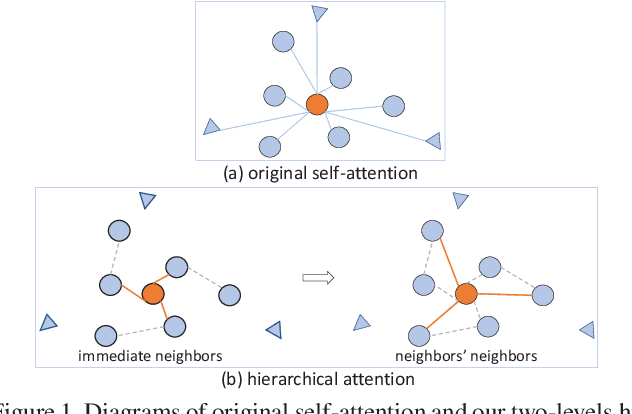

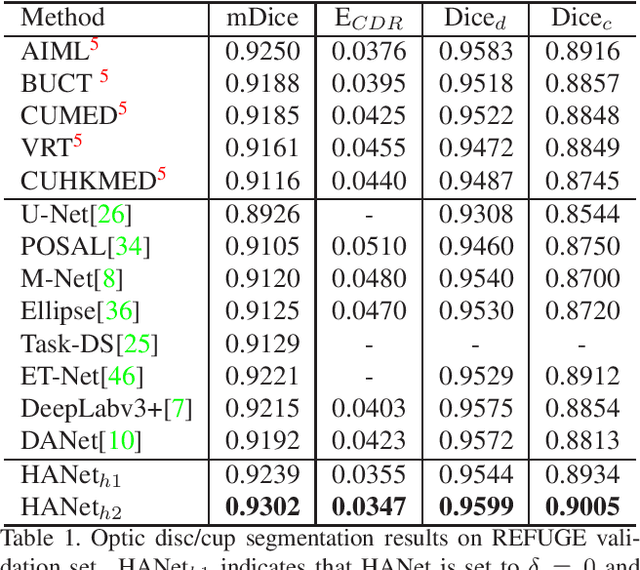

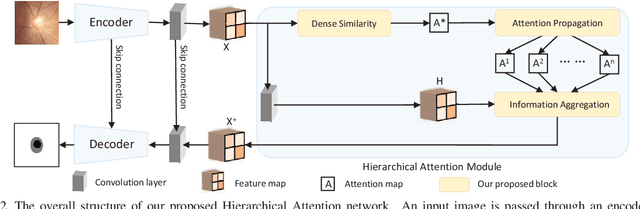

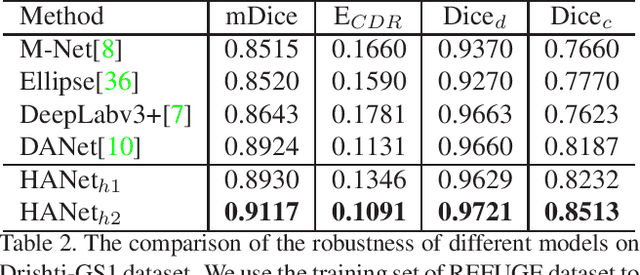

Medical images are characterized by inter-class indistinction, high variability, and noise, which make pixel-level recognition challenging. Unlike previous self-attention-based methods that capture context information from a single level, we reformulate the self-attention mechanism from the view of a high-order graph and propose a novel method, the Hierarchical Attention Network (HANet), for medical image segmentation. Concretely, an HA module embedded in HANet captures context information from neighbors at multiple levels, where these neighbors are extracted from the high-order graph. In the high-order graph, an edge exists between two nodes only if the correlation between them is high enough, which naturally reduces the noisy attention information caused by inter-class indistinction. The proposed HA module is robust to variance in the input and can be flexibly inserted into existing convolutional neural networks. We conduct experiments on three medical image segmentation tasks: optic disc/cup segmentation, blood vessel segmentation, and lung segmentation. Extensive results show that our method is more effective and robust than existing state-of-the-art methods.
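The idea of attending only to graph neighbors at several levels can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function name `hierarchical_attention`, the cosine-similarity threshold, the use of cumulative k-hop neighborhoods, and the plain averaging across levels are all assumptions made here for illustration, since the abstract does not specify them.

```python
import numpy as np


def hierarchical_attention(x, threshold=0.5, levels=2):
    """Illustrative sketch only (hypothetical design, not the paper's HA module).

    x: (n, d) array of node features, e.g. flattened pixel features.
    Returns an (n, d) array of context features averaged over `levels`.
    """
    n, d = x.shape

    # Correlation between nodes, taken here to be cosine similarity (assumption).
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    unit = x / np.maximum(norms, 1e-12)
    sim = unit @ unit.T

    # First-order graph: keep an edge only if the correlation is high enough.
    adj = (sim >= threshold).astype(np.int64)
    np.fill_diagonal(adj, 1)  # self-loops, so every node has at least one neighbor

    # Standard scaled dot-product attention scores, masked per level below.
    scores = (x @ x.T) / np.sqrt(d)

    reach = np.eye(n, dtype=np.int64)
    out = np.zeros((n, d), dtype=float)
    for _ in range(levels):
        # Nodes reachable within one more hop (higher-order neighbors).
        reach = ((reach @ adj) > 0).astype(np.int64)
        mask = reach.astype(bool)

        # Masked softmax: non-neighbors receive zero attention weight.
        masked = np.where(mask, scores, -np.inf)
        masked = masked - masked.max(axis=1, keepdims=True)
        weights = np.exp(masked)
        weights = weights / weights.sum(axis=1, keepdims=True)

        out += weights @ x

    # Combine levels by simple averaging (assumption).
    return out / levels


# Two well-separated groups of nodes: {0, 1} and {2, 3}.
features = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
context = hierarchical_attention(features, threshold=0.5, levels=2)
```

With the threshold at 0.5, nodes 0 and 1 are never connected to nodes 2 and 3, so each node's context is a weighted mix of its own group only. Lowering the threshold far enough connects every pair and recovers ordinary dense self-attention, where features from the other group leak into the result.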
