Hierarchical Reinforcement Learning in StarCraft II with Human Expertise in Subgoals Selection

Paper and Code

Aug 08, 2020

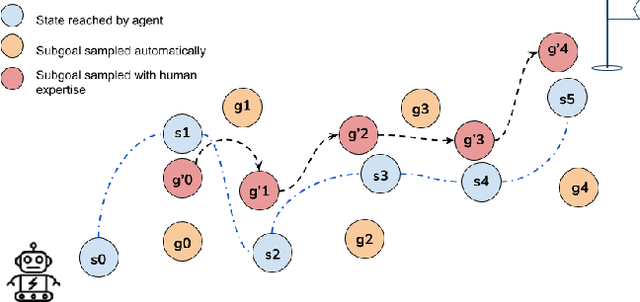

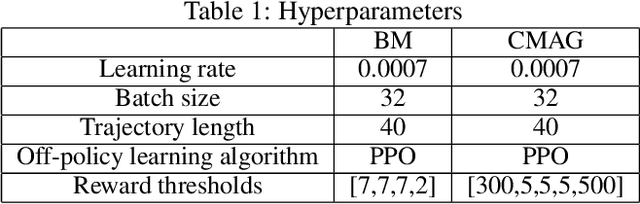

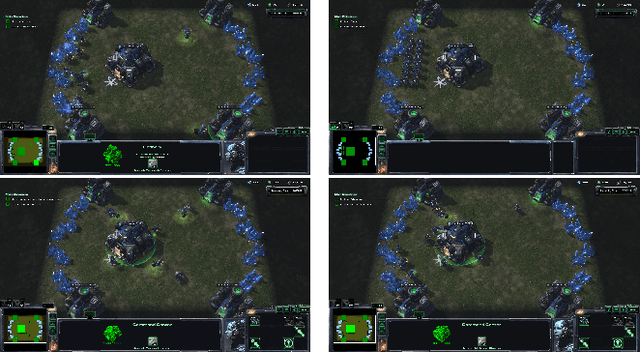

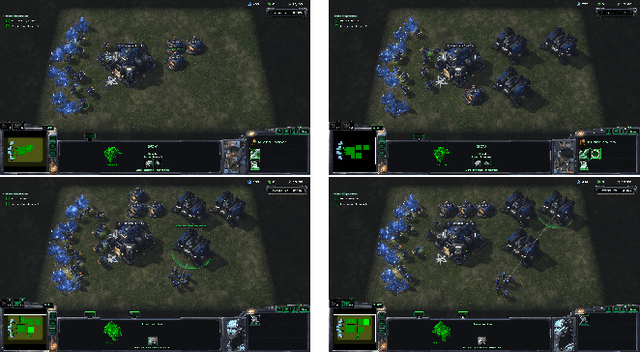

This work is inspired by recent advances in hierarchical reinforcement learning (HRL) (Barto and Mahadevan 2003; Hengst 2010) and by improvements in learning efficiency from heuristic-based subgoal selection and hindsight experience replay (HER) (Andrychowicz et al. 2017; Levy et al. 2019). We propose a new method that integrates HRL, HER, and effective subgoal selection based on human expertise to support sample-efficient learning and to enhance the interpretability of the agent's behavior. Human expertise remains indispensable in many areas, such as medicine (Buch, Ahmed, and Maruthappu 2018) and law (Cath 2018), where interpretability, explainability, and transparency are crucial in the decision-making process for ethical and legal reasons. Our method simplifies the complex set of tasks required to achieve the overall objective by decomposing it into subgoals at different levels of abstraction. Incorporating relevant subjective knowledge also significantly reduces the computational resources spent on exploration in RL, especially in high-speed, changing, and complex environments where the transition dynamics cannot be effectively learned and modelled in a short time. Experimental results in two StarCraft II (SC2) minigames demonstrate that our method achieves better sample efficiency than flat and end-to-end RL methods, and that it provides an effective way to explain the agent's performance.
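To make the role of HER in such a pipeline more concrete, the following is a minimal sketch of hindsight relabeling with the generic "final" strategy described by Andrychowicz et al. (2017). It is not the authors' implementation: the toy grid states, the `Transition` record, and the functions `sparse_reward` and `hindsight_relabel` are hypothetical names introduced only for illustration, and no StarCraft II interface is involved. A subgoal here stands in for whatever a human expert might specify.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple

# Assumption: states and subgoals are toy 2-D grid positions, not SC2 observations.
State = Tuple[int, int]


@dataclass(frozen=True)
class Transition:
    state: State
    action: str
    next_state: State
    goal: State
    reward: float


def sparse_reward(next_state: State, goal: State) -> float:
    """Return 0.0 when the subgoal is reached, otherwise -1.0."""
    return 0.0 if next_state == goal else -1.0


def hindsight_relabel(episode: List[Transition]) -> List[Transition]:
    """Relabel an episode as if its final achieved state had been the goal."""
    if not episode:
        return []
    achieved = episode[-1].next_state
    return [
        replace(t, goal=achieved, reward=sparse_reward(t.next_state, achieved))
        for t in episode
    ]


# Hypothetical episode: the agent was asked to reach (3, 3) but stopped at (2, 0).
goal = (3, 3)
path = [(0, 0), (1, 0), (2, 0)]
episode = [
    Transition(s, "move_right", s_next, goal, sparse_reward(s_next, goal))
    for s, s_next in zip(path, path[1:])
]

# Store both the original and the relabeled transitions for off-policy learning.
relabeled = hindsight_relabel(episode)
replay_buffer = episode + relabeled
```

In this sketch the original episode carries only failure rewards, while the relabeled copy contains one successful transition, which illustrates how a missed subgoal can still yield a useful learning signal.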
