Heterogeneous Network Representation Learning: Survey, Benchmark, Evaluation, and Beyond


Apr 01, 2020

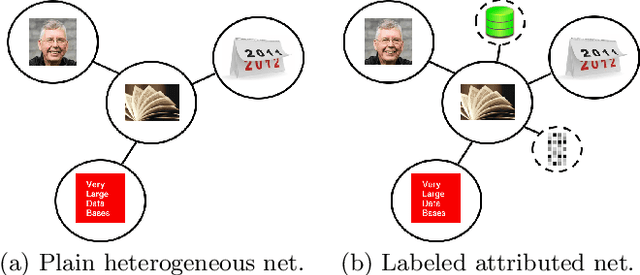

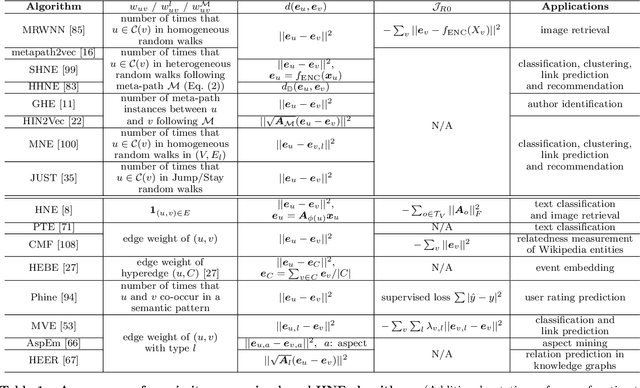

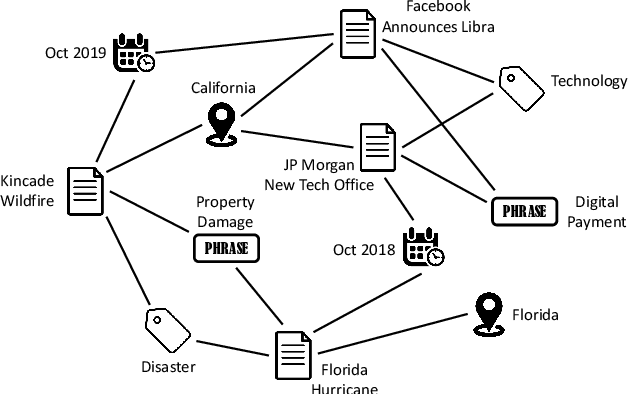

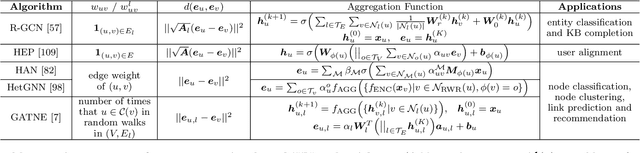

Since real-world objects and their interactions are often multi-modal and multi-typed, heterogeneous networks have been widely used as a more powerful, realistic, and generic superclass of traditional homogeneous networks (graphs). Meanwhile, representation learning (a.k.a. embedding) has recently been intensively studied and shown effective for various network mining and analytical tasks. Although a broad body of heterogeneous network embedding (HNE) algorithms already exists, there is no dedicated survey; as the first contribution of this work, we provide a unified paradigm for systematically categorizing existing HNE algorithms and analyzing their merits. Moreover, existing HNE algorithms, though mostly claimed to be generic, are often evaluated on different datasets. While understandable given that HNE work is naturally application-driven, such indirect comparisons make it hard to attribute improved task performance to either effective data preprocessing or novel technical design, especially considering the many possible ways to construct a heterogeneous network from real-world application data. Therefore, as the second contribution, we create four benchmark datasets from different sources, with various properties regarding scale, structure, attribute/label availability, etc., for the comprehensive evaluation of HNE algorithms. As the third contribution, we carefully refactor and amend the implementations of ten popular HNE algorithms, create friendly interfaces for them, and provide all-around comparisons among them over multiple tasks and experimental settings.
