Hardware Architecture for Large Parallel Array of Random Feature Extractors applied to Image Recognition

Dec 24, 2015

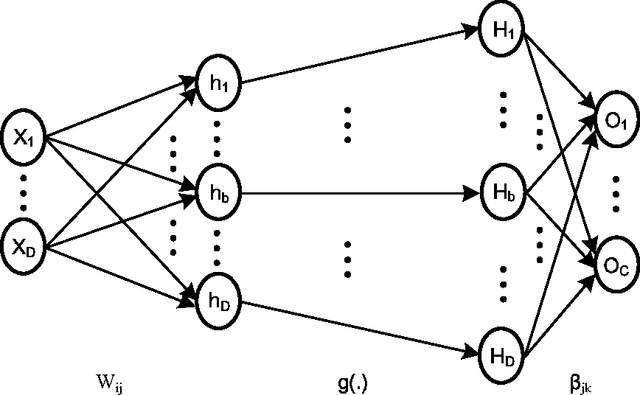

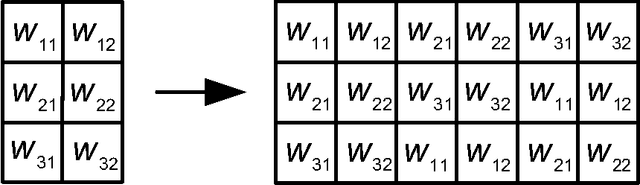

We demonstrate a low-power, compact hardware implementation of a Random Feature Extractor (RFE) core. Because complex tasks such as image recognition require a large set of features, we show how a weight reuse technique can virtually expand the number of random features available from the RFE core. Further, we show how to avoid the computation cost wasted on propagating "incognizant" or redundant random features. As a proof of concept, we validated our approach by using the RFE core as the first stage of an Extreme Learning Machine (ELM), a two-layer neural network, and achieved $>97\%$ accuracy on the MNIST database of handwritten digits. The ELM's first stage, the RFE, is implemented on an analog ASIC occupying a $5$ mm $\times$ $5$ mm area in $0.35$ $\mu$m CMOS and consuming $5.95$ $\mu$J/classify while using $\approx 5000$ effective hidden neurons. The ELM's second stage, consisting of just adders, can be implemented as a digital circuit with an estimated energy consumption of $20.9$ nJ/classify. With a total energy consumption of only $5.97$ $\mu$J/classify, this low-power mixed-signal ASIC can act as a co-processor in portable electronic gadgets with cameras.
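The total energy figure follows from adding the two per-stage figures quoted above. The short Python sketch below checks that arithmetic. The variable and function names are illustrative only and do not come from the paper.

```python
# Per-classification energy figures quoted in the abstract, in joules.
RFE_ENERGY_J = 5.95e-6     # analog first stage (RFE): 5.95 uJ/classify
ADDER_ENERGY_J = 20.9e-9   # estimated digital second stage: 20.9 nJ/classify


def total_energy_uj(first_stage_j, second_stage_j):
    """Return the combined per-classification energy in microjoules."""
    return (first_stage_j + second_stage_j) * 1e6


def second_stage_share(first_stage_j, second_stage_j):
    """Return the fraction of the total energy spent in the second stage."""
    return second_stage_j / (first_stage_j + second_stage_j)


total_uj = total_energy_uj(RFE_ENERGY_J, ADDER_ENERGY_J)
share = second_stage_share(RFE_ENERGY_J, ADDER_ENERGY_J)

print(f"total: {total_uj:.2f} uJ/classify")
print(f"second-stage share: {share:.2%}")
```

The sum is $5.9709$ $\mu$J, which rounds to the quoted $5.97$ $\mu$J/classify. Under these figures the digital second stage accounts for well under one percent of the total, so the analog first stage dominates the energy budget.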
