GuardNN: Secure DNN Accelerator for Privacy-Preserving Deep Learning

Aug 26, 2020

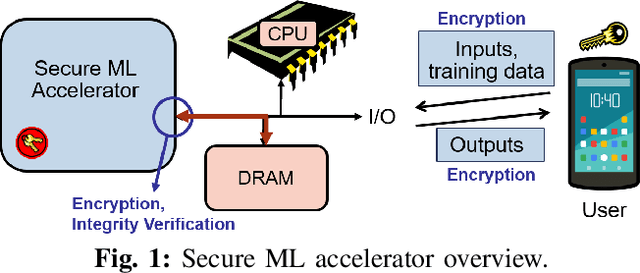

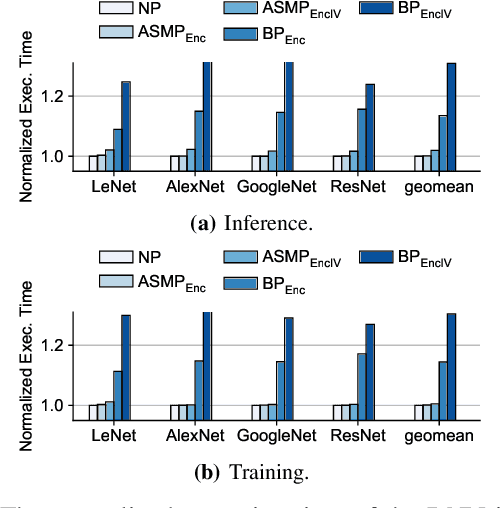

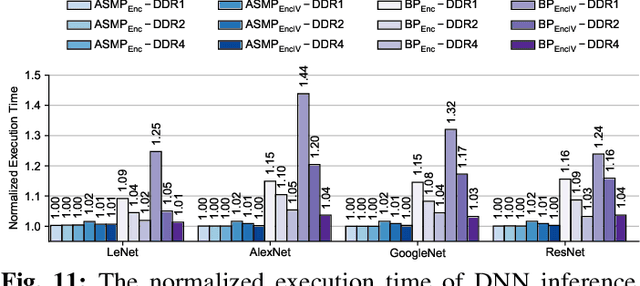

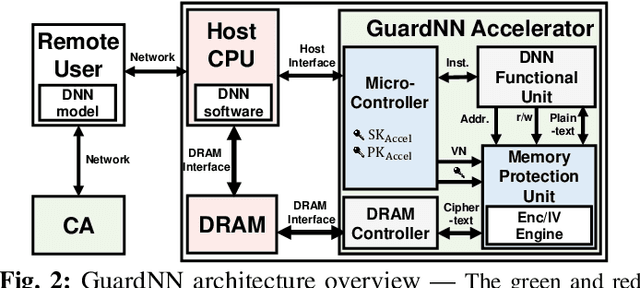

This paper proposes GuardNN, a secure deep neural network (DNN) accelerator that provides strong hardware-based protection for user data and model parameters, even in an untrusted environment. GuardNN shows that the architecture and protection can be customized for a specific application to provide strong confidentiality and integrity protection with negligible overhead. The design of the GuardNN instruction set reduces the trusted computing base (TCB) to just the accelerator and enables confidentiality protection without the overhead of integrity protection. GuardNN also introduces a new application-specific memory protection scheme to minimize the overhead of memory encryption and integrity verification. The scheme shows that most of the off-chip metadata in today's state-of-the-art memory protection can be removed by exploiting the known memory access patterns of a DNN accelerator. GuardNN is implemented as an FPGA prototype, which demonstrates effective protection with less than 2% performance overhead for inference over a variety of modern DNN models.
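
To make the metadata-removal idea concrete, here is a minimal sketch of one way a predictable access pattern could replace stored metadata. It is not GuardNN's actual design, which the abstract does not detail. It assumes a counter-mode style scheme in which each memory block is encrypted with a pad derived from its address and a version number, and it assumes that each layer's output buffer is written exactly once per inference, so the version can be recomputed from on-chip counters instead of being fetched from off-chip memory. All names (`schedule_version`, `keystream`, `encrypt_block`) are hypothetical, and HMAC-SHA256 stands in for a hardware block cipher.

```python
import hashlib
import hmac


def schedule_version(inference_id: int, layer: int, num_layers: int) -> int:
    """Derive a write version from on-chip counters only (assumption:
    each layer's output buffer is written once per inference)."""
    if not 0 <= layer < num_layers:
        raise ValueError("layer index out of range")
    return inference_id * num_layers + layer


def keystream(key: bytes, address: int, version: int, length: int) -> bytes:
    """Toy one-time pad: HMAC-SHA256 over (address, version, chunk index).
    A real accelerator would use a block cipher such as AES here."""
    out = b""
    chunk = 0
    while len(out) < length:
        msg = (
            address.to_bytes(8, "little")
            + version.to_bytes(8, "little")
            + chunk.to_bytes(4, "little")
        )
        out += hmac.new(key, msg, hashlib.sha256).digest()
        chunk += 1
    return out[:length]


def encrypt_block(key: bytes, address: int, version: int, data: bytes) -> bytes:
    """XOR the data with the pad; applying it twice decrypts."""
    pad = keystream(key, address, version, len(data))
    return bytes(d ^ p for d, p in zip(data, pad))


# Usage: the reader recomputes the version from the schedule,
# so no per-block counter has to be stored in off-chip memory.
key = b"\x01" * 32          # hypothetical device key
address = 0x1000            # hypothetical block address
activations = bytes(range(64))

v_write = schedule_version(inference_id=3, layer=2, num_layers=10)
ciphertext = encrypt_block(key, address, v_write, activations)

v_read = schedule_version(inference_id=3, layer=2, num_layers=10)
recovered = encrypt_block(key, address, v_read, ciphertext)
```

In a general-purpose memory encryption engine, the version would be a per-block counter stored off-chip and itself protected; the sketch only illustrates why a fixed, known write schedule makes that stored counter redundant. Integrity verification is left out here.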
