Grouped sparse projection

Dec 11, 2019

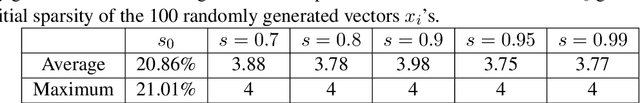

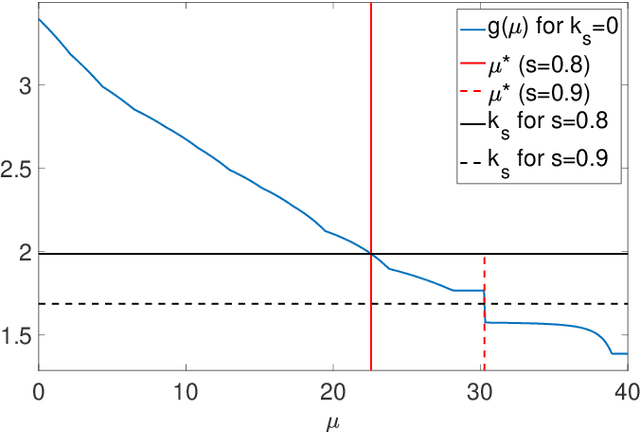

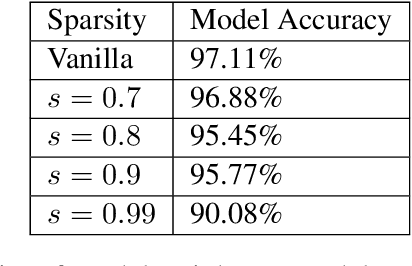

As evident from deep learning, very large models bring improvements in training dynamics and representation power. Yet smaller models have the benefits of energy efficiency and interpretability. To get the benefits of both ends of the spectrum, we often encourage sparsity in the model. Unfortunately, most existing approaches do not offer a controllable way to request a desired value of sparsity through an interpretable parameter. In this paper, we design a new sparse projection method for a set of vectors in order to achieve a desired average level of sparsity, which is measured using the ratio of the $\ell_1$ and $\ell_2$ norms. Most existing methods project each vector individually to achieve a target sparsity, hence the user has to choose a sparsity level for each vector (e.g., impose that all vectors have the same sparsity). Instead, we project all vectors together to achieve an average target sparsity, where the sparsity levels of the individual vectors are automatically tuned. We also propose a generalization of this projection using a new notion of weighted sparsity, measured using the ratio of a weighted $\ell_1$ norm and the $\ell_2$ norm. These projections can be used in particular to sparsify the columns of a matrix, which we use to compute sparse nonnegative matrix factorization and to learn sparse deep networks.
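
To make the sparsity measure concrete, here is a minimal sketch of how an average $\ell_1$/$\ell_2$-based sparsity could be evaluated over the columns of a matrix. The abstract does not state the exact normalization, so this assumes the commonly used Hoyer form $(\sqrt{n} - \|x\|_1 / \|x\|_2) / (\sqrt{n} - 1)$, which is 0 for a vector with equal-magnitude entries and 1 for a vector with a single nonzero entry. The function names `hoyer_sparsity` and `average_column_sparsity` are illustrative and do not come from the paper's code. The sketch only measures sparsity; it does not implement the grouped projection itself.

```python
import numpy as np


def hoyer_sparsity(x):
    """Sparsity of a vector from the ratio of its l1 and l2 norms.

    Assumed normalization (Hoyer form): 0 for equal-magnitude entries,
    1 for a vector with exactly one nonzero entry.
    """
    x = np.asarray(x, dtype=float).ravel()
    n = x.size
    if n < 2:
        raise ValueError("the measure needs at least two entries")
    l1 = np.abs(x).sum()
    l2 = np.sqrt((x ** 2).sum())
    if l2 == 0.0:
        raise ValueError("the measure is undefined for the zero vector")
    return (np.sqrt(n) - l1 / l2) / (np.sqrt(n) - 1.0)


def average_column_sparsity(W):
    """Mean of the per-column sparsity values of a 2-D array."""
    W = np.asarray(W, dtype=float)
    return float(np.mean([hoyer_sparsity(W[:, j]) for j in range(W.shape[1])]))


if __name__ == "__main__":
    # One fully sparse column and one fully dense column.
    W = np.array([[1.0, 1.0],
                  [0.0, 1.0],
                  [0.0, 1.0],
                  [0.0, 1.0]])
    print(average_column_sparsity(W))
```

A grouped projection in the sense of the abstract would constrain only this average, leaving the per-column values free to differ, whereas a column-wise projection would fix each column's value separately.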
