GraphMixup: Improving Class-Imbalanced Node Classification on Graphs by Self-supervised Context Prediction

Paper and Code

Jun 21, 2021

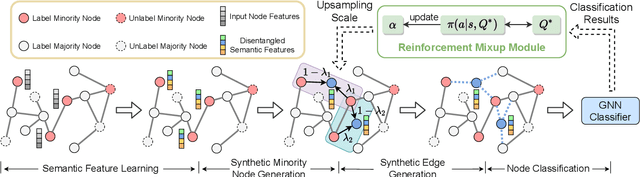

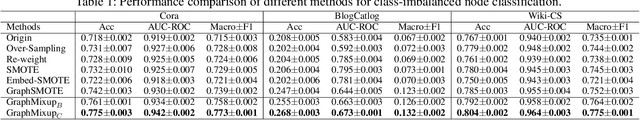

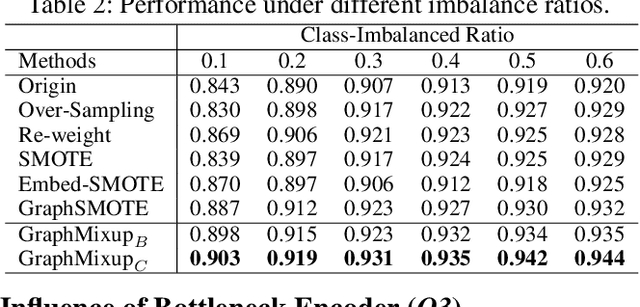

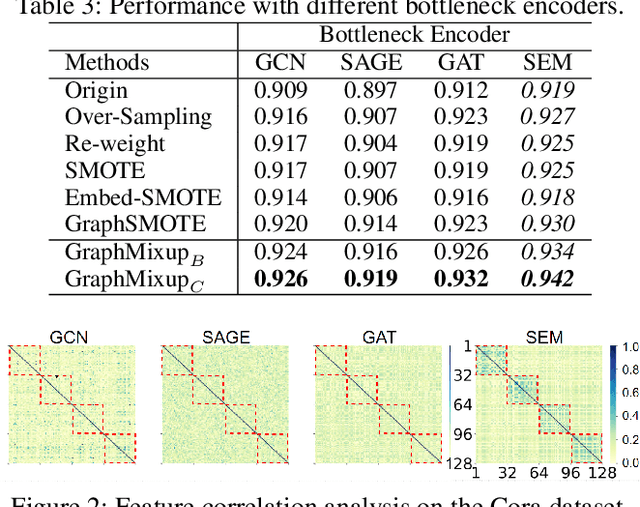

Recent years have witnessed great success in handling node classification tasks with Graph Neural Networks (GNNs). However, most existing GNNs are based on the assumption that node samples for different classes are balanced, while for many real-world graphs, there exists the problem of class imbalance, i.e., some classes may have much fewer samples than others. In this case, directly training a GNN classifier with raw data would under-represent samples from those minority classes and result in sub-optimal performance. This paper presents GraphMixup, a novel mixup-based framework for improving class-imbalanced node classification on graphs. However, directly performing mixup in the input space or embedding space may produce out-of-domain samples due to the extreme sparsity of minority classes; hence we construct semantic relation spaces that allows the Feature Mixup to be performed at the semantic level. Moreover, we apply two context-based self-supervised techniques to capture both local and global information in the graph structure and then propose Edge Mixup specifically for graph data. Finally, we develop a \emph{Reinforcement Mixup} mechanism to adaptively determine how many samples are to be generated by mixup for those minority classes. Extensive experiments on three real-world datasets show that GraphMixup yields truly encouraging results for class-imbalanced node classification tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge