Gradual Domain Adaptation via Self-Training of Auxiliary Models

Paper and Code

Jun 18, 2021

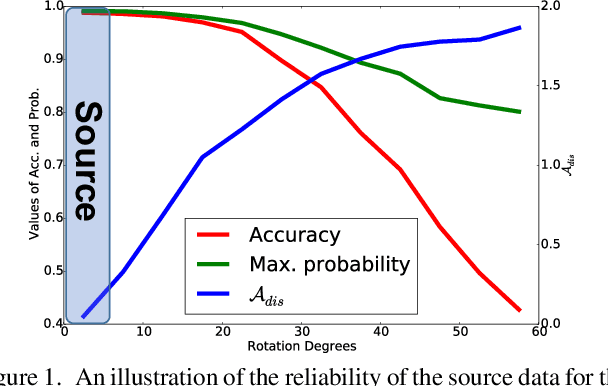

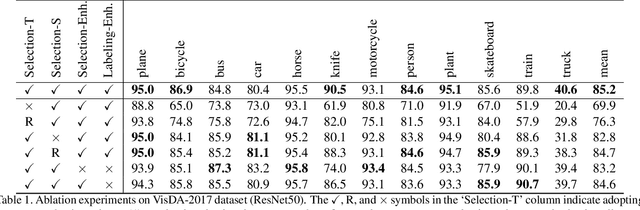

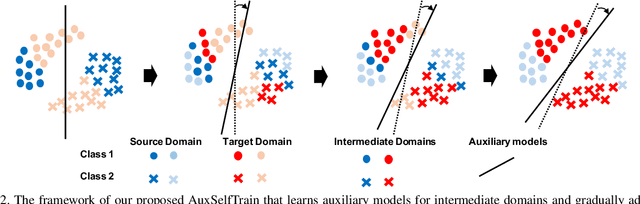

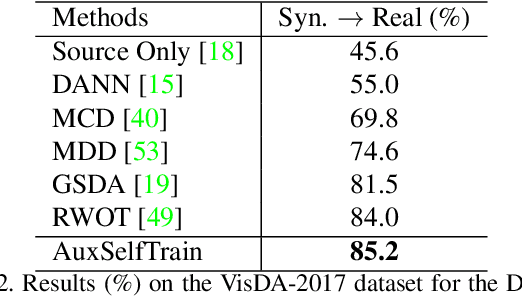

Domain adaptation becomes more challenging as the gap between source and target domains widens. Motivated by an empirical analysis of how reliable labeled source data remains as the target domain grows more distant, we propose self-training of auxiliary models (AuxSelfTrain), which learns models for intermediate domains and gradually overcomes the increasing shift across domains. We introduce evolving intermediate domains as combinations of a decreasing proportion of source data and an increasing proportion of target data, sampled to minimize the domain distance between consecutive domains. The source model can then be gradually adapted for use in the target domain by self-training auxiliary models on these evolving intermediate domains. We also introduce an enhanced indicator for sample selection via implicit ensemble and extend the proposed method to semi-supervised domain adaptation. Experiments on benchmark datasets for unsupervised and semi-supervised domain adaptation verify its efficacy.
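
The idea of intermediate domains that mix a shrinking share of source data with a growing share of pseudo-labeled target data can be illustrated with a small sketch. This is not the authors' implementation: the linear mixing schedule, the nearest-centroid classifier, the margin-based confidence score, and all function names (`mixing_schedule`, `fit_centroids`, `predict`, `gradual_self_train`) are assumptions made for illustration. In particular, the sketch picks target samples by confidence and source samples at random, rather than sampling to minimize the distance between consecutive domains as the abstract describes.

```python
import numpy as np


def mixing_schedule(num_steps):
    """Assumed linear schedule: (source_fraction, target_fraction) per step."""
    return [(1 - m / num_steps, m / num_steps) for m in range(1, num_steps + 1)]


def fit_centroids(x, y, num_classes, fallback=None):
    """Toy 'model': one centroid per class (stand-in for a deep network)."""
    centroids = []
    for c in range(num_classes):
        members = x[y == c]
        if len(members) == 0 and fallback is not None:
            # Keep the previous centroid if a class received no samples.
            centroids.append(fallback[c])
        else:
            centroids.append(members.mean(axis=0))
    return np.stack(centroids)


def predict(centroids, x):
    """Return nearest-centroid labels and a margin-based confidence."""
    dist = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
    labels = dist.argmin(axis=1)
    ordered = np.sort(dist, axis=1)
    confidence = ordered[:, 1] - ordered[:, 0]  # gap between two nearest classes
    return labels, confidence


def gradual_self_train(xs, ys, xt, num_classes, num_steps, rng):
    """Adapt a source model step by step through mixed intermediate domains."""
    model = fit_centroids(xs, ys, num_classes)  # source model
    for src_frac, tgt_frac in mixing_schedule(num_steps):
        pseudo, conf = predict(model, xt)  # pseudo-label the target data
        n_src = int(round(src_frac * len(xs)))
        n_tgt = int(round(tgt_frac * len(xt)))
        src_idx = rng.permutation(len(xs))[:n_src]  # random source subset
        tgt_idx = np.argsort(-conf)[:n_tgt]         # most confident targets
        x_mix = np.concatenate([xs[src_idx], xt[tgt_idx]])
        y_mix = np.concatenate([ys[src_idx], pseudo[tgt_idx]])
        # Train the next auxiliary model on the intermediate domain.
        model = fit_centroids(x_mix, y_mix, num_classes, fallback=model)
    return model
```

With `num_steps = 4`, the assumed schedule moves from 75% source and 25% target data in the first intermediate domain to target data only in the last, so the final auxiliary model is trained purely on pseudo-labeled target samples.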
