Gradients should stay on Path: Better Estimators of the Reverse- and Forward KL Divergence for Normalizing Flows

Paper and Code

Jul 17, 2022

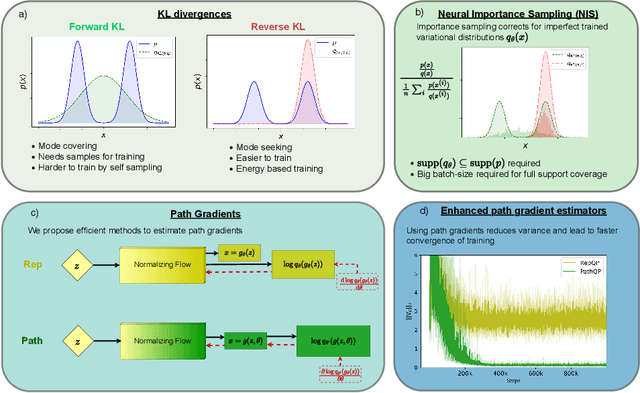

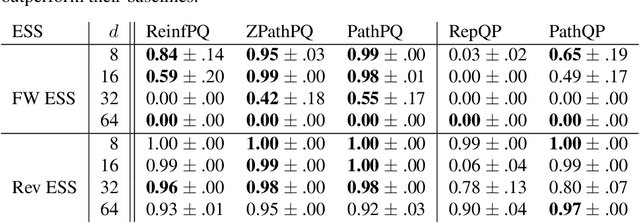

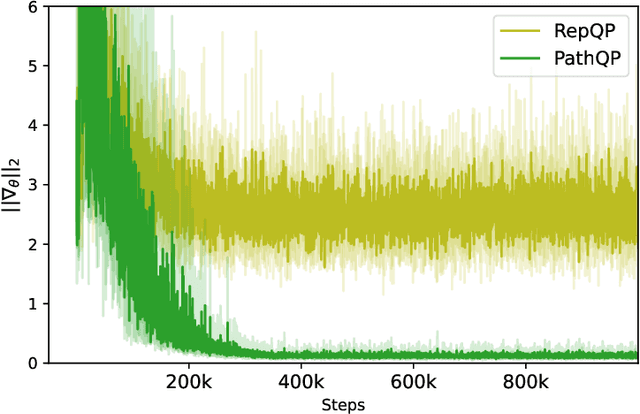

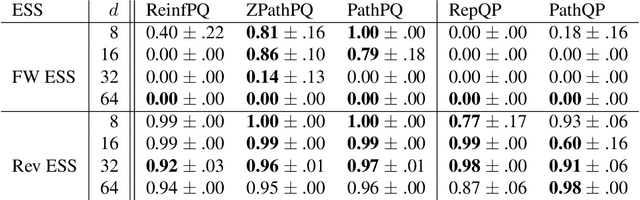

We propose an algorithm to estimate the path-gradient of both the reverse and forward Kullback-Leibler divergence for an arbitrary manifestly invertible normalizing flow. The resulting path-gradient estimators are straightforward to implement, have lower variance, and lead not only to faster convergence of training but also to better overall approximation results compared to standard total gradient estimators. We also demonstrate that path-gradient training is less susceptible to mode-collapse. In light of our results, we expect that path-gradient estimators will become the new standard method to train normalizing flows for variational inference.

* 29 pages, 8 figures

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge