Global Capacity Measures for Deep ReLU Networks via Path Sampling


Oct 22, 2019

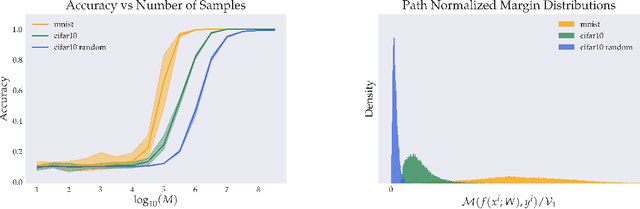

Classical results on the statistical complexity of linear models have commonly identified the norm of the weights $\|w\|$ as a fundamental capacity measure. Generalizations of this measure to the setting of deep networks have been varied, though a frequently identified quantity is the product of weight norms of each layer. In this work, we show that for a large class of networks possessing a positive homogeneity property, similar bounds may be obtained instead in terms of the norm of the product of weights. Our proof technique generalizes a recently proposed sampling argument, which allows us to demonstrate the existence of sparse approximants of positive homogeneous networks. This yields covering number bounds, which can be converted to generalization bounds for multi-class classification that are comparable to, and in certain cases improve upon, existing results in the literature. Finally, we investigate our sampling procedure empirically, which yields results consistent with our theory.
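To make the contrast between the two capacity measures concrete, the following is a minimal sketch, not the paper's construction. It assumes the Frobenius norm, reads "product of weights" as the plain matrix product of the layer matrices, and uses a small bias-free ReLU network with random Gaussian weights; the abstract fixes none of these choices. The helper names (`matmul`, `frobenius`, `relu_net`) are hypothetical. The sketch also checks the positive homogeneity property numerically: scaling the input by a positive factor scales the output by the same factor.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def frobenius(m):
    """Frobenius norm (an assumed choice; the abstract does not name a norm)."""
    return math.sqrt(sum(x * x for row in m for x in row))


def relu_net(weights, x):
    """Bias-free ReLU network: ReLU after every layer except the last."""
    h = x
    for i, w in enumerate(weights):
        h = [sum(w_ij * h_j for w_ij, h_j in zip(row, h)) for row in w]
        if i < len(weights) - 1:
            h = [max(0.0, v) for v in h]
    return h


rng = random.Random(0)
widths = [4, 5, 5, 3]  # input width, two hidden widths, output width (illustrative)
# weights[i] maps a vector of size widths[i] to one of size widths[i + 1].
weights = [
    [[rng.gauss(0, 1) for _ in range(widths[i])] for _ in range(widths[i + 1])]
    for i in range(len(widths) - 1)
]

# Measure 1: product of the per-layer norms.
prod_of_norms = math.prod(frobenius(w) for w in weights)

# Measure 2: norm of the product W3 @ W2 @ W1.
product = weights[0]
for w in weights[1:]:
    product = matmul(w, product)
norm_of_prod = frobenius(product)

# Positive homogeneity: f(alpha * x) == alpha * f(x) for alpha > 0.
x = [rng.gauss(0, 1) for _ in range(widths[0])]
alpha = 2.5
scaled = relu_net(weights, [alpha * v for v in x])
base = relu_net(weights, x)
homogeneity_gap = max(abs(s - alpha * b) for s, b in zip(scaled, base))

print(f"product of norms: {prod_of_norms:.3f}")
print(f"norm of product:  {norm_of_prod:.3f}")
print(f"homogeneity gap:  {homogeneity_gap:.2e}")
```

Because the Frobenius norm is submultiplicative, the norm of the product can never exceed the product of the norms, which is why a bound stated in terms of the former can be tighter.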
