Geographic Adaptation of Pretrained Language Models


Mar 16, 2022

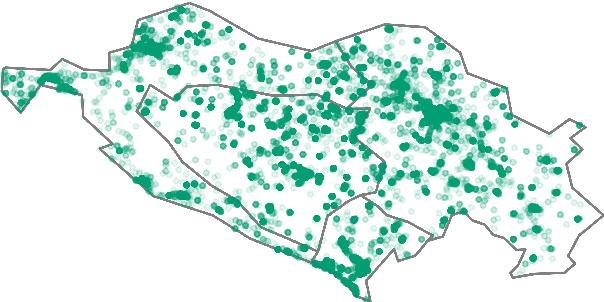

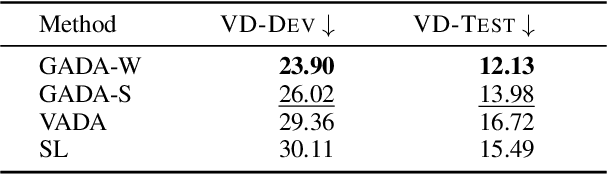

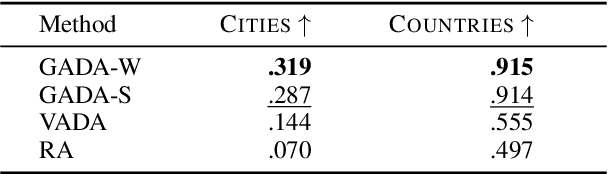

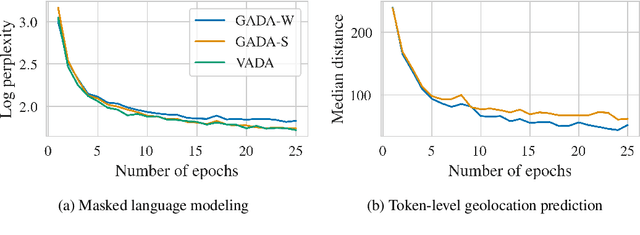

Geographic linguistic features are commonly used to improve the performance of pretrained language models (PLMs) on NLP tasks where geographic knowledge is intuitively beneficial (e.g., geolocation prediction and dialect feature prediction). Existing work, however, leverages such geographic information in task-specific fine-tuning, failing to incorporate it into PLMs' geo-linguistic knowledge, which would make it transferable across different tasks. In this work, we introduce an approach to task-agnostic geoadaptation of PLMs that forces the PLM to learn associations between linguistic phenomena and geographic locations. More specifically, geoadaptation is an intermediate training step that couples masked language modeling and geolocation prediction in a dynamic multitask learning setup. In our experiments, we geoadapt BERTić, a PLM for Bosnian, Croatian, Montenegrin, and Serbian (BCMS), using a corpus of geotagged BCMS tweets. Evaluation on three different tasks, namely unsupervised (zero-shot) and supervised geolocation prediction and (unsupervised) prediction of dialect features, shows that our geoadaptation approach is very effective: e.g., we obtain new state-of-the-art performance in supervised geolocation prediction and report massive gains over geographically uninformed PLMs on zero-shot geolocation prediction.
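To make the idea of coupling two objectives in a dynamic multitask setup more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. The abstract does not say how the two losses are weighted, so the sketch assumes a simplified form of uncertainty-based weighting, in which each task has a learnable log-variance that scales its loss. The function names, the loss values, and the learning rate are all hypothetical and chosen only for illustration.

```python
import math


def uncertainty_weighted_loss(mlm_loss, geo_loss, s_mlm, s_geo):
    """Combine two task losses with learnable log-variances (assumed scheme).

    Each task loss is scaled by exp(-s) and regularized by +s, so a task
    cannot be ignored simply by inflating its log-variance s.
    """
    return (math.exp(-s_mlm) * mlm_loss + s_mlm
            + math.exp(-s_geo) * geo_loss + s_geo)


def update_log_variance(s, task_loss, lr=0.1):
    """One gradient-descent step on s for the term exp(-s) * task_loss + s."""
    grad = 1.0 - math.exp(-s) * task_loss
    return s - lr * grad


# Made-up loss values standing in for the masked language modeling loss and
# the geolocation prediction loss at some point during training.
mlm_loss, geo_loss = 2.0, 0.5
s_mlm, s_geo = 0.0, 0.0  # both tasks start with weight exp(0) = 1

# Holding the losses fixed, let the log-variances adapt.
for _ in range(500):
    s_mlm = update_log_variance(s_mlm, mlm_loss)
    s_geo = update_log_variance(s_geo, geo_loss)

weight_mlm = math.exp(-s_mlm)
weight_geo = math.exp(-s_geo)
print(f"MLM weight: {weight_mlm:.3f}, geolocation weight: {weight_geo:.3f}")
```

With the task losses held fixed, each weight settles at the inverse of its task's loss, so the task with the larger loss is down-weighted and neither objective dominates. In real training the losses change at every step and the log-variances would be optimized jointly with the model parameters, which is what makes the weighting dynamic.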
