GENESIS: Generative Scene Inference and Sampling with Object-Centric Latent Representations

Jul 30, 2019

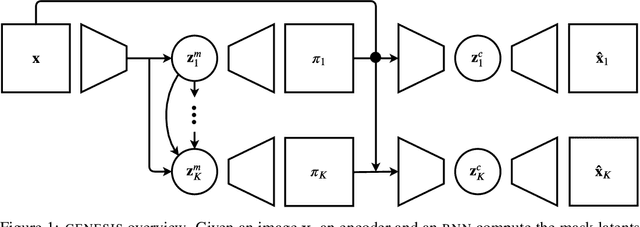

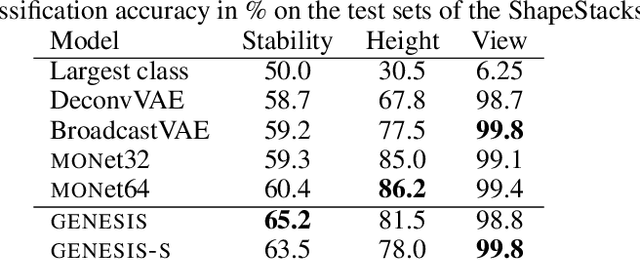

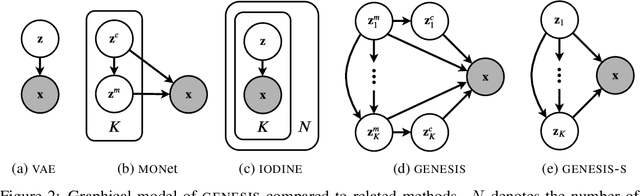

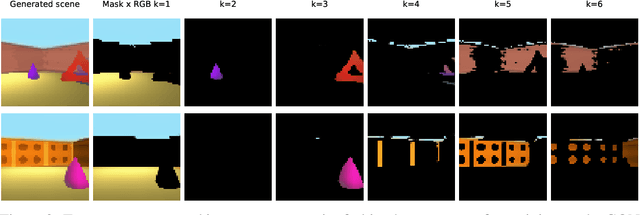

Generative models are emerging as promising tools in robotics and reinforcement learning. Yet, even though tasks in these domains typically involve distinct objects, most state-of-the-art methods do not explicitly capture the compositional nature of visual scenes. Two exceptions, MONet and IODINE, decompose scenes into objects in an unsupervised fashion via a set of latent variables. Their underlying generative processes, however, do not account for component interactions. Hence, neither of them allows for principled sampling of coherent scenes. Here we present GENESIS, the first object-centric generative model of visual scenes capable of both decomposing and generating complete scenes by explicitly capturing relationships between scene components. GENESIS parameterises a spatial Gaussian mixture model (GMM) over pixels, which is encoded by component-wise latent variables that are either inferred sequentially or sampled from an autoregressive prior. We train GENESIS on two publicly available datasets and probe the information in the latent representations through a set of classification tasks, outperforming several baselines.
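To make the idea of a spatial GMM over pixels concrete, the following is a minimal sketch of how an image likelihood could be evaluated under such a mixture. It is not the authors' implementation: the function names, the flattened greyscale image, and the fixed standard deviation `std=0.7` are all assumptions chosen for illustration. Each component `k` contributes a per-pixel mixing probability (`masks[k][i]`, summing to one over components at every pixel) and a per-pixel mean (`means[k][i]`); in a full model both would be decoded from that component's latent variable.

```python
import math


def log_normal(x, mean, std):
    """Log-density of a univariate Gaussian N(x | mean, std^2)."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)


def spatial_gmm_log_likelihood(image, masks, means, std=0.7):
    """Log-likelihood of a flattened greyscale image under a spatial GMM.

    image: list of pixel intensities, one per pixel.
    masks: masks[k][i] is the mixing probability of component k at pixel i
           (assumed to sum to 1 over k for every pixel).
    means: means[k][i] is the Gaussian mean of component k at pixel i.
    std:   shared standard deviation (a hypothetical fixed value).
    """
    total = 0.0
    for i, x in enumerate(image):
        # Per-component log(pi_k) + log N(x | mu_k, std^2), skipping zero-weight components.
        terms = [
            math.log(masks[k][i]) + log_normal(x, means[k][i], std)
            for k in range(len(masks))
            if masks[k][i] > 0
        ]
        # Log-sum-exp over components for numerical stability.
        m = max(terms)
        total += m + math.log(sum(math.exp(t - m) for t in terms))
    return total


# Two pixels, two components: each component "owns" one pixel.
image = [0.0, 1.0]
masks = [[0.9, 0.1], [0.1, 0.9]]
means = [[0.0, 0.0], [1.0, 1.0]]
log_lik = spatial_gmm_log_likelihood(image, masks, means)
```

Swapping the two mask rows in this example lowers the log-likelihood, because each pixel is then mostly assigned to the component whose mean does not match it. This is the sense in which the mixing probabilities act as a soft segmentation of the scene.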
