Generating gapless land surface temperature with a high spatio-temporal resolution by fusing multi-source satellite-observed and model-simulated data


Nov 29, 2021

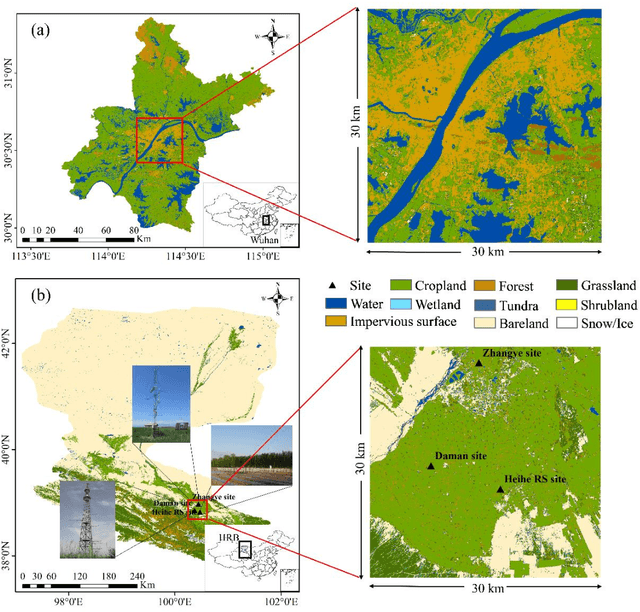

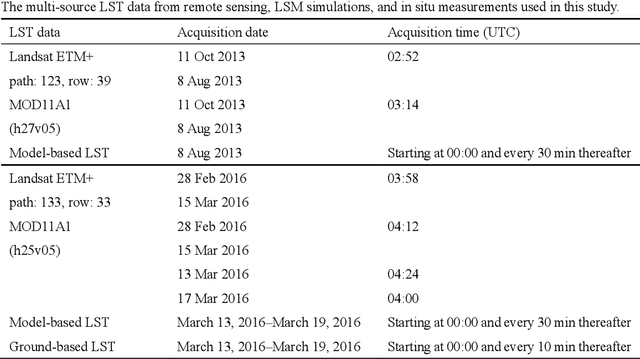

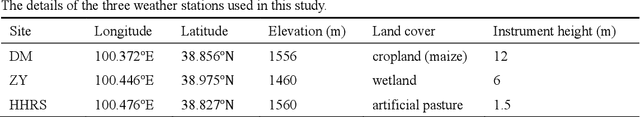

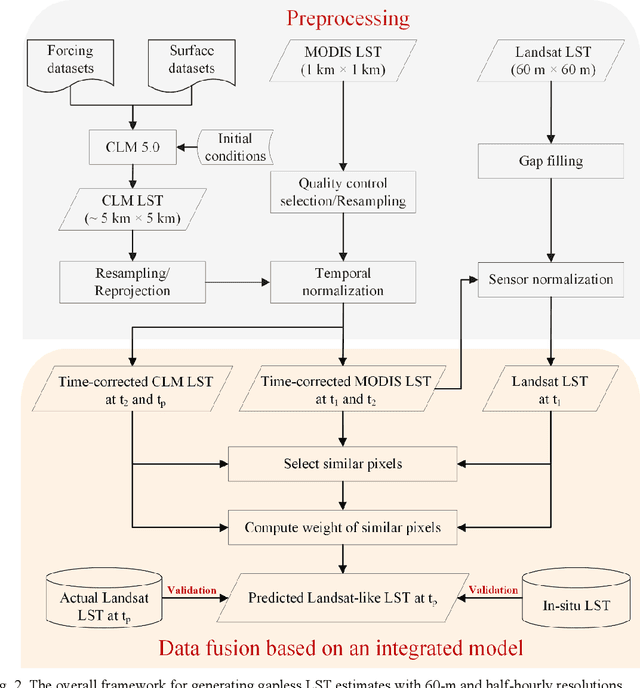

Land surface temperature (LST) is a key parameter for monitoring land surface processes. However, cloud contamination and the tradeoff between spatial and temporal resolution greatly impede access to high-quality thermal infrared (TIR) remote sensing data. Despite the considerable effort devoted to these problems, it remains difficult to generate LST estimates that are both spatially complete and of high spatio-temporal resolution. Land surface models (LSMs) can simulate gapless LST at a high temporal resolution, but usually only at a low spatial resolution. In this paper, we present an integrated temperature fusion framework for satellite-observed and LSM-simulated LST data to map gapless LST at a 60-m spatial resolution and a half-hourly temporal resolution. The global linear model (GloLM) and the diurnal land surface temperature cycle (DTC) model are applied as preprocessing steps for sensor normalization and temporal normalization, respectively, between the different LST data. The Landsat LST, Moderate Resolution Imaging Spectroradiometer (MODIS) LST, and Community Land Model Version 5.0 (CLM 5.0)-simulated LST are then fused using a filter-based spatio-temporal integrated fusion model. The framework was evaluated in an urban-dominated region (the city of Wuhan, China) and a natural-dominated region (the Heihe River Basin, China) in terms of accuracy, spatial variability, and diurnal temporal dynamics. The results indicate that the fused LST is highly consistent with the actual Landsat LST data (values in parentheses refer to in situ LST measurements), with a Pearson correlation coefficient of 0.94 (0.97-0.99), a mean absolute error of 0.71-0.98 K (0.82-3.17 K), and a root-mean-square error of 0.97-1.26 K (1.09-3.97 K).
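The three accuracy measures quoted in the abstract (Pearson correlation coefficient, mean absolute error, and root-mean-square error) can be illustrated with a minimal Python sketch. This is not the authors' evaluation code: the function name `evaluate_lst` and the sample values are hypothetical, and the sketch assumes the fused and reference LST have already been paired pixel by pixel (or site by site) and are expressed in kelvin.

```python
import math


def evaluate_lst(fused, reference):
    """Return (pearson_r, mae, rmse) for paired LST samples in kelvin.

    `fused` and `reference` are equally long sequences of floats; the
    pairing itself (same pixel, same time) is assumed to be done upstream.
    """
    if len(fused) != len(reference) or len(fused) < 2:
        raise ValueError("need two equally sized samples with at least 2 values")

    n = len(fused)
    mean_f = sum(fused) / n
    mean_r = sum(reference) / n

    # Pearson r: covariance normalized by the product of the spreads.
    cov = sum((f - mean_f) * (r - mean_r) for f, r in zip(fused, reference))
    var_f = sum((f - mean_f) ** 2 for f in fused)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    if var_f == 0 or var_r == 0:
        raise ValueError("Pearson r is undefined for a constant sample")
    pearson_r = cov / math.sqrt(var_f * var_r)

    # MAE and RMSE are computed from the per-sample differences.
    errors = [f - r for f, r in zip(fused, reference)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return pearson_r, mae, rmse


# Made-up illustrative values, not data from the paper.
fused_lst = [300.0, 302.0, 304.0, 306.0]
reference_lst = [301.0, 302.0, 303.0, 307.0]
r, mae, rmse = evaluate_lst(fused_lst, reference_lst)
print(f"r={r:.3f}  MAE={mae:.2f} K  RMSE={rmse:.2f} K")
```

In practice, cloud-masked or missing pixels would need to be dropped from both samples before the comparison; that filtering step is left out of the sketch.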
