Generalizing to any diverse distribution: uniformity, gentle finetuning and rebalancing


Oct 08, 2024

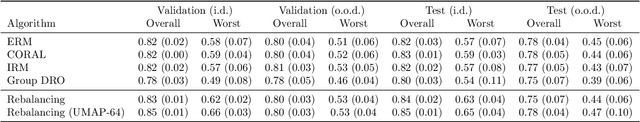

As training datasets grow larger, we aspire to develop models that generalize well to any diverse test distribution, even if the latter deviates significantly from the training data. Approaches such as domain adaptation, domain generalization, and robust optimization address the out-of-distribution (o.o.d.) challenge by making assumptions about the relation between the training and test distributions. In contrast, we adopt a more conservative perspective by accounting for the worst-case error across all sufficiently diverse test distributions within a known domain. Our first finding is that training on a uniform distribution over this domain is optimal. We also investigate practical remedies for when uniform samples are unavailable, considering methods that mitigate non-uniformity through finetuning and rebalancing. Our theory provides a mathematical grounding for previous observations on the role of entropy and rebalancing in o.o.d. generalization and foundation model training. We also provide new empirical evidence, across tasks involving o.o.d. shifts, that illustrates the broad applicability of our perspective.
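To make the idea of rebalancing concrete, the following is a minimal sketch and not the method from the paper. It assumes a one-dimensional domain `[low, high]` and uses a simple histogram to give each sample a weight inversely proportional to how crowded its bin is, so that the reweighted training data places equal mass on every non-empty bin. The function name `rebalancing_weights` and its parameters are hypothetical, chosen only for illustration.

```python
import numpy as np

def rebalancing_weights(x, bins=10, low=0.0, high=1.0):
    """Illustrative inverse-frequency weights over a 1-D domain (an assumption,
    not the paper's estimator). Returns (weights, bin_index)."""
    x = np.asarray(x, dtype=float)
    edges = np.linspace(low, high, bins + 1)
    # Assign each sample to a bin using the interior edges; clip keeps
    # boundary values inside the valid range 0..bins-1.
    idx = np.clip(np.digitize(x, edges[1:-1]), 0, bins - 1)
    counts = np.bincount(idx, minlength=bins)
    # Each sample is down-weighted by the number of samples sharing its bin.
    w = 1.0 / counts[idx]
    return w / w.sum(), idx

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.beta(2.0, 5.0, size=5000)  # skewed, non-uniform training samples
    w, idx = rebalancing_weights(x)
    # Weighted mass per bin: equal across all non-empty bins.
    print(np.bincount(idx, weights=w, minlength=10))
```

The weights could be passed to a weighted loss or used as sampling probabilities. In higher dimensions a histogram is no longer practical and some other density estimate would be needed; that choice is outside the scope of this sketch.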
