Generalizing Energy-based Generative ConvNets from Particle Evolution Perspective


Oct 31, 2019

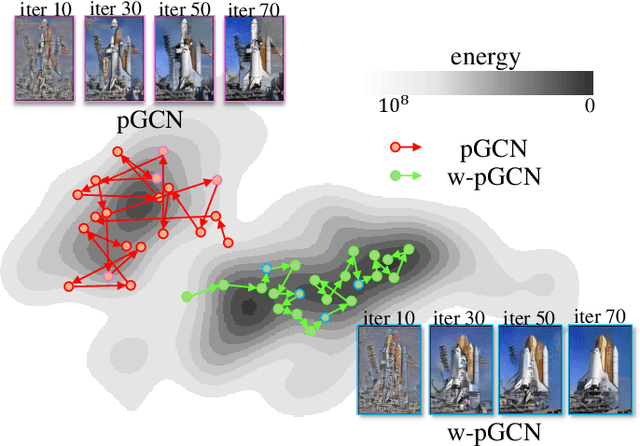

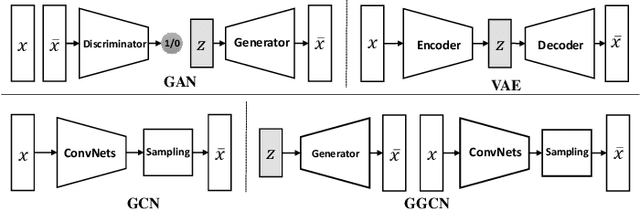

Compared with Generative Adversarial Networks (GANs), the Energy-Based generative Model (EBM) possesses two appealing properties: i) it can be directly optimized without requiring an auxiliary network during learning and synthesis; ii) it can better approximate the underlying distribution of the observed data by explicitly learning potential functions. This paper studies a branch of EBMs, i.e., the energy-based Generative ConvNet (GCN), which minimizes its energy function defined by a bottom-up ConvNet. From the perspective of particle physics, we solve the problem of unstable energy dissipation that might damage the quality of the synthesized samples during maximum likelihood learning. Specifically, we establish a connection between the FRAME model [1] and a dynamic physical process, and provide a generalized formulation of FRAME as a discrete flow with a certain metric measure from the particle perspective. To address the KL-vanishing issue, we generalize the reformulated GCN from the KL discrete flow with the KL divergence measure to a Jordan-Kinderlehrer-Otto (JKO) discrete flow with the Wasserstein distance metric and derive a Wasserstein GCN (w-GCN). To further minimize the learning bias and improve model generalization, we present a Generalized GCN (GGCN). GGCN introduces a hidden space mapping strategy and employs a normal distribution as the hidden space for the reference distribution. In addition, it applies a matching trainable non-linear upsampling function for further generalization. Considering the limited efficiency of MCMC-based learning of EBMs, an amortized learning scheme is also proposed to improve learning efficiency. Quantitative and qualitative experiments are conducted on several widely used face and natural image datasets. Our experimental results surpass those of existing models in both model stability and the quality of generated samples.
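To make the particle view of MCMC-based synthesis concrete, the following is a minimal sketch rather than the paper's method. It assumes Langevin dynamics as the sampler (the abstract does not name one) and replaces the ConvNet energy with a toy one-dimensional quadratic energy. The names `energy_grad` and `langevin_sample`, and all parameter values, are hypothetical and chosen only for illustration.

```python
import math
import random


def energy_grad(x, mu=2.0, sigma=0.5):
    """Gradient of the toy energy E(x) = (x - mu)^2 / (2 * sigma^2).

    Assumption: this quadratic stands in for a ConvNet-defined energy.
    """
    return (x - mu) / (sigma ** 2)


def langevin_sample(n_particles=1000, n_steps=300, step=0.01, seed=0):
    """Evolve particles with unadjusted Langevin dynamics.

    Each particle takes a gradient step that lowers its energy and
    receives Gaussian noise with variance 2 * step.
    """
    rng = random.Random(seed)
    # Particles start from a standard normal reference distribution.
    particles = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    noise_scale = math.sqrt(2.0 * step)
    for _ in range(n_steps):
        particles = [
            x - step * energy_grad(x) + noise_scale * rng.gauss(0.0, 1.0)
            for x in particles
        ]
    return particles


if __name__ == "__main__":
    samples = langevin_sample()
    mean = sum(samples) / len(samples)
    var = sum((s - mean) ** 2 for s in samples) / len(samples)
    print(f"sample mean = {mean:.3f}, sample variance = {var:.3f}")
```

In this toy setting the particles drift from the normal reference distribution toward the low-energy region around `mu`, and their spread settles close to `sigma ** 2`. The cost of running many such steps for every update is the kind of inefficiency that motivates amortized learning.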
