Flower: A Friendly Federated Learning Research Framework

Paper and Code

Jul 28, 2020

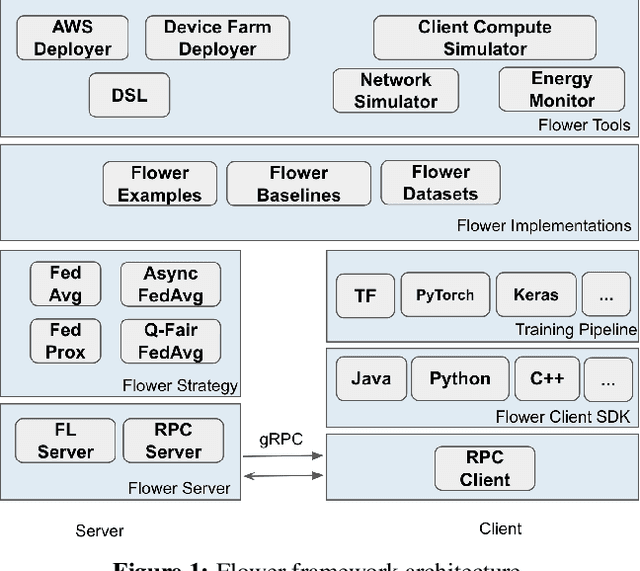

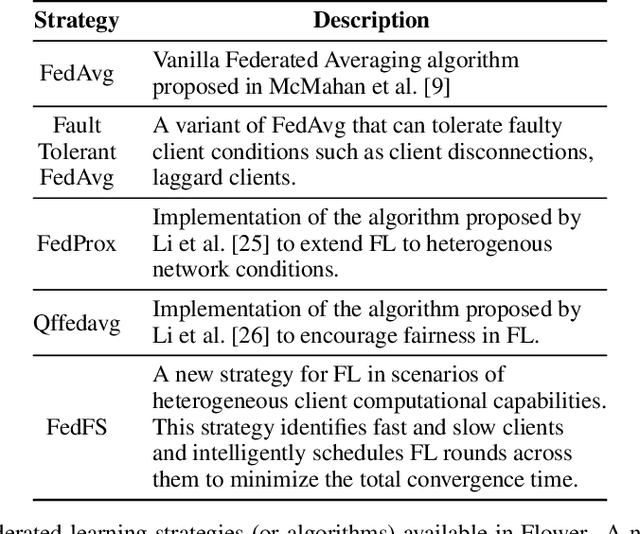

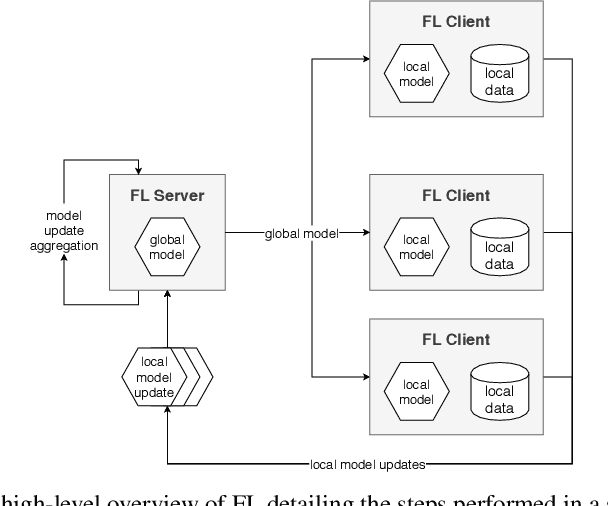

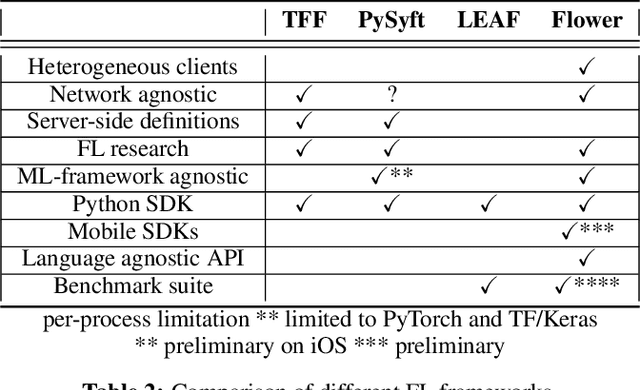

Federated Learning (FL) has emerged as a promising technique for edge devices to collaboratively learn a shared prediction model, while keeping their training data on the device, thereby decoupling the ability to do machine learning from the need to store the data in the cloud. However, FL is difficult to implement and deploy in practice, considering the heterogeneity in mobile devices, e.g., different programming languages, frameworks, and hardware accelerators. Although there are a few frameworks available to simulate FL algorithms (e.g., TensorFlow Federated), they do not support implementing FL workloads on mobile devices. Furthermore, these frameworks are designed to simulate FL in a server environment and hence do not allow experimentation in distributed mobile settings for a large number of clients. In this paper, we present Flower (https://flower.dev/), a FL framework which is both agnostic towards heterogeneous client environments and also scales to a large number of clients, including mobile and embedded devices. Flower's abstractions let developers port existing mobile workloads with little overhead, regardless of the programming language or ML framework used, while also allowing researchers flexibility to experiment with novel approaches to advance the state-of-the-art. We describe the design goals and implementation considerations of Flower and show our experiences in evaluating the performance of FL across clients with heterogeneous computational and communication capabilities.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge