Flare-Aware Cross-modal Enhancement Network for Multi-spectral Vehicle Re-identification


May 23, 2023

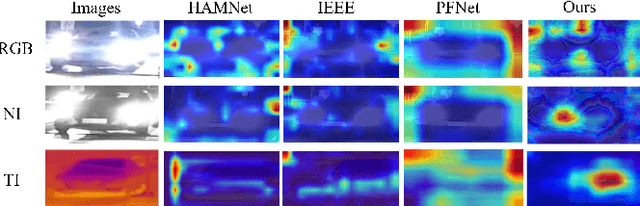

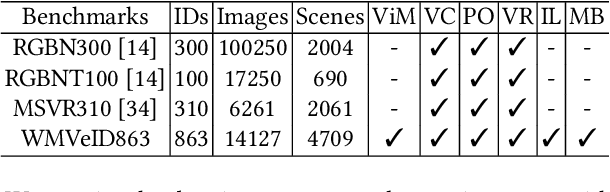

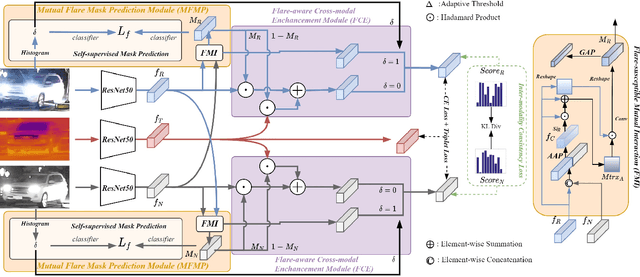

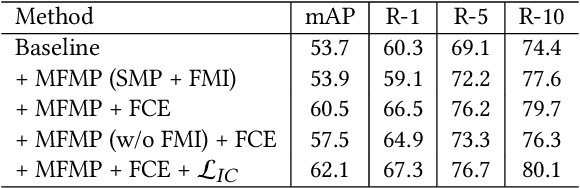

Multi-spectral vehicle re-identification (re-ID) aims to identify vehicles under complex lighting conditions by combining complementary visible and infrared information. In harsh environments, however, the discriminative cues in the RGB and near-infrared (NIR) modalities are often lost to strong flares from vehicle lamps or sunlight, and existing multi-modal fusion methods have limited ability to recover them. To address this problem, we propose a Flare-Aware Cross-modal Enhancement Network (FACENet) that adaptively restores flare-corrupted RGB and NIR features with guidance from the flare-immunized thermal infrared (TI) spectrum. First, to reduce the influence of locally degraded appearance caused by intense flare, we propose a Mutual Flare Mask Prediction module that jointly obtains flare-corrupted masks in the RGB and NIR modalities in a self-supervised manner. Second, to use the flare-immunized TI information to enhance the masked RGB and NIR features, we propose a Flare-Aware Cross-modal Enhancement module that adaptively guides feature extraction from the masked RGB and NIR spectra with prior flare-immunized knowledge from the TI spectrum. Third, to extract the informative semantic content shared by RGB and NIR, we propose an Inter-modality Consistency loss that enforces semantic consistency between the two modalities. Finally, to evaluate how FACENet handles intense flare, we introduce a new multi-spectral vehicle re-ID dataset, WMVEID863, which adds challenges such as motion blur, significant background changes, and particularly intense flare degradation. Comprehensive experiments on both the newly collected dataset and public multi-spectral vehicle re-ID benchmarks show that FACENet outperforms state-of-the-art methods, especially under strong flares. The code and dataset will be released soon.
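
The abstract does not give the exact formulations, so the following is only a minimal illustrative sketch of two of the ideas it names: suppressing flare-corrupted locations with a mask, and penalizing semantic disagreement between RGB and NIR features. The function names (`mask_flare`, `consistency_loss`), the soft-mask convention (1 marks flare), and the use of cosine distance are all assumptions made for illustration; they are not the authors' implementation.

```python
import math


def mask_flare(feature_map, flare_mask):
    """Zero out flare-corrupted locations in a 2-D feature map.

    Assumption: flare_mask holds values in [0, 1], where 1 marks a
    location predicted to be corrupted by flare.
    """
    return [
        [value * (1.0 - mask) for value, mask in zip(feat_row, mask_row)]
        for feat_row, mask_row in zip(feature_map, flare_mask)
    ]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def consistency_loss(rgb_feats, nir_feats):
    """Mean cosine distance between paired RGB and NIR feature vectors.

    Assumption: cosine distance stands in for whatever consistency
    measure the paper actually uses.
    """
    if not rgb_feats or len(rgb_feats) != len(nir_feats):
        raise ValueError("expected two non-empty batches of equal size")
    total = sum(
        1.0 - cosine_similarity(rgb, nir)
        for rgb, nir in zip(rgb_feats, nir_feats)
    )
    return total / len(rgb_feats)
```

Under these assumptions, the loss is near zero when paired RGB and NIR embeddings point in the same direction and grows as they diverge, which is the behavior a consistency term of this kind is meant to encourage.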
