FedEval: A Benchmark System with a Comprehensive Evaluation Model for Federated Learning


Nov 25, 2020

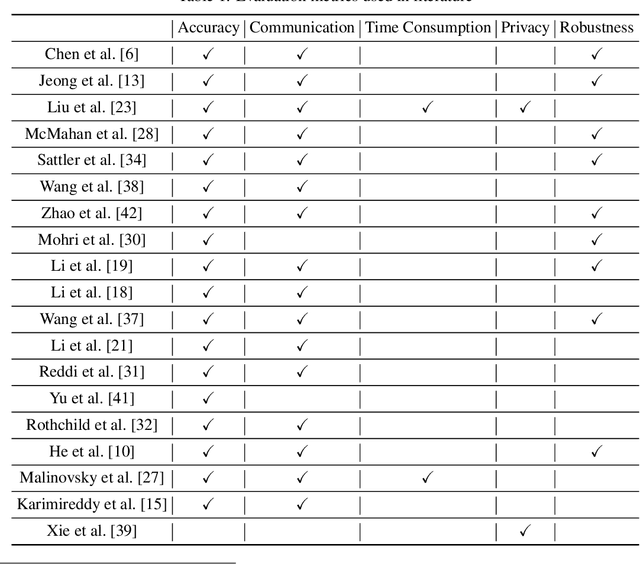

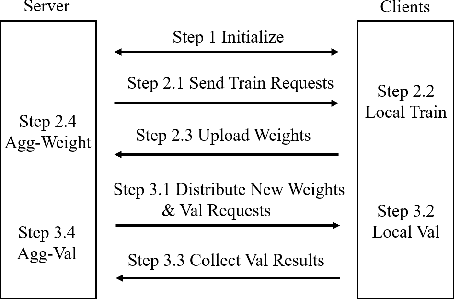

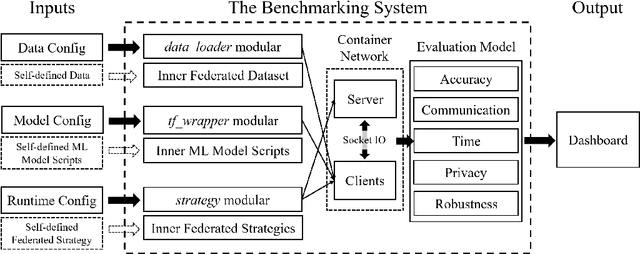

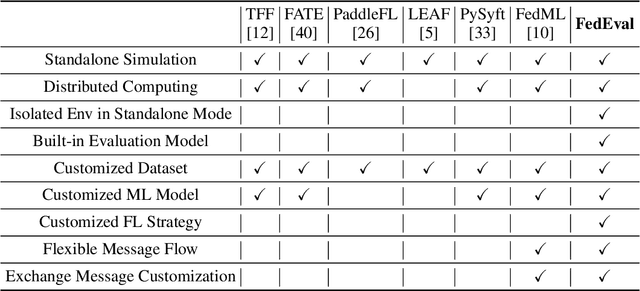

As an innovative solution for privacy-preserving machine learning (ML), federated learning (FL) is attracting much attention from both research and industry. Although new techniques proposed in the past few years have advanced the field, the evaluation results presented in these works are often incomplete and hardly comparable, because of inconsistent evaluation metrics and the lack of a common platform. In this paper, we propose a comprehensive evaluation framework for FL systems. Specifically, we first introduce the ACTPR model, which defines five metrics that are indispensable in FL evaluation: Accuracy, Communication, Time efficiency, Privacy, and Robustness. We then design and implement a benchmarking system called FedEval, which enables the systematic evaluation and comparison of existing works under consistent experimental conditions. Next, we provide an in-depth benchmarking study of the two most widely used FL mechanisms, FedSGD and FedAvg. The benchmarking results show that FedSGD and FedAvg both have advantages and disadvantages under the ACTPR model. For example, FedSGD is barely affected by the non-independent and identically distributed (non-IID) data problem, whereas FedAvg suffers a decline in accuracy of up to 9% in our experiments. On the other hand, FedAvg is more efficient than FedSGD in terms of time consumption and communication. Lastly, we distill a set of take-away conclusions intended to help researchers in the FL area.
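To make the FedSGD and FedAvg comparison concrete, the following is a minimal sketch of one aggregation round of each mechanism on a toy one-parameter model `y = w * x`. It is not FedEval code and does not reproduce the paper's experiments. All names (`local_gradient`, `fedsgd_round`, `fedavg_round`), the squared-error loss, and the toy data are assumptions chosen for illustration. The sketch only shows why FedAvg tends to need fewer communication rounds: each client takes several local steps before the server averages the models, whereas FedSGD averages a single gradient per round.

```python
from typing import List, Tuple

# Hypothetical client data: (xs, ys) pairs for the toy model y = w * x.
Client = Tuple[List[float], List[float]]


def local_gradient(w: float, xs: List[float], ys: List[float]) -> float:
    """Gradient of 0.5 * mean((w*x - y)^2) with respect to w."""
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def weighted_mean(values: List[float], sizes: List[int]) -> float:
    """Average of values, weighted by each client's sample count."""
    return sum(v * s for v, s in zip(values, sizes)) / sum(sizes)


def fedsgd_round(w: float, clients: List[Client], lr: float) -> float:
    """FedSGD: clients send one gradient; the server averages and steps."""
    grads = [local_gradient(w, xs, ys) for xs, ys in clients]
    sizes = [len(xs) for xs, _ in clients]
    return w - lr * weighted_mean(grads, sizes)


def fedavg_round(w: float, clients: List[Client], lr: float,
                 local_steps: int) -> float:
    """FedAvg: clients run several local steps; the server averages models."""
    local_models = []
    for xs, ys in clients:
        w_k = w
        for _ in range(local_steps):
            w_k -= lr * local_gradient(w_k, xs, ys)
        local_models.append(w_k)
    sizes = [len(xs) for xs, _ in clients]
    return weighted_mean(local_models, sizes)


def train(round_fn, rounds: int, **kwargs) -> float:
    """Run a number of communication rounds starting from w = 0."""
    # Toy data generated from y = 2x, split unevenly across two clients.
    clients: List[Client] = [([1.0, 2.0], [2.0, 4.0]), ([3.0], [6.0])]
    w = 0.0
    for _ in range(rounds):
        w = round_fn(w, clients, **kwargs)
    return w


if __name__ == "__main__":
    print("FedSGD, 50 rounds:", train(fedsgd_round, 50, lr=0.05))
    print("FedAvg, 10 rounds:", train(fedavg_round, 10, lr=0.05, local_steps=5))
```

In this sketch, FedAvg with a single local step reduces to FedSGD, since averaging the locally updated models is then the same as applying the averaged gradient. The toy clients share the same optimum, so the example says nothing about the non-IID accuracy gap reported in the abstract.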
