Feature Sharing and Integration for Cooperative Cognition and Perception with Volumetric Sensors


Dec 04, 2020

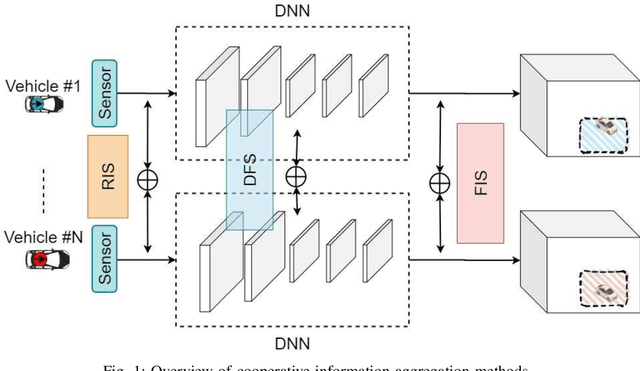

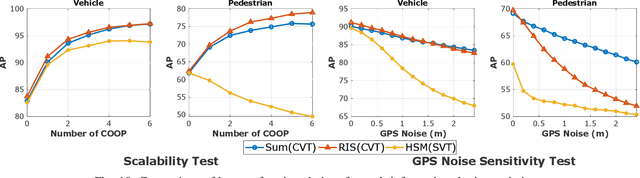

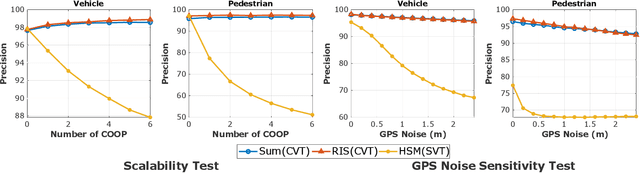

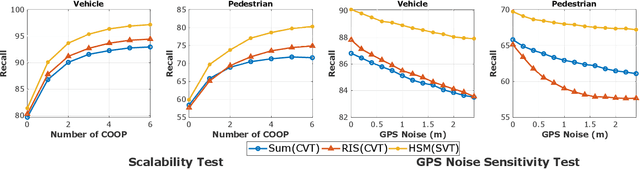

Recent advances in computational and communication systems have led to high-performing neural networks and high-speed wireless vehicular communication networks. As a result, new technologies such as cooperative perception and cognition have emerged. They address the inherent limitations of sensing devices by enabling the detection of partially occluded targets and by extending the sensing range. However, designing a reliable cooperative cognition or perception system requires addressing the challenges posed by limited network resources and by discrepancies between the data shared by different sources. In this paper, we examine the requirements, limitations, and performance of different cooperative perception techniques, and present an in-depth analysis of Deep Feature Sharing (DFS). We explore different cooperative object detection designs and evaluate their performance in terms of average precision, using the Volony dataset for our experimental study. The results confirm that DFS methods are significantly less sensitive to the localization error caused by GPS noise. Furthermore, the results show that the detection gain DFS methods obtain from adding more cooperative participants to a scene is comparable to that of raw information sharing, while DFS allows flexibility in design to meet communication requirements.
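The abstract reports detection performance as average precision. As a rough illustration of that metric only, the following is a minimal sketch of one common way to compute it (area under the all-point interpolated precision-recall curve). The abstract does not state the matching rule, IoU threshold, or interpolation scheme used in the paper, so those details are assumptions here, and the function name `average_precision` and its inputs are hypothetical.

```python
from typing import List, Tuple


def average_precision(
    scored_hits: List[Tuple[float, bool]], num_ground_truth: int
) -> float:
    """Area under the interpolated precision-recall curve.

    scored_hits: one (confidence, is_true_positive) pair per detection.
        Matching detections to ground truth (e.g. by an IoU threshold)
        is assumed to have been done beforehand.
    num_ground_truth: total number of ground-truth objects.
    """
    if num_ground_truth <= 0:
        return 0.0

    # Rank detections from most to least confident.
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)

    precisions: List[float] = []
    recalls: List[float] = []
    true_positives = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        true_positives += int(is_tp)
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)

    # Interpolate: precision at each rank becomes the best precision
    # achieved at that rank or any later (higher-recall) rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum rectangle areas over each increase in recall.
    ap = 0.0
    previous_recall = 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap
```

For example, `average_precision([(0.9, True), (0.8, False), (0.7, True)], num_ground_truth=2)` ranks one false positive between two true positives. Comparing this score with and without simulated position noise on the shared data would be one way to probe the sensitivity to localization error that the abstract describes.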
