Fast Online and Relational Tracking

Paper and Code

Aug 07, 2022

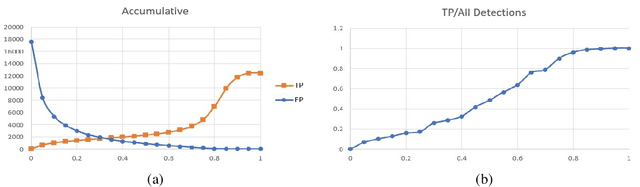

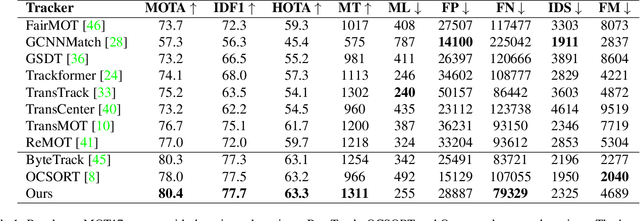

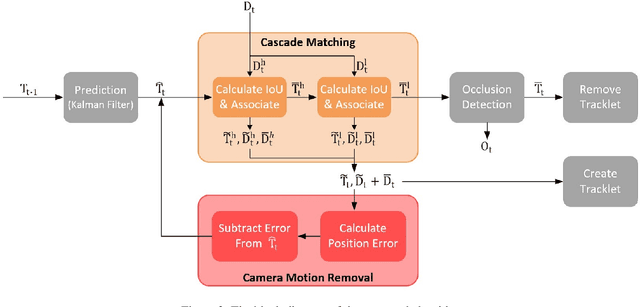

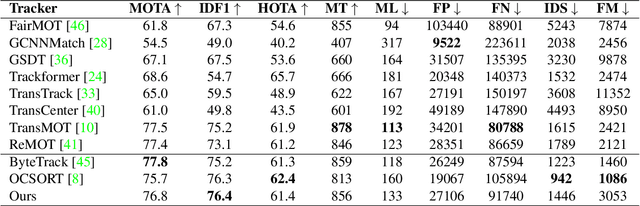

To overcome challenges in the multiple object tracking task, recent algorithms use interaction cues alongside motion and appearance features. These algorithms rely on graph neural networks or transformers to extract interaction features, which leads to high computational cost. This paper presents a novel interaction cue based on geometric features that detects occlusion and re-identifies lost targets at low computational cost. Moreover, most algorithms treat camera motion as negligible, a strong assumption that does not always hold and that leads to ID switches or mismatched targets. This paper also presents a method for measuring camera motion and removing its effect on tracking. The proposed algorithm is evaluated on the MOT17 and MOT20 datasets; it achieves state-of-the-art performance on MOT17 and comparable results on MOT20. The code is publicly available.
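The abstract does not specify how camera motion is measured, so the following is only a minimal sketch of the general idea, not the paper's method. It assumes a translation-only camera model and assumes that matched background points between consecutive frames are already available; the function names `estimate_camera_shift` and `warp_box_to_current` are hypothetical.

```python
from statistics import median


def estimate_camera_shift(prev_pts, curr_pts):
    """Estimate a global (dx, dy) camera shift between two frames.

    Assumption: prev_pts[i] and curr_pts[i] are the same background point
    seen in the previous and current frame. The median displacement is used
    so that a few points lying on moving objects do not dominate.
    """
    dxs = [c[0] - p[0] for p, c in zip(prev_pts, curr_pts)]
    dys = [c[1] - p[1] for p, c in zip(prev_pts, curr_pts)]
    return median(dxs), median(dys)


def warp_box_to_current(box, shift):
    """Move a previous-frame box (x1, y1, x2, y2) into current-frame coordinates."""
    x1, y1, x2, y2 = box
    dx, dy = shift
    return (x1 + dx, y1 + dy, x2 + dx, y2 + dy)


# Illustrative values only: two background points agree on a (3, 1) shift,
# the third point sits on a moving object and acts as an outlier.
prev_pts = [(0, 0), (10, 0), (5, 5)]
curr_pts = [(3, 1), (13, 1), (20, 20)]
shift = estimate_camera_shift(prev_pts, curr_pts)
compensated = warp_box_to_current((10, 10, 20, 30), shift)
```

Once track boxes are shifted this way before association, the remaining displacement between a track and a detection reflects the target's own motion rather than the camera's. A real system would likely need a richer model (for example, an affine or homography transform) when the camera rotates or zooms.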
