Fast Active Monocular Distance Estimation from Time-to-Contact

Paper and Code

Mar 14, 2022

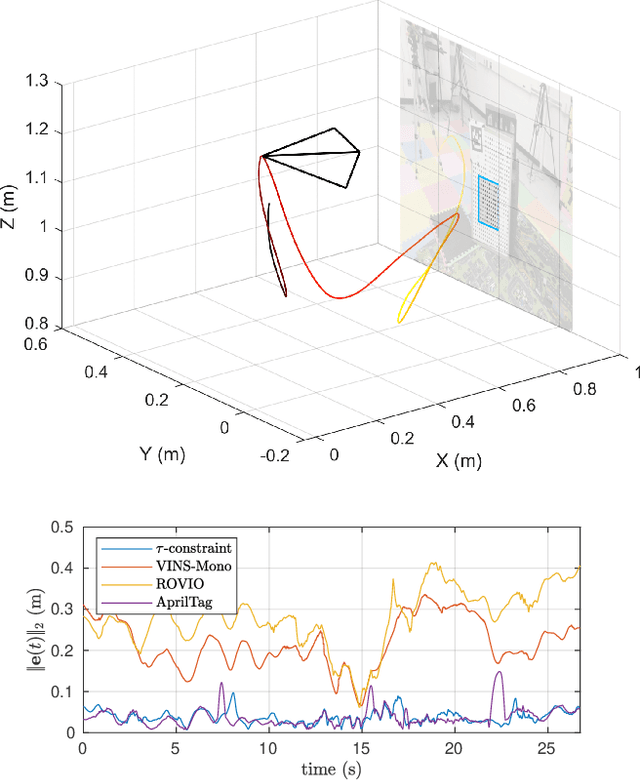

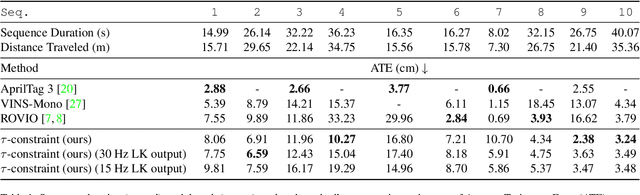

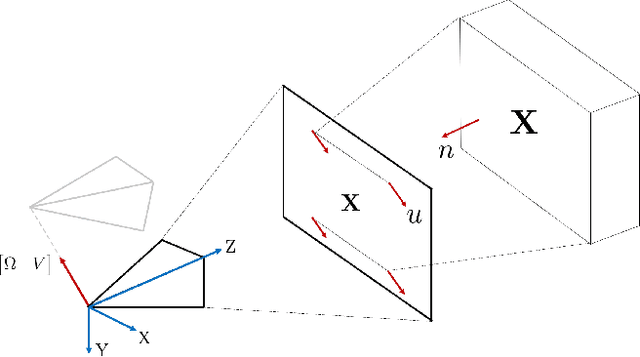

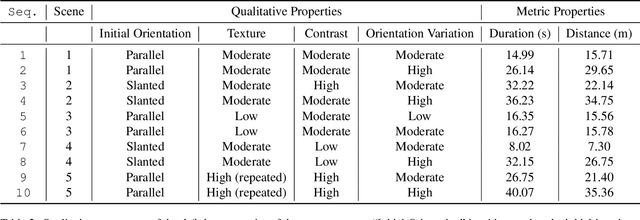

Distance estimation is fundamental for a variety of robotic applications including navigation, manipulation, and planning. Inspired by the mammalian visual system, which gazes at specific objects (active fixation) and estimates when an object will reach it (time-to-contact), we develop a novel constraint between time-to-contact, acceleration, and distance that we call the $\tau$-constraint. It allows an active monocular camera to estimate depth using time-to-contact and inertial measurements (linear accelerations and angular velocities) within a window of time. Our work differs from other approaches by focusing on patches instead of feature points, because the change in the patch area determines the time-to-contact directly. The result enables efficient estimation of distance while using only a small portion of the image, leading to a large speedup. We successfully validate the proposed $\tau$-constraint in the application of estimating camera position with a monocular grayscale camera and an Inertial Measurement Unit (IMU). Specifically, we test our method on different real-world planar objects over trajectories 8-40 seconds in duration and 7-35 meters long. Our method achieves 8.5 cm Average Trajectory Error (ATE), whereas the popular Visual-Inertial Odometry methods VINS-Mono and ROVIO achieve 12.2 and 16.9 cm ATE, respectively. Additionally, our implementation runs 27$\times$ faster than VINS-Mono's and 6.8$\times$ faster than ROVIO's. We believe these results indicate the $\tau$-constraint's potential to be the basis of robust, sophisticated algorithms for a multitude of applications involving an active camera and an IMU.
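To make the idea concrete, the following is a minimal one-dimensional sketch of how patch area, time-to-contact, and acceleration can be combined to recover metric distance. It is not the paper's formulation of the $\tau$-constraint; it assumes a fronto-parallel planar patch, motion purely along the optical axis, and noise-free acceleration along that axis. Under those assumptions the patch area scales as $A \propto 1/Z^2$, so $\tau = -Z/\dot{Z} = 2A/\dot{A}$ and $Z(t)/Z_0 = \sqrt{A_0/A(t)}$. Combining this with $Z(t) = Z_0 + v_0 t + \iint a$ gives a system that is linear in the unknowns $Z_0$ and $v_0$. The function names `time_to_contact`, `cumulative_trapezoid`, and `estimate_distance` are hypothetical helpers introduced for this illustration.

```python
import numpy as np


def time_to_contact(t, area):
    """Time-to-contact from patch area, tau = 2A / (dA/dt).

    Assumes a fronto-parallel planar patch, so that A is proportional to 1/Z^2.
    """
    t = np.asarray(t, dtype=float)
    area = np.asarray(area, dtype=float)
    return 2.0 * area / np.gradient(area, t)


def cumulative_trapezoid(y, t):
    """Cumulative trapezoidal integral of y over t, starting at zero."""
    y = np.asarray(y, dtype=float)
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(y)
    out[1:] = np.cumsum(0.5 * (y[1:] + y[:-1]) * np.diff(t))
    return out


def estimate_distance(t, area, accel):
    """Least-squares estimate of initial distance Z0 and velocity v0.

    Solves Z0 * (s(t) - 1) - v0 * (t - t0) = d(t), where
    s(t) = sqrt(A0 / A(t)) is the depth ratio Z(t) / Z0 and
    d(t) is the double time integral of the axial acceleration.
    (Simplified 1D assumption; not the paper's exact constraint.)
    """
    t = np.asarray(t, dtype=float)
    area = np.asarray(area, dtype=float)
    accel = np.asarray(accel, dtype=float)

    scale = np.sqrt(area[0] / area)
    disp = cumulative_trapezoid(cumulative_trapezoid(accel, t), t)
    design = np.column_stack([scale - 1.0, -(t - t[0])])
    (z0, v0), *_ = np.linalg.lstsq(design, disp, rcond=None)
    return z0, v0


# Synthetic example: the camera starts 2 m from the patch, closing at 0.3 m/s,
# with a small oscillating acceleration along the optical axis.
t = np.linspace(0.0, 2.0, 401)
true_z0, true_v0 = 2.0, -0.3
accel = 0.8 * np.cos(3.0 * t)
depth = true_z0 + true_v0 * t + (0.8 / 9.0) * (1.0 - np.cos(3.0 * t))
area = 0.04 / depth**2  # arbitrary patch constant; only ratios matter

z0_est, v0_est = estimate_distance(t, area, accel)
tau = time_to_contact(t, area)
print(f"estimated Z0 = {z0_est:.3f} m, v0 = {v0_est:.3f} m/s")
```

Note that the acceleration must be non-zero somewhere in the window: with constant velocity the right-hand side vanishes and the system has only the trivial solution, which reflects the usual scale ambiguity of a monocular camera.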
