Fairly Adaptive Negative Sampling for Recommendations

Feb 16, 2023

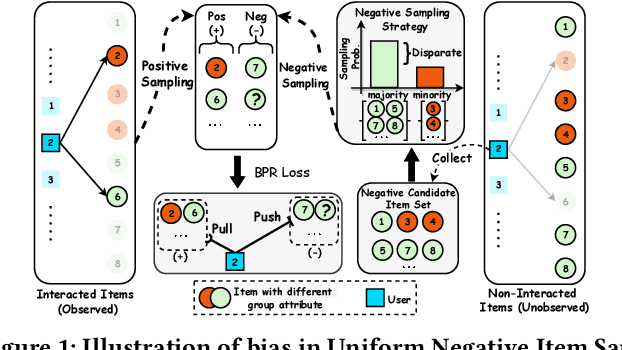

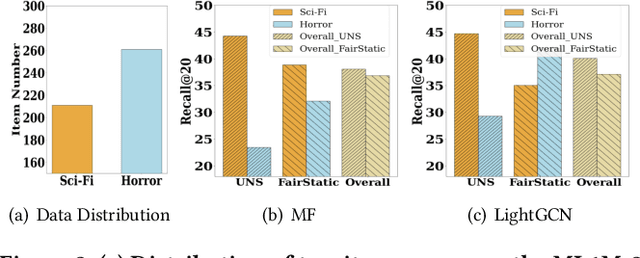

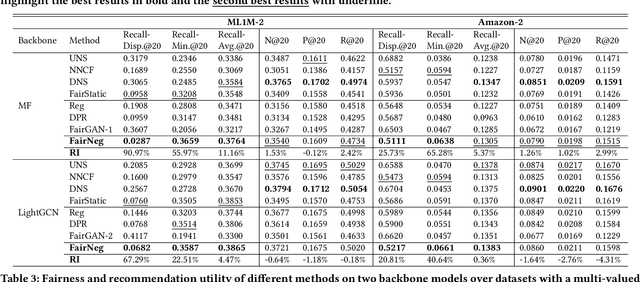

Pairwise learning strategies are prevalent for optimizing recommendation models on implicit feedback data. They usually learn user preference by discriminating between positive items (i.e., clicked by a user) and negative items (i.e., obtained by negative sampling). However, the sizes of different item groups (specified by an item attribute) are usually unevenly distributed. We empirically find that the commonly used uniform negative sampling strategy for pairwise algorithms (e.g., BPR) can inherit such data bias and oversample the majority item group as negative instances, severely undermining group fairness on the item side. In this paper, we propose a Fairly adaptive Negative sampling approach (FairNeg), which improves item group fairness by adaptively adjusting the group-level negative sampling distribution during training. In particular, it first perceives the model's unfairness status at each step and then adjusts the group-wise sampling distribution with an adaptive momentum update strategy to better facilitate fairness optimization. Moreover, a negative sampling distribution Mixup mechanism is proposed, which incorporates existing importance-aware sampling techniques intended for mining informative negative samples, so that fairness and informativeness can be optimized together. Extensive experiments on four public datasets show the proposed method's superiority in group fairness enhancement and in the fairness-utility tradeoff.
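The two mechanisms named in the abstract, a momentum-based update of the group-level sampling distribution and a Mixup with an importance-aware distribution, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the per-group loss used as the unfairness signal, the update rule, and the hyperparameters `lr`, `momentum`, and `beta` are all assumptions made for illustration. The sketch also assumes that a group with above-average loss is the disadvantaged one and should therefore be sampled less often as a negative.

```python
import numpy as np

def update_group_probs(probs, group_loss, velocity, lr=0.5, momentum=0.9):
    """One hypothetical adaptive step on the group-level negative sampling distribution."""
    # Assumed unfairness signal: how far each group's loss sits above the mean
    # (positive values mark groups treated as disadvantaged).
    signal = group_loss - group_loss.mean()
    # Momentum update: smooth the signal across training steps.
    velocity = momentum * velocity + (1.0 - momentum) * signal
    # Sample disadvantaged groups less often as negatives, then renormalize.
    new_probs = np.clip(probs - lr * velocity, 1e-6, None)
    return new_probs / new_probs.sum(), velocity

def mixup_item_distribution(group_probs, item_groups, importance, beta=0.5):
    """Mix a fairness-aware item distribution with an importance-aware one."""
    # Spread each group's probability uniformly over the items in that group.
    counts = np.bincount(item_groups, minlength=len(group_probs))
    fair = group_probs[item_groups] / counts[item_groups]
    fair = fair / fair.sum()
    # Importance scores stand in for any importance-aware sampler's weights.
    imp = importance / importance.sum()
    return beta * fair + (1.0 - beta) * imp

# Toy setup: six items, group 0 is the majority group.
rng = np.random.default_rng(0)
item_groups = np.array([0, 0, 0, 0, 1, 1])
probs = np.array([4 / 6, 2 / 6])      # uniform item sampling implies size-proportional group probs
velocity = np.zeros(2)
group_loss = np.array([0.9, 0.4])     # hypothetical per-group losses; group 0 is worse off

probs, velocity = update_group_probs(probs, group_loss, velocity)
importance = np.ones(len(item_groups))  # placeholder importance scores
item_dist = mixup_item_distribution(probs, item_groups, importance)
negative = rng.choice(len(item_groups), p=item_dist)  # index of the sampled negative item
```

In this toy run the majority group's sampling probability drops below its size-proportional starting value of 4/6, which is the direction the abstract describes. The `beta` weight controls how much the final item distribution follows the fairness-aware component versus the importance-aware one.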
