Fairest of Them All: Establishing a Strong Baseline for Cross-Domain Person ReID


Jul 28, 2019

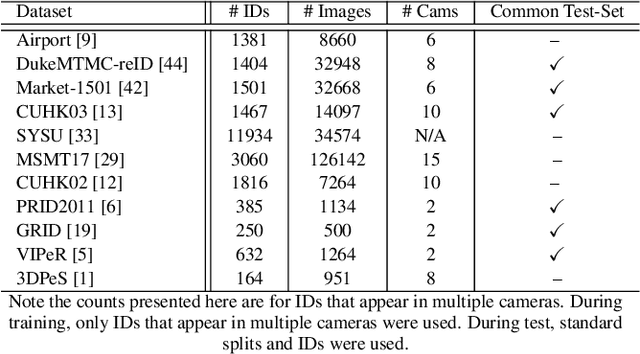

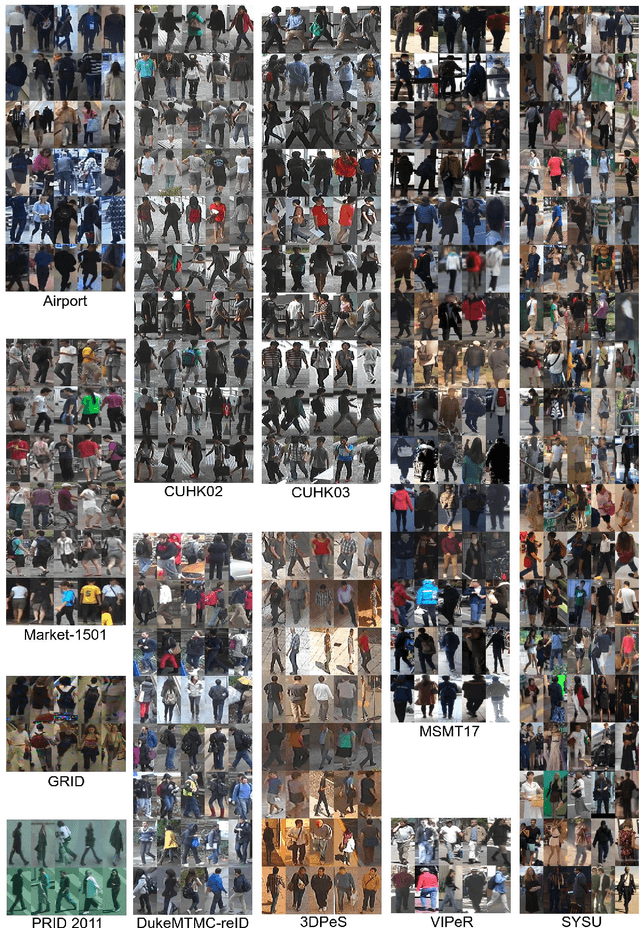

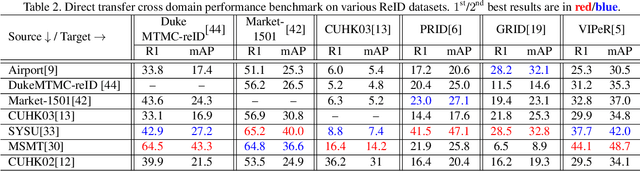

Person re-identification (ReID) remains a very difficult challenge in computer vision and is critical for large-scale video surveillance scenarios, where an individual can appear in different camera views at different times. There has been recent interest in tackling this challenge with cross-domain approaches, which leverage data from source domains that differ from the target domain. Such approaches are more practical for widespread real-world deployment because they require neither on-site training (as with unsupervised or domain transfer approaches) nor on-site manual annotation and training (as with supervised approaches). In this study, we take a systematic approach to establishing a large baseline source domain and target domain for cross-domain person ReID. We accomplish this by conducting a comprehensive analysis of the similarities between source domains proposed in the literature, and by studying the effects of incrementally increasing the size of the source domain. This allows us to establish a balanced source domain and target domain split that promotes variety in both source and target domains. Furthermore, using lessons learned from state-of-the-art supervised person re-identification methods, we establish a strong baseline method for cross-domain person ReID. Experiments show that a source domain composed of two of the largest person ReID domains (SYSU and MSMT) performs well across six commonly used target domains. We also show that, surprisingly, two of the recent commonly used domains (PRID and GRID) have too few query images to provide meaningful insights. Based on our findings, we propose the following balanced baseline for cross-domain person ReID: i) a fixed multi-source domain consisting of SYSU, MSMT, Airport and 3DPeS, and ii) a multi-target domain consisting of Market-1501, DukeMTMC-reID, CUHK03, PRID, GRID and VIPeR.
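The proposed split can be written down as a small configuration, which makes it easy to confirm that no domain is used for both training and testing. The following is only a minimal sketch: the domain names are taken from the abstract, while `SOURCE_DOMAINS`, `TARGET_DOMAINS`, `check_split` and `evaluation_plan` are hypothetical names introduced here for illustration, and the assumption that a single model is trained on the pooled sources and then evaluated on each target separately is ours, not something the abstract states.

```python
# Domain lists copied from the abstract; all identifiers below are hypothetical.
SOURCE_DOMAINS = ("SYSU", "MSMT", "Airport", "3DPeS")
TARGET_DOMAINS = ("Market-1501", "DukeMTMC-reID", "CUHK03", "PRID", "GRID", "VIPeR")


def check_split(sources, targets):
    """Return True when no domain appears on both sides of the split."""
    return set(sources).isdisjoint(targets)


def evaluation_plan(sources, targets):
    """Pair the pooled source set with each target domain.

    Assumption: one model is trained on all sources together and
    tested on every target individually.
    """
    pool = "+".join(sources)
    return [(pool, target) for target in targets]


if __name__ == "__main__":
    if not check_split(SOURCE_DOMAINS, TARGET_DOMAINS):
        raise ValueError("source and target domains overlap")
    for pool, target in evaluation_plan(SOURCE_DOMAINS, TARGET_DOMAINS):
        print(f"train on {pool} -> evaluate on {target}")
```

Keeping the split in one place like this would let an experiment script fail early if a dataset were accidentally added to both lists.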
