F2DNet: Fast Focal Detection Network for Pedestrian Detection

Paper and Code

Mar 04, 2022

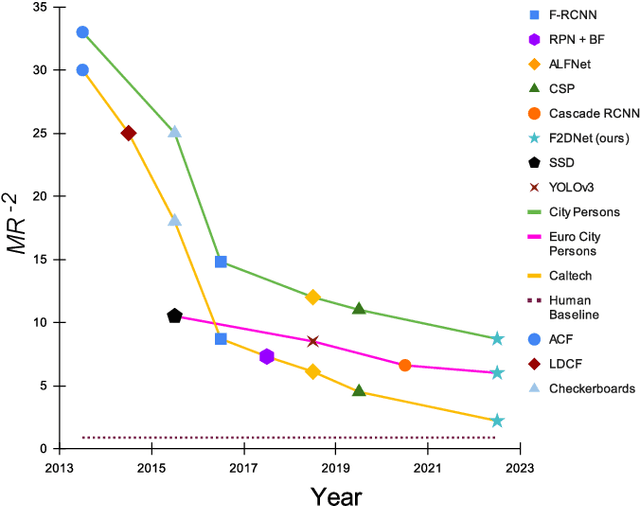

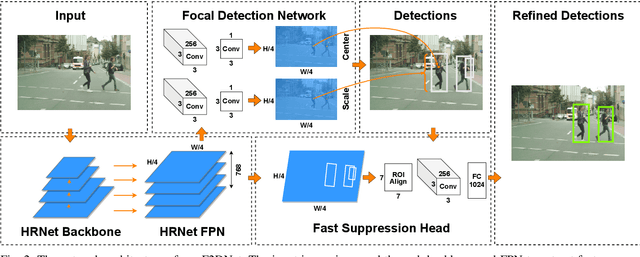

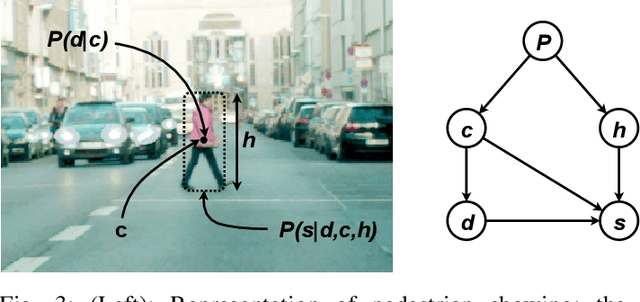

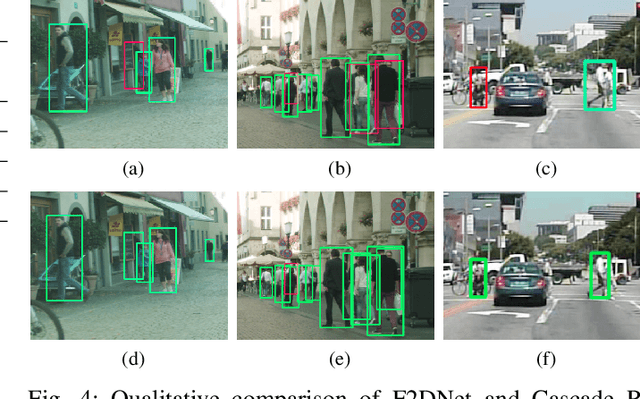

Two-stage detectors are state-of-the-art in object detection as well as pedestrian detection. However, the current two-stage detectors are inefficient as they do bounding box regression in multiple steps i.e. in region proposal networks and bounding box heads. Also, the anchor-based region proposal networks are computationally expensive to train. We propose F2DNet, a novel two-stage detection architecture which eliminates redundancy of current two-stage detectors by replacing the region proposal network with our focal detection network and bounding box head with our fast suppression head. We benchmark F2DNet on top pedestrian detection datasets, thoroughly compare it against the existing state-of-the-art detectors and conduct cross dataset evaluation to test the generalizability of our model to unseen data. Our F2DNet achieves 8.7%, 2.2%, and 6.1% MR-2 on City Persons, Caltech Pedestrian, and Euro City Person datasets respectively when trained on a single dataset and reaches 20.4% and 26.2% MR-2 in heavy occlusion setting of Caltech Pedestrian and City Persons datasets when using progressive fine-tunning. On top of that F2DNet have significantly lesser inference time compared to the current state-of-the-art. Code and trained models will be available at https://github.com/AbdulHannanKhan/F2DNet.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge